Test: Asymptotic Worst Case Time & Space Complexity- 3 - Computer Science Engineering (CSE) MCQ

20 Questions MCQ Test - Test: Asymptotic Worst Case Time & Space Complexity- 3

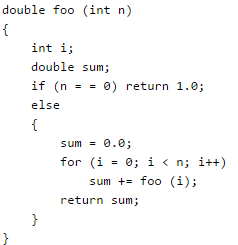

Consider the following C-function:

Suppose we modify the above function foo() and store the values of foo (i), 0 < = i < n, as and when they are computed. With this modification, the time complexity for function foo() is significantly reduced. The space complexity of the modified function would be:

Two matrices M1 and M2 are to be stored in arrays A and B respectively. Each array can be stored either in row-major or column-major order in contiguous memory locations. The time complexity of an algorithm to compute M1 × M2 will be

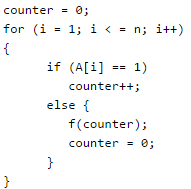

Let A[1, ..., n] be an array storing a bit (1 or 0) at each location, and f(m) is a unction whose time complexity is θ(m). Consider the following program fragment written in a C like language:

Q. The complexity of this program fragment is

The recurrence equation

T(1) = 1

T(n) = 2T(n - 1) + n, n ≥ 2

evaluates to

Consider the following three claims

1. (n + k)m = Θ(nm), where k and m are constants

2. 2n + 1 = O(2n)

3. 22n + 1 = O(2n)

Q. Which of these claims are correct ?

In a permutation a1.....an of n distinct integers, an inversion is a pair (ai, aj) such that i < j and ai > aj. What would be the worst case time complexity of the Insertion Sort algorithm, if the inputs are restricted to permutations of 1.....n with at most n inversions?

Randomized quicksort is an extension of quicksort where the pivot is chosen randomly. What is the worst case complexity of sorting n numbers using randomized quicksort?

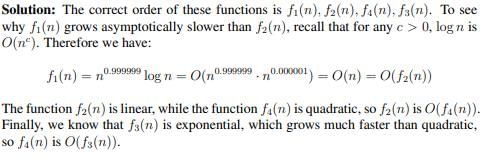

The increasing order of following functions in terms of asymptotic complexity is:

Which one of the following is the recurrence equation for the worst case time complexity of the Quicksort algorithm for sorting n(≥ 2) numbers? In the recurrence equations given in the options below, c is a constant.

Consider the following C function.

Q. Which one of the following most closely approximates the return value of the function fun1?

An unordered list contains n distinct elements. The number of comparisons to find an element in this list that is neither maximum nor minimum is

Q. The equality above remains correct if X is replace by

In the following table, the left column contains the names of standard graph algorithms and the right column contains the time complexities of the algorithms. Match each algorithm with its time complexity.

1. Bellman-Ford algorithm

2. Kruskal’s algorithm

3. Floyd-Warshall algorithm

4. Topological sorting

A : O(m log n)

B : O(n3)

C : O(nm)

D : O(n + m)

Let T(n) be a function defined by the recurrence T(n) = 2T(n/2) + √n for n ≥ 2 and T(1) = 1 Which of the following statements is TRUE?

The worst case running times of Insertion sort, Merge sort and Quick sort, respectively, are:

Assume that the algorithms considered here sort the input sequences in ascending order. If the input is already in ascending order, which of the following are TRUE ?

I. Quicksort runs in Θ(n2) time

II. Bubblesort runs in Θ(n2) time

III. Mergesort runs in Θ(n) time

IV. Insertion sort runs in Θ(n) time

A problem in NP is NP-complete if

The characters a to h have the set of frequencies based on the first 8 Fibonacci numbers as follows

a : 1, b : 1, c : 2, d : 3, e : 5, f : 8, g : 13, h : 21

A Huffman code is used to represent the characters. What is the sequence of characters corresponding to the following code? 110111100111010

What is the size of the smallest MIS(Maximal Independent Set) of a chain of nine nodes?

Arrange the following functions in increasing asymptotic order:

A. n1/3

B. en

C. n7/4

D. n log9n

E. 1.0000001n