Entropy: Definition | Basic Physics for IIT JAM

Entropy is the measure of a system’s thermal energy per unit temperature that is unavailable for doing useful work. Because work is obtained from ordered molecular motion, the amount of entropy is also a measure of the molecular disorder, or randomness, of a system. The concept of entropy provides deep insight into the direction of spontaneous change for many everyday phenomena. Its introduction by the German physicist Rudolf Clausius, who stated the second law of thermodynamics in 1850 and coined the word entropy in 1865, is a highlight of 19th-century physics.

The idea of entropy provides a mathematical way to encode the intuitive notion of which processes are impossible, even though they would not violate the fundamental law of conservation of energy. For example, a block of ice placed on a hot stove surely melts, while the stove grows cooler. Such a process is called irreversible because no slight change will cause the melted water to turn back into ice while the stove grows hotter. In contrast, a block of ice placed in an ice-water bath will either thaw a little more or freeze a little more, depending on whether a small amount of heat is added to or subtracted from the system. Such a process is reversible because only an infinitesimal amount of heat is needed to change its direction from progressive freezing to progressive thawing. Similarly, compressed gas confined in a cylinder could either expand freely into the atmosphere if a valve were opened (an irreversible process), or it could do useful work by pushing a movable piston against the force needed to confine the gas. The latter process is reversible because only a slight increase in the restraining force could reverse the direction of the process from expansion to compression. For reversible processes the system is in equilibrium with its environment, while for irreversible processes it is not.

To provide a quantitative measure for the direction of spontaneous change, Clausius introduced the concept of entropy as a precise way of expressing the second law of thermodynamics. The Clausius form of the second law states that spontaneous change for an irreversible process in an isolated system (that is, one that does not exchange heat or work with its surroundings) always proceeds in the direction of increasing entropy. For example, the block of ice and the stove constitute two parts of an isolated system for which total entropy increases as the ice melts.

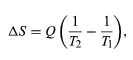

By the Clausius definition, if an amount of heat Q flows into a large heat reservoir at temperature T above absolute zero, then the entropy increase is ΔS = Q/T. This equation effectively gives an alternate definition of temperature that agrees with the usual definition. Assume that there are two heat reservoirs R1 and R2 at temperatures T1 and T2 (such as the stove and the block of ice). If an amount of heat Q flows from R1 to R2, then the net entropy change for the two reservoirs is

$$\Delta S = \frac{Q}{T_2} - \frac{Q}{T_1}$$

which is positive provided that T1 > T2. Thus, the observation that heat never flows spontaneously from cold to hot is equivalent to requiring the net entropy change to be positive for a spontaneous flow of heat. If T1 = T2, then the reservoirs are in equilibrium, no heat flows, and ΔS = 0.
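The sign argument can be checked numerically with a short Python sketch. The function name `net_entropy_change` and the example temperatures (a stove at an assumed 500 K, ice at 273.15 K) are illustrative assumptions, not values from the text; the function simply evaluates Q/T2 − Q/T1 for heat Q leaving R1 and entering R2.

```python
def net_entropy_change(q: float, t1: float, t2: float) -> float:
    """Net entropy change of two large reservoirs when heat q (J) flows
    from reservoir R1 at t1 (K) into reservoir R2 at t2 (K).

    R1 loses entropy q/t1 and R2 gains q/t2, so the total is q/t2 - q/t1.
    """
    if t1 <= 0 or t2 <= 0:
        raise ValueError("temperatures must be in kelvin and above absolute zero")
    return q / t2 - q / t1


# Illustrative (assumed) values: 1000 J moving between a 500 K stove and 273.15 K ice.
print(f"hot -> cold: {net_entropy_change(1000.0, 500.0, 273.15):+.3f} J/K")  # +1.661
print(f"cold -> hot: {net_entropy_change(1000.0, 273.15, 500.0):+.3f} J/K")  # -1.661
print(f"equal T:     {net_entropy_change(1000.0, 300.0, 300.0):+.3f} J/K")   # +0.000
```

Only the hot-to-cold direction gives a positive total, which is the entropy form of the observation that heat does not flow spontaneously from cold to hot.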

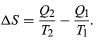

The condition ΔS ≥ 0 determines the maximum possible efficiency of heat engines—that is, systems such as gasoline or steam engines that can do work in a cyclic fashion. Suppose a heat engine absorbs heat Q1 from R1 and exhausts heat Q2 to R2 for each complete cycle. By conservation of energy, the work done per cycle is W = Q1 – Q2, and the net entropy change is

$$\Delta S = \frac{Q_2}{T_2} - \frac{Q_1}{T_1}$$

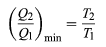

To make W as large as possible, Q2 should be as small as possible relative to Q1. However, Q2 cannot be zero, because this would make ΔS negative and so violate the second law. The smallest possible value of Q2 corresponds to the condition ΔS = 0, yielding

$$(Q_2)_{\min} = Q_1 \frac{T_2}{T_1}$$

as the fundamental equation limiting the efficiency of all heat engines. A process for which ΔS = 0 is reversible because an infinitesimal change would be sufficient to make the heat engine run backward as a refrigerator.
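The limit can be illustrated with a minimal Python sketch, assuming an idealized engine whose working substance returns to its initial state each cycle, so that only the two reservoirs change entropy. The reservoir temperatures (500 K and 300 K), the 1000 J heat input, and the function names are hypothetical example choices.

```python
def cycle_entropy_change(q1: float, q2: float, t1: float, t2: float) -> float:
    """Net entropy change per cycle: R1 loses q1/t1 and R2 gains q2/t2."""
    return q2 / t2 - q1 / t1


def min_exhaust_heat(q1: float, t1: float, t2: float) -> float:
    """Smallest Q2 allowed by the second law (the ΔS = 0 case): Q1*T2/T1."""
    return q1 * t2 / t1


def max_efficiency(t1: float, t2: float) -> float:
    """Largest possible W/Q1 = 1 - (Q2)min/Q1 = 1 - T2/T1."""
    return 1.0 - t2 / t1


q1, t1, t2 = 1000.0, 500.0, 300.0            # assumed example values
q2_min = min_exhaust_heat(q1, t1, t2)        # 600 J must still be exhausted
w_max = q1 - q2_min                          # W = Q1 - Q2, here 400 J
print(q2_min, w_max, max_efficiency(t1, t2))
print(cycle_entropy_change(q1, q2_min, t1, t2))  # 0.0: the reversible limit
print(cycle_entropy_change(q1, 500.0, t1, t2))   # negative: forbidden
```

The ratio 1 − T2/T1 is the familiar Carnot limit. A real engine with friction would exhaust more than (Q2)min per cycle and so deliver less work.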

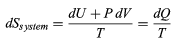

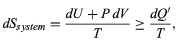

The same reasoning can also determine the entropy change for the working substance in the heat engine, such as a gas in a cylinder with a movable piston. If the gas absorbs an incremental amount of heat dQ from a heat reservoir at temperature T and expands reversibly against the maximum possible restraining pressure P, then it does the maximum work dW = PdV, where dV is the change in volume. The internal energy of the gas might also change by an amount dU as it expands. Then by conservation of energy, dQ = dU + P dV. Because the net entropy change for the system plus reservoir is zero when maximum work is done and the entropy of the reservoir decreases by an amount dSreservoir = −dQ/T, this must be counterbalanced by an entropy increase of

$$dS_{\text{system}} = \frac{dU + P\,dV}{T} = \frac{dQ}{T}$$

for the working gas, so that dSsystem + dSreservoir = 0. For any real process, less than the maximum work would be done (because of friction, for example), and so the actual amount of heat dQ′ absorbed from the heat reservoir would be less than the maximum amount dQ. For example, the gas could be allowed to expand freely into a vacuum and do no work at all. Therefore, it can be stated that

$$dS_{\text{system}} = \frac{dQ}{T} \ge \frac{dQ'}{T}$$

with dQ′ = dQ in the case of maximum work corresponding to a reversible process.
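For a concrete case, assume (this is not stated in the text) that the working substance is n moles of an ideal gas, obeying PV = nRT with U depending only on T, expanding at constant temperature from V1 to V2. Along the reversible path dU = 0, so dQ = P dV, and summing dQ/T numerically should reproduce the closed form nR ln(V2/V1). In a free expansion into a vacuum no work is done and, for an ideal gas, U does not change, so dQ′ = 0 and the inequality is strict. The function name and numbers below are illustrative.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def reversible_isothermal_entropy(n: float, t: float, v1: float, v2: float,
                                  steps: int = 100_000) -> float:
    """Sum dS = dQ/T over a reversible isothermal expansion of an ideal gas."""
    dv = (v2 - v1) / steps
    s_total = 0.0
    for i in range(steps):
        v = v1 + (i + 0.5) * dv   # midpoint of the i-th volume slice
        p = n * R * t / v         # ideal-gas pressure from PV = nRT
        dq = p * dv               # dU = 0 at constant T, so dQ = P dV
        s_total += dq / t         # dS_system = dQ / T
    return s_total


n, t, v1, v2 = 1.0, 300.0, 0.010, 0.020    # assumed: 1 mol doubling its volume at 300 K
ds_rev = reversible_isothermal_entropy(n, t, v1, v2)
ds_exact = n * R * math.log(v2 / v1)
heat_free = 0.0                            # free expansion: W = 0 and dU = 0, so Q' = 0
print(f"sum of dQ/T: {ds_rev:.6f} J/K, nR ln(V2/V1): {ds_exact:.6f} J/K")
print(f"free expansion, sum of dQ'/T: {heat_free / t:.1f} J/K")
```

Both processes end in the same state, so the gas has the same entropy change in each; only the heat actually absorbed differs.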

This equation defines Ssystem as a thermodynamic state variable, meaning that its value is completely determined by the current state of the system and not by how the system reached that state. Entropy is an extensive property in that its magnitude depends on the amount of material in the system.
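Path independence can also be checked numerically. Assume, purely for illustration, one mole of a monatomic ideal gas with constant molar heat capacity C_V = 3R/2, taken from 300 K and 0.010 m³ to 400 K and 0.020 m³ along two reversible paths: isothermal expansion followed by constant-volume heating, or heating first and expansion second. The heat absorbed differs between the paths, but the summed dQ/T should agree with nC_V ln(T2/T1) + nR ln(V2/V1). The helper names are hypothetical.

```python
import math

R = 8.314462618   # molar gas constant, J/(mol K)
CV = 1.5 * R      # assumed: monatomic ideal gas with constant molar C_V


def midpoint_sum(f, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of f from a to b."""
    h = (b - a) / steps
    return sum(f(a + (i + 0.5) * h) for i in range(steps)) * h


def isothermal_leg(n, t, v_a, v_b):
    """(Q, dS) for a reversible isothermal leg, where dQ = nRT dV / V."""
    q = midpoint_sum(lambda v: n * R * t / v, v_a, v_b)
    return q, q / t


def isochoric_leg(n, t_a, t_b):
    """(Q, dS) for a reversible constant-volume leg, where dQ = n C_V dT."""
    q = n * CV * (t_b - t_a)
    ds = midpoint_sum(lambda temp: n * CV / temp, t_a, t_b)
    return q, ds


def path_a(n, t1, v1, t2, v2):
    """Expand at t1 first, then heat at constant volume v2."""
    q1, s1 = isothermal_leg(n, t1, v1, v2)
    q2, s2 = isochoric_leg(n, t1, t2)
    return q1 + q2, s1 + s2


def path_b(n, t1, v1, t2, v2):
    """Heat at constant volume v1 first, then expand at t2."""
    q1, s1 = isochoric_leg(n, t1, t2)
    q2, s2 = isothermal_leg(n, t2, v1, v2)
    return q1 + q2, s1 + s2


qa, sa = path_a(1.0, 300.0, 0.010, 400.0, 0.020)
qb, sb = path_b(1.0, 300.0, 0.010, 400.0, 0.020)
exact = CV * math.log(400.0 / 300.0) + R * math.log(0.020 / 0.010)
print(f"path A: Q = {qa:.1f} J, dS = {sa:.6f} J/K")
print(f"path B: Q = {qb:.1f} J, dS = {sb:.6f} J/K")
print(f"closed form dS = {exact:.6f} J/K")
```

Heat is not a state variable (the two Q values differ by about nR(T2 − T1) ln 2), while the entropy change is the same for both routes.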

In one statistical interpretation of entropy, it is found that for a very large system in thermodynamic equilibrium, entropy S is proportional to the natural logarithm of a quantity Ω representing the maximum number of microscopic ways in which the macroscopic state corresponding to S can be realized; that is, S = k ln Ω, where k is the Boltzmann constant (1.380649 × 10⁻²³ J/K), the factor that links molecular energy to temperature.
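The logarithm can be illustrated with a toy model that is an assumption, not part of the text: N independent two-state particles (like coins), where the macrostate "n_up particles up" can be realized in Ω = C(N, n_up) ways. This sketch computes S = k ln Ω through the log-gamma function, so large N does not require huge integers, and shows that the evenly split macrostate has the largest Ω. N = 100 is far from the very large systems the statistical interpretation assumes, so treat it only as a demonstration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact value in the 2019 SI)


def ln_multiplicity(n_total: int, n_up: int) -> float:
    """ln Ω with Ω = C(n_total, n_up), the number of microstates of the toy model."""
    return (math.lgamma(n_total + 1)
            - math.lgamma(n_up + 1)
            - math.lgamma(n_total - n_up + 1))


def boltzmann_entropy(n_total: int, n_up: int) -> float:
    """S = k ln Ω for the macrostate with n_up of n_total particles 'up'."""
    return K_B * ln_multiplicity(n_total, n_up)


N = 100
most_probable = max(range(N + 1), key=lambda m: ln_multiplicity(N, m))
print(most_probable)  # 50
print(boltzmann_entropy(N, 0), boltzmann_entropy(N, most_probable))
```

The all-down macrostate has Ω = 1 and therefore S = 0, while the even split, the most disordered macrostate, has the largest entropy.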

All spontaneous processes are irreversible; hence, it has been said that the entropy of the universe is increasing: that is, more and more energy becomes unavailable for conversion into work. Because of this, the universe is said to be “running down.”
