System of Linear Equations, Eigen Values & Eigen Vectors: Notes (Mathematics for Competitive Exams)

System of Linear Equations

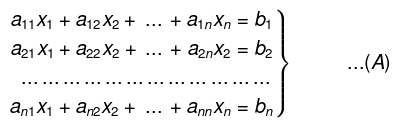

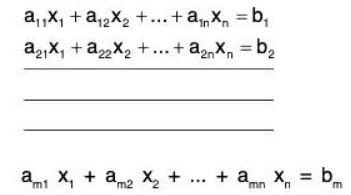

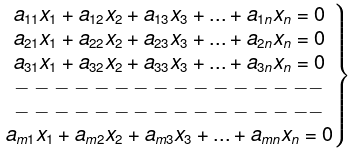

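Definition: A system of m linear equations in the n unknowns $x_1, x_2, \ldots, x_n$ is of the form

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1\\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2\\ &\;\;\vdots\\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m \end{aligned} \qquad (1)$$

If all the $b_i$ are zero, the system is called homogeneous; if at least one $b_i$ is non-zero, it is called non-homogeneous.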

Case I. Non-homogeneous linear equations in two unknowns:

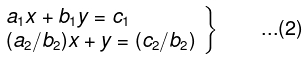

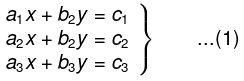

Consider the system of equations

$$a_1x + b_1y = c_1,\qquad a_2x + b_2y = c_2 \qquad (1)$$

The following three cases arise:

- All the a's, b's and c's are zero. Then every pair of numbers (x, y) is a solution of system (1), since each equation reduces to the identity 0 = 0. So in this case the equations are consistent and indeterminate.

- All of $a_i, b_i$ (i = 1, 2) are zero, but at least one of $c_1, c_2$ is non-zero. Then the system has no solution, i.e. system (1) is inconsistent.

- At least one of $a_i, b_i$ (i = 1, 2) is non-zero.

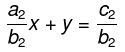

Let b2 ≠ 0. Then the system (1) is equivalent to

It is obvious that if pair (x0, y0) is a solution of the system (1). It is also a solution of system (2) and vice-versa.

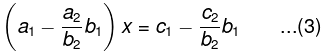

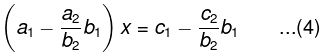

Multiplying the second equation of (2) by b, and subtracting it from the first equation of (2), we get

Now replacing the first equation of the system (2) by the equation (3), the system (2) is equivalent to the system of equations

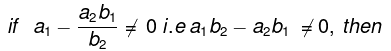

Now consider the following sub-cases:

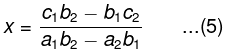

the first equation of the system (4) gives

Substituting this value of x into the second equation of system (4), we get

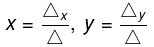

For convenience, we write

Any set of values of $x_1, x_2, \ldots, x_n$ (real numbers, or complex numbers if the coefficients are complex) that simultaneously satisfies each of these equations is called a solution of system (1).

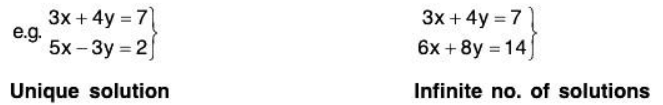

A system of equations is called consistent if it has at least one solution, and inconsistent if it has no solution.

If a system of equations has a unique solution, it is called determinate; it is called indeterminate if it has more than one solution.

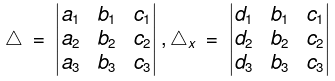

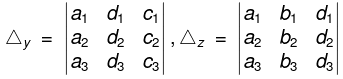

➤ Non-homogeneous Equations

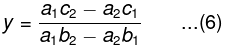

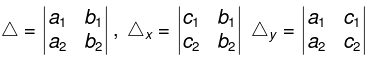

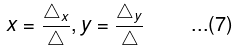

Here $\Delta_x$ and $\Delta_y$ are obtained by replacing the elements of the first and second columns of $\Delta$, respectively, by $c_1, c_2$. Thus (5) and (6) can be written as

$$x = \frac{\Delta_x}{\Delta},\qquad y = \frac{\Delta_y}{\Delta} \qquad (7)$$

This method of solving simultaneous equations is known as Cramer's rule. Thus, if $a_1b_2 - a_2b_1 \neq 0$, system (4), and hence system (1), has the unique solution given by (7), so the equations are consistent and determinate.

When $\Delta = a_1b_2 - a_2b_1 = 0$:

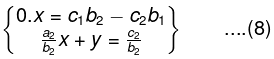

Then system (4) becomes

$$0\cdot x = c_1b_2 - c_2b_1,\qquad a_2x + b_2y = c_2 \qquad (8)$$

Obviously, system (8) has no solution if $c_1b_2 - c_2b_1 = \Delta_x \neq 0$; the equations are then inconsistent.

But if $c_1b_2 - c_2b_1 = \Delta_x = 0$, then every pair (x, y) with $x = k$ arbitrary and $y = \dfrac{c_2 - a_2k}{b_2}$ is a solution of system (8).

Hence, in this case, the equations are consistent and indeterminate. From the above discussion we conclude that:

- If $a_i = b_i = c_i = 0$ (i = 1, 2), the system is consistent and indeterminate.

- If all of $a_i, b_i$ (i = 1, 2) are zero but at least one $c_i$ is non-zero, the system is inconsistent.

- If $\Delta \neq 0$, the system is consistent and determinate, and its unique solution is given by $x = \Delta_x/\Delta$, $y = \Delta_y/\Delta$.

- If $\Delta = 0$ but at least one of $\Delta_x, \Delta_y$ is non-zero, the system is inconsistent.

- If $\Delta = 0$ and $\Delta_x = \Delta_y = 0$, but at least one of the a's and b's is non-zero, the system is consistent and indeterminate.
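The decision rules above translate directly into a short program. The following is a minimal sketch that assumes integer or `Fraction` coefficients so the zero tests are exact. The function name `classify_2x2` and its return labels are hypothetical and do not come from the notes.

```python
from fractions import Fraction


def classify_2x2(a1, b1, c1, a2, b2, c2):
    """Classify a1*x + b1*y = c1, a2*x + b2*y = c2 using Δ, Δx and Δy."""
    delta = a1 * b2 - a2 * b1      # Δ  = |a1 b1; a2 b2|
    delta_x = c1 * b2 - c2 * b1    # Δx = |c1 b1; c2 b2|
    delta_y = a1 * c2 - a2 * c1    # Δy = |a1 c1; a2 c2|
    if delta != 0:
        # Cramer's rule: consistent and determinate
        return "unique", (Fraction(delta_x, delta), Fraction(delta_y, delta))
    if delta_x != 0 or delta_y != 0:
        return "inconsistent", None
    if a1 == b1 == a2 == b2 == 0:
        # every left-hand side is identically 0, so we need c1 = c2 = 0
        return ("indeterminate", None) if c1 == c2 == 0 else ("inconsistent", None)
    # Δ = Δx = Δy = 0 with some a or b non-zero
    return "indeterminate", None


print(classify_2x2(1, 1, 3, 1, -1, 1))  # x + y = 3, x - y = 1
print(classify_2x2(1, 2, 4, 3, 6, 5))   # x + 2y = 4, 3x + 6y = 5
```

The all-zero check comes after the Δ tests because, when every a and b vanishes, Δ, Δx and Δy are all zero automatically. Only the c's can then decide the case.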

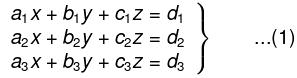

Case II. Non-homogeneous linear equations in three unknowns.

Consider the system of three non-homogeneous linear equations in three unknowns x, y, z

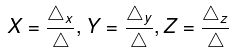

Introduce the following notations

For testing the consistency of the given system of equations (1), we give the following rule.

- If $a_i = b_i = c_i = d_i = 0$ (i = 1, 2, 3), system (1) has infinitely many solutions, so the equations are consistent and indeterminate.

- If $a_i = b_i = c_i = 0$ (i = 1, 2, 3) and at least one $d_i$ is non-zero, system (1) has no solution, i.e. it is inconsistent.

- If $\Delta \neq 0$, system (1) has the unique solution $x = \Delta_x/\Delta$, $y = \Delta_y/\Delta$, $z = \Delta_z/\Delta$. This method of solving the equations is known as Cramer's rule, and the equations in this case are consistent and determinate.

- If $\Delta = 0$ but at least one of $\Delta_x, \Delta_y, \Delta_z$ is non-zero, the system has no solution, i.e. it is inconsistent.

- If $\Delta = \Delta_x = \Delta_y = \Delta_z = 0$ and at least one cofactor of $\Delta$ is non-zero, the system has infinitely many solutions. In this case the three equations reduce to two independent equations. To obtain the solution set, give an arbitrary value to one of the unknowns and express the other two in terms of it. The equations are consistent and indeterminate.

- If all the cofactors of $\Delta, \Delta_x, \Delta_y, \Delta_z$ are zero but at least one coefficient $a_i, b_i, c_i$ is non-zero, the system reduces to a single equation. Its solution set is obtained by giving arbitrary values to two of the unknowns, so the equations are consistent and indeterminate.
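The Δ ≠ 0 rule (Cramer's rule for three unknowns) can be sketched as follows. This sketch handles only the determinate case and raises an error when Δ = 0, leaving the remaining rules to the rank test. The helpers `det3` and `cramer3` are hypothetical names.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def cramer3(coeffs, rhs):
    """Solve a_i x + b_i y + c_i z = d_i (i = 1, 2, 3) by Cramer's rule."""
    delta = det3(coeffs)
    if delta == 0:
        raise ValueError("Δ = 0: Cramer's rule does not apply")
    solution = []
    for col in range(3):
        # Δx, Δy, Δz: replace column `col` of Δ by the right-hand sides d_i
        replaced = [row[:col] + [d] + row[col + 1:] for row, d in zip(coeffs, rhs)]
        solution.append(Fraction(det3(replaced), delta))
    return tuple(solution)


# x + y + z = 3, x + 2y + 3z = 4, x + 4y + 9z = 6
print(cramer3([[1, 1, 1], [1, 2, 3], [1, 4, 9]], [3, 4, 6]))
```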

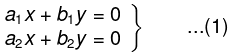

➤ Solution of simultaneous Homogeneous linear equations

Two unknowns: Consider the system of linear equations

$$a_1x + b_1y = 0,\qquad a_2x + b_2y = 0 \qquad (1)$$

System (1) always has the trivial solution x = 0, y = 0, so it is always consistent.

System (1) has a non-trivial solution if and only if $\Delta = a_1b_2 - a_2b_1 = 0$, and in that case it has infinitely many solutions. If $\Delta \neq 0$, system (1) has the unique solution x = 0, y = 0. Similarly, consider a system of three homogeneous linear equations in three unknowns x, y, z. If $\Delta \neq 0$, it has the unique solution x = 0, y = 0, z = 0.

If $\Delta = 0$, the system has infinitely many solutions.

Thus such a system of equations is always consistent.

Three equations in two unknowns consider the system of equations

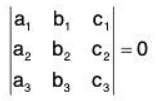

The system (1) will be consistent if the values of x, y obtained from any two equations also satisfy the third equation i.e. if
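A small check compares the determinant test with the "solve two, substitute into the third" argument. Like that argument, it assumes the first two equations are independent ($a_1b_2 - a_2b_1 \neq 0$). The function names are hypothetical.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def consistent_by_determinant(eqs):
    """eqs = [(a1, b1, c1), (a2, b2, c2), (a3, b3, c3)] for a_i x + b_i y = c_i."""
    return det3([list(e) for e in eqs]) == 0


def consistent_by_substitution(eqs):
    """Solve the first two equations by Cramer's rule and test the third."""
    (a1, b1, c1), (a2, b2, c2), (a3, b3, c3) = eqs
    d = a1 * b2 - a2 * b1
    if d == 0:
        raise ValueError("first two equations are not independent")
    x = Fraction(c1 * b2 - c2 * b1, d)
    y = Fraction(a1 * c2 - a2 * c1, d)
    return a3 * x + b3 * y == c3


eqs = [(1, 1, 3), (1, -1, 1), (2, 1, 5)]   # x + y = 3, x - y = 1, 2x + y = 5
print(consistent_by_determinant(eqs), consistent_by_substitution(eqs))
```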

➤ Solution of Simultaneous Linear Non-Homogeneous Equations

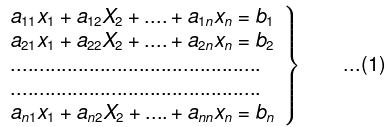

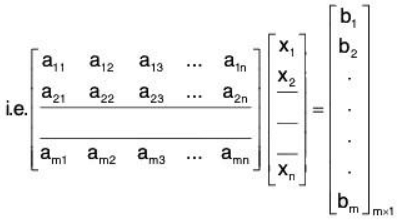

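Let us consider the system of n simultaneous equations in n unknowns $x_1, x_2, \ldots, x_n$ (number of unknowns = number of equations):

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1\\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2\\ &\;\;\vdots\\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n \end{aligned} \qquad (1)$$

In compact form, the above system can be written as

$$\sum_{j=1}^{n} a_{ij}x_j = b_i,\qquad i = 1, 2, \ldots, n.$$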

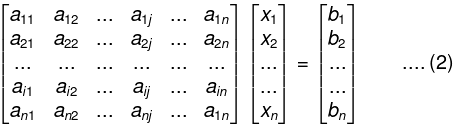

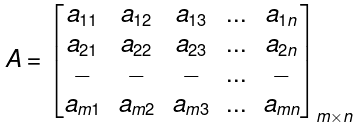

In matrix form, equation (1) can be written as

$$AX = B,$$

where $A = [a_{ij}]_{n\times n}$ is known as the coefficient matrix,

$X = [x_1, x_2, \ldots, x_n]^T$ is the column of unknowns,

and $B = [b_1, b_2, \ldots, b_n]^T$.

1st Method. When A is a non-singular matrix, $A^{-1}$ exists and $A^{-1}A = I$, where I is the identity matrix. From $AX = B$ we have

$$A^{-1}AX = A^{-1}B \;\Rightarrow\; IX = A^{-1}B \;\Rightarrow\; X = A^{-1}B.$$

From this one obtains $x_1, x_2, \ldots, x_n$, and the solution is unique.

When a system of equations has one or more solutions, the equations are said to be consistent; otherwise they are said to be inconsistent.

2nd Method: To solve $AX = B$, we perform elementary row operations on both A and B so that A is reduced to triangular form. If A is reduced to $A_1$ and B to $B_1$, then $AX = B$ becomes $A_1X = B_1$. This triangular system is solved by back-substitution to give $x_1, x_2, \ldots, x_n$.
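The 2nd method is Gaussian elimination followed by back-substitution. The sketch below assumes A is square and non-singular. It swaps in a row with a non-zero pivot when needed and uses `Fraction` so the arithmetic stays exact. The name `solve_by_elimination` is hypothetical.

```python
from fractions import Fraction


def solve_by_elimination(A, B):
    """Reduce [A | B] to upper-triangular form by row operations, then back-substitute."""
    n = len(A)
    # augmented copy with exact rational entries
    M = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(A, B)]
    for k in range(n):
        # bring a row with a non-zero entry in column k into pivot position
        pivot = next((r for r in range(k, n) if M[r][k] != 0), None)
        if pivot is None:
            raise ValueError("A is singular")
        M[k], M[pivot] = M[pivot], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]          # R_r -> R_r - factor * R_k
            M[r] = [vr - factor * vk for vr, vk in zip(M[r], M[k])]
    # back-substitution on the triangular system A1 X = B1
    X = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * X[j] for j in range(i + 1, n))
        X[i] = (M[i][n] - s) / M[i][i]
    return X


print(solve_by_elimination([[1, 1, 1], [1, 2, 3], [1, 4, 9]], [3, 4, 6]))
```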

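Example. Solve by the matrix method the system x + y + z = 3, x + 2y + 3z = 4, x + 4y + 9z = 6.

The given system in matrix form is

$$\begin{bmatrix}1&1&1\\1&2&3\\1&4&9\end{bmatrix}\begin{bmatrix}x\\y\\z\end{bmatrix} = \begin{bmatrix}3\\4\\6\end{bmatrix}$$

⇒ AX = B

⇒ $X = A^{-1}B$

To find $A^{-1}$, we compute Adj A and |A| as follows:

$$\operatorname{Adj} A = \begin{bmatrix}6&-5&1\\-6&8&-2\\2&-3&1\end{bmatrix}$$

Also

$$|A| = 1(18 - 12) - 1(9 - 3) + 1(4 - 2) = 2.$$

Hence

$$X = A^{-1}B = \frac{1}{2}\begin{bmatrix}6&-5&1\\-6&8&-2\\2&-3&1\end{bmatrix}\begin{bmatrix}3\\4\\6\end{bmatrix} = \frac{1}{2}\begin{bmatrix}4\\2\\0\end{bmatrix},$$

i.e. x = 2, y = 1, z = 0.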
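The example above can be verified with the adjugate formula $A^{-1} = \operatorname{Adj}A / |A|$. This is a small sketch using cofactor expansion, which is fine for 3 × 3 matrices but too slow for large ones. The helper names are hypothetical.

```python
from fractions import Fraction


def minor(m, i, j):
    """Matrix m with row i and column j deleted."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def adjugate(m):
    """Adj A: the transpose of the cofactor matrix C_ij = (-1)^(i+j) |M_ij|."""
    n = len(m)
    return [[(-1) ** (i + j) * det(minor(m, i, j)) for i in range(n)] for j in range(n)]


def solve_by_inverse(A, B):
    """X = A^{-1} B with A^{-1} = Adj A / |A| (requires |A| != 0)."""
    d = det(A)
    if d == 0:
        raise ValueError("A is singular")
    adj = adjugate(A)
    return [sum(Fraction(adj[i][k], d) * B[k] for k in range(len(B))) for i in range(len(B))]


A = [[1, 1, 1], [1, 2, 3], [1, 4, 9]]
B = [3, 4, 6]
print(adjugate(A), det(A), solve_by_inverse(A, B))
```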

Consistent and Inconsistent Non-Homogeneous Linear Equations

Consistent: A system of equations is said to be consistent if it has one or more solutions.

Inconsistent: When a system of equations has no solution at all, it is said to be inconsistent, e.g. x + 2y = 4, 3x + 6y = 5.

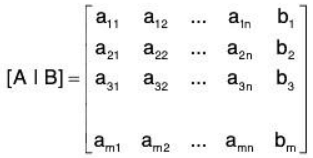

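Consistency of a set of m equations (non-homogeneous) in n unknowns

Consider the system $\sum_{j=1}^{n} a_{ij}x_j = b_i$ (i = 1, 2, ..., m), i.e.

$$AX = B \qquad (1)$$

Then we form the augmented matrix

$$[A \mid B] = \begin{bmatrix} a_{11} & \cdots & a_{1n} & b_1\\ \vdots & & \vdots & \vdots\\ a_{m1} & \cdots & a_{mn} & b_m\end{bmatrix}.$$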

For the solution of AX = B, proceed as follows.

Here we have m non-homogeneous equations in n unknowns, so A is of order m × n. Write the augmented matrix [A | B] and reduce it to echelon form by applying elementary row operations only. This gives the rank of [A | B]. Deleting the last column of this echelon form gives an echelon form of the coefficient matrix A, from which the rank of A can be read off.

Now there are two possibilities.

- Rank of A ≠ rank of [A | B], i.e. ρ(A) ≠ ρ([A | B]). In this case the equations are inconsistent, i.e. they have no solution.

- Rank of A = rank of [A | B] = r (say), i.e. ρ(A) = ρ([A | B]). In this case the equations are consistent, i.e. they have a solution.

From the echelon form of [A | B], we write down the equivalent system of equations. If r < m, then in reducing [A | B] to echelon form, m − r rows become zero rows. So m − r equations are eliminated, and we obtain an equivalent system of only r equations. From these r equations we can express r of the unknowns in terms of the remaining n − r unknowns, which may be given arbitrarily chosen values. The following cases arise.

I. If r = n, then n − r = 0. In this case the equations have a unique solution, since no variable is given an arbitrary value.

II. If r < n, then n − r > 0. In this case arbitrary values are given to n − r variables, so there are infinitely many solutions.

III. If m < n, then r ≤ m < n, so n − r > 0. Again arbitrary values are given to n − r variables, so there are infinitely many solutions.
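The rank test above can be sketched in a few lines. The sketch computes ρ(A) and ρ([A | B]) by row reduction in exact arithmetic and then applies cases I to III. The names `rank` and `check_consistency` are hypothetical, and the returned labels are illustrative only.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix via row reduction to echelon form (exact arithmetic)."""
    M = [[Fraction(v) for v in row] for row in rows]
    r = 0
    for c in range(len(M[0]) if M else 0):
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue                      # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r


def check_consistency(A, B):
    """Compare ρ(A) with ρ([A | B]) and classify the system AX = B."""
    n = len(A[0])                                      # number of unknowns
    augmented = [list(row) + [b] for row, b in zip(A, B)]
    r_a, r_ab = rank(A), rank(augmented)
    if r_a != r_ab:
        return "inconsistent", r_a, r_ab
    if r_a == n:
        return "unique solution", r_a, r_ab            # case I
    return "infinitely many solutions", r_a, r_ab      # cases II and III


# x + y + z = -3, 3x + y - 2z = -2, 2x + 4y + 7z = 7
print(check_consistency([[1, 1, 1], [3, 1, -2], [2, 4, 7]], [-3, -2, 7]))
```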

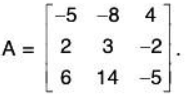

Example. Test for consistency and hence solve x + y + z = -3, 3x + y - 2z = -2, 2x + 4y + 7z = 7.

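We have

$$A = \begin{bmatrix}1&1&1\\3&1&-2\\2&4&7\end{bmatrix},\qquad B = \begin{bmatrix}-3\\-2\\7\end{bmatrix},\qquad [A \mid B] = \begin{bmatrix}1&1&1&-3\\3&1&-2&-2\\2&4&7&7\end{bmatrix}.$$

Applying $R_2 \to R_2 - 3R_1$ and $R_3 \to R_3 - 2R_1$, and then $R_3 \to R_3 + R_2$:

$$[A \mid B] \sim \begin{bmatrix}1&1&1&-3\\0&-2&-5&7\\0&2&5&13\end{bmatrix} \sim \begin{bmatrix}1&1&1&-3\\0&-2&-5&7\\0&0&0&20\end{bmatrix}.$$

This echelon form has three non-zero rows, so ρ([A | B]) = 3.

Deleting the last column gives the echelon form of A,

$$A \sim \begin{bmatrix}1&1&1\\0&-2&-5\\0&0&0\end{bmatrix},$$

which has two non-zero rows, so ρ(A) = 2.

Since ρ(A) ≠ ρ([A | B]), the given system of equations is inconsistent.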

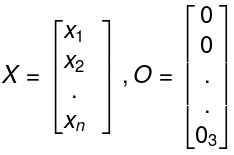

Consistency of a set of homogeneous equations in n unknowns

Consider the following set of equations:

$$a_{i1}x_1 + a_{i2}x_2 + \cdots + a_{in}x_n = 0,\qquad i = 1, 2, \ldots, m,$$

where the number of equations (m here) may differ from the number of unknowns. In matrix form the system is

$$AX = O \qquad (1)$$

where $A = [a_{ij}]_{m\times n}$, $X = [x_1, \ldots, x_n]^T$ and O is the m × 1 zero matrix.

Here the augmented matrix [A | O] is just A with a zero column appended, so ρ(A) = ρ([A | O]). Hence a system of homogeneous equations is always consistent: $x_1 = x_2 = \cdots = x_n = 0$ is always a solution, called the trivial or zero solution. We are interested in non-trivial solutions.

Case (i): Let ρ(A) = r = n (≤ m).

In this case the number of variables to be assigned arbitrary values is n − r = 0. Hence there is a unique solution X = O, i.e. $x_1 = x_2 = \cdots = x_n = 0$.

Case (ii): Let ρ(A) = r < n (≤ m).

Here n − r variables can be selected and assigned arbitrary values, and hence there are infinitely many solutions.

When m < n, ρ(A) = r ≤ m < n, so n − r variables can be selected and assigned arbitrary values. Hence, when the number of equations is less than the number of unknowns, the equations always have infinitely many solutions.

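Example. Solve the system 2x − y + 3z = 0, 3x + 2y + z = 0 and x − 4y + 5z = 0.

Here

$$A = \begin{bmatrix}2&-1&3\\3&2&1\\1&-4&5\end{bmatrix} \sim \begin{bmatrix}1&-4&5\\0&1&-1\\0&0&0\end{bmatrix}$$

(using $R_1 \leftrightarrow R_3$, $R_2 \to R_2 - 3R_1$, $R_3 \to R_3 - 2R_1$, $R_2 \to R_2/14$, $R_3 \to R_3 - 7R_2$)

⇒ ρ(A) = 2 < 3

(i.e. the rank is less than the number of variables).

Now the system reduces to

x − 4y + 5z = 0, y − z = 0

⇒ x = −z, y = z. Choosing z = k, we get x = −k, y = k, z = k.

This gives the general solution of the given system, where k is an arbitrary constant.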
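The general solution of a homogeneous system can also be read off from the reduced row echelon form: each free variable is set to 1 in turn, and the pivot variables are solved for. The following is a minimal sketch with a hypothetical function name. For the example above it returns the single basis vector (−1, 1, 1), matching x = −k, y = k, z = k.

```python
from fractions import Fraction


def null_space_basis(rows):
    """Basis vectors of {X : AX = O}, read off from the reduced row echelon form of A."""
    M = [[Fraction(v) for v in row] for row in rows]
    m, n = len(M), len(M[0])
    pivot_cols = []
    r = 0
    for c in range(n):
        p = next((i for i in range(r, m) if M[i][c] != 0), None)
        if p is None:
            continue                       # column c belongs to a free variable
        M[r], M[p] = M[p], M[r]
        piv = M[r][c]
        M[r] = [v / piv for v in M[r]]     # make the pivot 1
        for i in range(m):
            if i != r and M[i][c] != 0:    # clear column c in every other row
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivot_cols.append(c)
        r += 1
        if r == m:
            break
    basis = []
    for free in (c for c in range(n) if c not in pivot_cols):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)              # this free variable = 1, the other free ones = 0
        for row, pc in enumerate(pivot_cols):
            v[pc] = -M[row][free]          # solve each pivot equation for its variable
        basis.append(v)
    return basis


print(null_space_basis([[2, -1, 3], [3, 2, 1], [1, -4, 5]]))
```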

Eigen Values & Eigen Vectors

Definitions

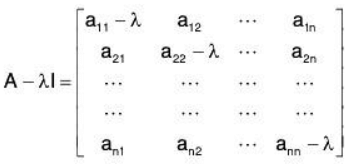

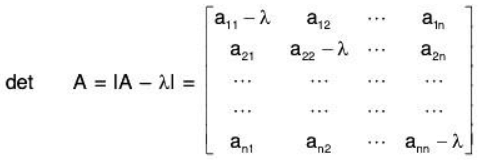

Let $A = [a_{ij}]_{n\times n}$ be a square matrix of order n, I the unit matrix of order n and λ an indeterminate. Then the matrix $A - \lambda I$

is called the characteristic matrix of A, and the determinant $|A - \lambda I|$

is called the characteristic polynomial of A.

The equation $|A - \lambda I| = 0$ is called the characteristic equation of A. Its roots are called the characteristic roots, characteristic values, eigen roots, eigenvalues or latent roots of the matrix.

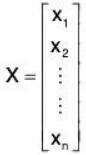

The set of the eigenvalues of a matrix A is called the spectrum of A. If λ is a characteristic root of an n × n matrix A, then a non-zero solution $X = [x_1, \ldots, x_n]^T$ of the equation AX = λX, i.e. (A − λI)X = 0, is called a characteristic vector or eigenvector of A corresponding to the characteristic root λ.

Chief Characteristics of Eigen Values

The sum of the elements of the principal diagonal of a matrix is called the trace of the matrix.

- Any matrix A and its transpose both have the same Eigen values.

- The trace of the matrix equals to the sum of the Eigen values of a matrix.

- The determinant of the matrix A equals to the product of the Eigen values of A.

- If λ1, λ2, .... , λn are the n-Eigen values of A, then

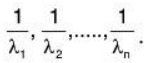

- Eigen value of kA are kλ1, kλ2, .... , kλn Eigen values of A-1 are

- Eigen values of Am are λ1m, λ2m........ λnm.

- If λ is an Eigen value of a matrix A, then 1/λ, is the Eigen value of A-1.

- Eigen value of kA are kλ1, kλ2, .... , kλn Eigen values of A-1 are

Diagonalisation of a matrix

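Definition: A square matrix A is said to be diagonalisable if it can be written as $A = PDP^{-1}$, where P is invertible and D is a diagonal matrix.

In that case $f(A) = f(PDP^{-1}) = P\,f(D)\,P^{-1}$ for any polynomial f. This is useful because f(D) is simply the diagonal matrix with entries $f(d_{ii})$, which is easy to calculate.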
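The identity $f(A) = P\,f(D)\,P^{-1}$ can be checked on a small case. The sketch below uses a hypothetical 2 × 2 matrix built as $A = PDP^{-1}$ with D = diag(1, 6) and the polynomial f(x) = x² − 3x + 2. It evaluates f(A) directly with Horner's scheme and compares the result with $P\,f(D)\,P^{-1}$. All names are illustrative.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def poly_scalar(coeffs, x):
    """Horner evaluation of c0*x^d + c1*x^(d-1) + ... + cd."""
    result = 0
    for c in coeffs:
        result = result * x + c
    return result


def poly_matrix(coeffs, A):
    """Horner evaluation of c0*A^d + ... + cd*I for a square matrix A."""
    n = len(A)
    result = [[Fraction(0)] * n for _ in range(n)]
    for c in coeffs:
        result = matmul(result, A)      # result <- result * A + c * I
        for i in range(n):
            result[i][i] += c
    return result


# Hypothetical example: A = P D P^{-1} with D = diag(1, 6)
P = [[Fraction(1), Fraction(4)], [Fraction(-1), Fraction(1)]]
P_inv = [[Fraction(1, 5), Fraction(-4, 5)], [Fraction(1, 5), Fraction(1, 5)]]
eigenvalues = [1, 6]
A = matmul(matmul(P, [[1, 0], [0, 6]]), P_inv)   # equals [[5, 4], [1, 2]]

f = [1, -3, 2]                                   # f(x) = x^2 - 3x + 2
fD = [[poly_scalar(f, eigenvalues[i]) if i == j else 0 for j in range(2)] for i in range(2)]
via_diagonal = matmul(matmul(P, fD), P_inv)
direct = poly_matrix(f, A)
print(via_diagonal == direct)
```

For a high power such as $A^{100}$, the diagonal route needs only $d_{ii}^{100}$ instead of repeated matrix products.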

Some important Theorems on Eigen values and eigen vectors

Theorem: λ is a characteristic root of a matrix A if and only if there exists a non-zero vector X such that AX = λX.

Theorem: The matrix A and its transpose have the same characteristic roots.

Recall that the eigenvalues of a matrix are the roots of its characteristic polynomial.

Hence if the matrices A and $A^T$ have the same characteristic polynomial, then they have the same eigenvalues.

So we show that the characteristic polynomial $p_A(t) = \det(A - tI)$ of A is the same as the characteristic polynomial $p_{A^T}(t) = \det(A^T - tI)$ of the transpose $A^T$.

We have

$$\begin{aligned} p_{A^T}(t) &= \det(A^T - tI)\\ &= \det(A^T - tI^T) && \text{since } I^T = I\\ &= \det\big((A - tI)^T\big)\\ &= \det(A - tI) && \text{since } \det(B^T) = \det(B) \text{ for any square matrix } B\\ &= p_A(t). \end{aligned}$$

Therefore we obtain $p_{A^T}(t) = p_A(t)$, and we conclude that the eigenvalues of A and $A^T$ are the same.

Theorem: If A and P are square matrices of the same order and P is invertible, then the matrices A and $P^{-1}AP$ have the same characteristic roots.

Theorem: If A and B are n-rowed square matrices and A is invertible, then the matrices $A^{-1}B$ and $BA^{-1}$ have the same characteristic roots.

Theorem: 0 is a characteristic root of a matrix if and only if the matrix is singular.

Theorem: If A and B are two invertible square matrices of the same order, then AB and BA have the same characteristic roots.

Theorem: The characteristic roots of a triangular matrix are just the diagonal elements of the matrix.

Theorem: All the characteristic roots of a Hermitian matrix are real.

Cor.: The characteristic roots of a real symmetric matrix are all real.

Cor.: Each characteristic root of a skew-Hermitian matrix is either zero or a purely imaginary number.

Theorem: Any two characteristic vectors corresponding to two distinct characteristic roots of a (i) Hermitian, (ii) real symmetric, or (iii) unitary matrix are orthogonal.

Theorem: A characteristic vector X corresponding to a characteristic root λ of a matrix A is also a characteristic vector of every matrix f(A), where f(x) is any scalar polynomial, and the corresponding root of f(A) is f(λ). More generally, if $g(x) = f_1(x)/f_2(x)$ and $|f_2(A)| \neq 0$, then g(λ) is a characteristic root of $g(A) = f_1(A)\{f_2(A)\}^{-1}$.

Theorem: The modulus of each characteristic root of a unitary matrix is unity.

Theorem: Any system of characteristic vectors X1, X2, .... Xk respectively corresponding to a system of distinct characteristic roots λ1,λ2, .... λk of any square matrix A are linearly independent.

Theorem: A matrix is nilpotent iff 0 is the only eigenvalue.

(⇒)

Suppose the matrix A is nilpotent, i.e. there exists k ∈ ℕ such that $A^k = O$. Let λ be an eigenvalue of A and let x be an eigenvector corresponding to λ.

Then they satisfy $Ax = \lambda x$. Multiplying this equality by A on the left, we have

$$A^2x = \lambda Ax = \lambda^2x.$$

Repeatedly multiplying by A, we obtain $A^kx = \lambda^kx$ (this can be proved by mathematical induction).

Now since $A^k = O$, we get $\lambda^kx = \mathbf{0}_n$, the n-dimensional zero vector.

Since x is an eigenvector, it is non-zero by definition, so $\lambda^k = 0$ and hence λ = 0.

(⇐)

Now we assume that all the eigenvalues of the matrix A are zero.

We prove that A is nilpotent.

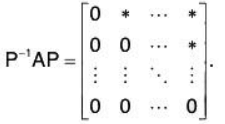

There exists an invertible n * n matrix P such that P-1 AP is an upper triangular matrix whose diagonal entries are eigenvalues of A.

(This is always possible. Study a triangularizable matrix or Jordan normal/canonical form.)

Hence we have

Then we have (P-1 AP)n = O. This implies that P-1 An P = O and thus An = POP-1 = O.

Therefore the matrix A is nilpotent.
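The (⇐) direction can be seen concretely. In the example below, a hypothetical strictly upper triangular N (all eigenvalues 0) satisfies $N^3 = O$. Conjugating it by a hypothetical invertible P gives a matrix $A = PNP^{-1}$ that is not triangular but still satisfies $A^3 = O$. The matrices are chosen for illustration only.

```python
def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def matpow(A, k):
    """A^k by repeated multiplication (A^0 = I)."""
    n = len(A)
    R = [[int(i == j) for j in range(n)] for i in range(n)]
    for _ in range(k):
        R = matmul(R, A)
    return R


N = [[0, 2, 5], [0, 0, 3], [0, 0, 0]]      # strictly upper triangular: eigenvalues all 0
P = [[1, 1, 0], [0, 1, 1], [0, 0, 1]]      # hypothetical invertible matrix
P_inv = [[1, -1, 1], [0, 1, -1], [0, 0, 1]]
A = matmul(matmul(P, N), P_inv)             # A = P N P^{-1}, so P^{-1} A P = N

zero = [[0] * 3 for _ in range(3)]
print(matpow(N, 3) == zero, matpow(A, 3) == zero, matpow(A, 2) == zero)
```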

Theorem: The characteristic polynomial $p(t) = \det(tI - A)$ of an n × n matrix A has the form

$$p(t) = t^n - (\operatorname{trace} A)\,t^{n-1} + (\text{intermediate terms}) + (-1)^n \det A.$$
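All the coefficients of $\det(tI - A)$ can be computed with the Faddeev-LeVerrier recursion, which is not described in these notes and is used here only as a checking tool. This is a minimal sketch in exact arithmetic with hypothetical helper names. For the 2 × 2 matrix [[5, 4], [1, 2]] it gives t² − 7t + 6. It also confirms that the $t^{n-1}$ coefficient is −trace A and that the constant term is $(-1)^n \det A$.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def charpoly(A):
    """Coefficients [1, c1, ..., cn] of det(tI - A) = t^n + c1 t^(n-1) + ... + cn
    via the Faddeev-LeVerrier recursion."""
    n = len(A)
    A = [[Fraction(v) for v in row] for row in A]
    coeffs = [Fraction(1)]
    M = [[Fraction(0)] * n for _ in range(n)]
    for k in range(1, n + 1):
        # M_k = A M_{k-1} + c_{k-1} I
        M = matmul(A, M)
        for i in range(n):
            M[i][i] += coeffs[-1]
        # c_k = -trace(A M_k) / k
        AM = matmul(A, M)
        coeffs.append(-sum(AM[i][i] for i in range(n)) / k)
    return coeffs


A = [[1, 1, 1], [1, 2, 3], [1, 4, 9]]
c = charpoly(A)
print(c, c[1] == -(1 + 2 + 9))   # coefficient of t^(n-1) is -trace A
```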

Theorem: Every complex square matrix A can be written as $PTP^{-1}$, where T is a triangular matrix.

Theorem: Let A be a unitary matrix. Then there is a unitary matrix P such that $P^*AP$ is diagonal. In particular, every unitary matrix is diagonalisable; the same conclusion (with P unitary) holds for Hermitian, and hence real symmetric, matrices.

Note: Proofs of the above theorems are not required; you can use them directly as results.

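Example. Determine the eigenvalues and eigenvectors of the matrix $A = \begin{bmatrix}5&4\\1&2\end{bmatrix}$.

$$|A - \lambda I| = \begin{vmatrix}5-\lambda & 4\\ 1 & 2-\lambda\end{vmatrix}$$

$$= (5 - \lambda)(2 - \lambda) - 4 = \lambda^2 - 7\lambda + 6$$

The characteristic equation is $|A - \lambda I| = \lambda^2 - 7\lambda + 6 = 0$

⇒ (λ − 1)(λ − 6) = 0

∴ λ = 1, 6. Hence the eigenvalues of A are 1 and 6.

Now an eigenvector of A corresponding to an eigenvalue λ is given by a non-zero solution of (A − λI)X = 0, i.e.

$$\begin{bmatrix}5-\lambda & 4\\ 1 & 2-\lambda\end{bmatrix}\begin{bmatrix}x_1\\x_2\end{bmatrix} = \begin{bmatrix}0\\0\end{bmatrix} \qquad (1)$$

When λ = 1, the corresponding eigenvector is given by

$$\begin{bmatrix}4&4\\1&1\end{bmatrix}\begin{bmatrix}x_1\\x_2\end{bmatrix} = \begin{bmatrix}0\\0\end{bmatrix},$$

or 4x1 + 4x2 = 0 and x1 + x2 = 0, from which we get x1 = −x2. So

$$X_1 = \begin{bmatrix}1\\-1\end{bmatrix}$$

may be taken as an eigenvector of A corresponding to the eigenvalue λ = 1.

When λ = 6, substituting in (1), the corresponding eigenvector is given by

$$\begin{bmatrix}-1&4\\1&-4\end{bmatrix}\begin{bmatrix}x_1\\x_2\end{bmatrix} = \begin{bmatrix}0\\0\end{bmatrix},$$

from which we get x1 = 4x2. Thus

$$X_2 = \begin{bmatrix}4\\1\end{bmatrix}$$

may be taken as an eigenvector of A corresponding to the eigenvalue λ = 6.

Hence the eigenvectors are $k_1X_1$ and $k_2X_2$, where $k_1, k_2$ are arbitrary non-zero scalars.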
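The steps of this example can be mirrored for any real 2 × 2 matrix with real eigenvalues. First solve λ² − (trace A)λ + det A = 0. Then take a non-zero row (p, q) of A − λI; the vector (q, −p) solves it. The sketch below uses the matrix A = [[5, 4], [1, 2]], which is consistent with the characteristic polynomial (5 − λ)(2 − λ) − 4 and with the equations 4x1 + 4x2 = 0 and −x1 + 4x2 = 0 worked above. The function names are hypothetical.

```python
import math


def eigenvalues_2x2(A):
    """Real eigenvalues of a 2x2 matrix from λ^2 - (trace A)λ + det A = 0."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = tr * tr - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues")
    r = math.sqrt(disc)
    return (tr - r) / 2, (tr + r) / 2


def eigenvector_2x2(A, lam):
    """A non-zero solution X of (A - λI)X = 0 for a 2x2 matrix A."""
    rows = [(A[0][0] - lam, A[0][1]), (A[1][0], A[1][1] - lam)]
    for p, q in rows:
        if p != 0 or q != 0:
            # p*x1 + q*x2 = 0 is solved by (x1, x2) = (q, -p); the other row is proportional
            return (q, -p)
    return (1, 0)   # A = λI: every non-zero vector is an eigenvector


A = [[5, 4], [1, 2]]
for lam in eigenvalues_2x2(A):
    print(lam, eigenvector_2x2(A, lam))
```

Any non-zero multiple of the returned vector is an equally valid eigenvector. For λ = 1 the sketch returns a multiple of (1, −1).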

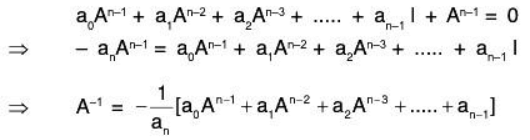

Cayley Hamilton Theorem

Every square matrix satisfies its own characteristic equation.

That is, for a square matrix A of order n, if

$$|A - \lambda I| = (-1)^n\left(\lambda^n + a_1\lambda^{n-1} + a_2\lambda^{n-2} + \cdots + a_n\right)$$

is the characteristic polynomial, then the matrix equation $X^n + a_1X^{n-1} + a_2X^{n-2} + \cdots + a_nI = O$ is satisfied by X = A, i.e. $A^n + a_1A^{n-1} + a_2A^{n-2} + \cdots + a_nI = O$.

Cor. Inverse of matrix by Cayley-Hamilton Theorem

IA - λII = a0λn + a1λn-1 + a2λn-2 + .... + an-1λ + an

is the characteristic polynomial of A, so we have

a0An-1 + a1An-1 + ... + an-1A + an I = 0 ... (1)

Now pre-multiplying (1) by A-1, we get

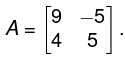

Example. Verify the Cayley-Hamilton theorem for the matrix A and hence find $A^{-1}$.

We have $\det(A - \lambda I) = |A - \lambda I|$.

Now the characteristic equation of A is given by $|A - \lambda I| = -\lambda^3 + 6\lambda^2 - 7\lambda - 2 = 0$

$$\Rightarrow \lambda^3 - 6\lambda^2 + 7\lambda + 2 = 0 \qquad (1)$$

By the Cayley-Hamilton theorem, the matrix A must satisfy (1), i.e. $A^3 - 6A^2 + 7A + 2I = O$.

Now

⇒ Cayley-Hamilton theorem is verified.

To find $A^{-1}$:

we have $2I = -A^3 + 6A^2 - 7A$.

Pre-multiplying both sides by $A^{-1}$, we get

$$2A^{-1} = -A^{-1}A^3 + 6A^{-1}A^2 - 7A^{-1}A$$

$$2A^{-1} = -A^2 + 6A - 7I \qquad (\because A^{-1}A = I,\ IA = A \text{ etc.})$$

$$\Rightarrow A^{-1} = \frac{1}{2}\left(-A^2 + 6A - 7I\right)$$
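The same recipe works for any non-singular 3 × 3 matrix. Write $\det(tI - A) = t^3 + c_1t^2 + c_2t + c_3$, where $c_1 = -\operatorname{trace}A$, $c_2$ is the sum of the principal 2 × 2 minors and $c_3 = -\det A$. Then the Cayley-Hamilton theorem gives $A^{-1} = -(A^2 + c_1A + c_2I)/c_3$. With c1 = −6, c2 = 7, c3 = 2 this reproduces the formula above. The matrix of this example is not reproduced in the notes, so the sketch below is tested on the matrix of the earlier matrix-method example. The function name is hypothetical.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def ch_inverse_3x3(A):
    """Inverse of a non-singular 3x3 matrix via A^3 + c1 A^2 + c2 A + c3 I = O."""
    A = [[Fraction(v) for v in row] for row in A]
    c1 = -(A[0][0] + A[1][1] + A[2][2])
    c2 = (A[0][0] * A[1][1] - A[0][1] * A[1][0]        # principal 2x2 minors
          + A[0][0] * A[2][2] - A[0][2] * A[2][0]
          + A[1][1] * A[2][2] - A[1][2] * A[2][1])
    det = (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
           - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
           + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))
    if det == 0:
        raise ValueError("matrix is singular")
    c3 = -det
    A2 = matmul(A, A)
    # A (A^2 + c1 A + c2 I) = -c3 I  =>  A^{-1} = -(A^2 + c1 A + c2 I) / c3
    return [[-(A2[i][j] + c1 * A[i][j] + c2 * (1 if i == j else 0)) / c3 for j in range(3)]
            for i in range(3)]


A = [[1, 1, 1], [1, 2, 3], [1, 4, 9]]
print(ch_inverse_3x3(A))
```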

Theorem: If A is an n * n matrix with

then

Theorem: If A is an n x n upper (or lower) triangular matrix, the eigenvalues are the entries on its main diagonal.

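Definition: Let A be an n × n matrix and let

$$\det(\lambda I_n - A) = (\lambda - r_1)^{n_1}(\lambda - r_2)^{n_2}\cdots(\lambda - r_k)^{n_k},$$

where $r_1, r_2, \ldots, r_k$ are the distinct eigenvalues of A.

(1) The numbers $n_1, n_2, \ldots, n_k$ are the algebraic multiplicities of the eigenvalues $r_1, r_2, \ldots, r_k$, respectively.

(2) The geometric multiplicity of the eigenvalue $r_j$ (j = 1, 2, ..., k) is the dimension of the null space of $A - r_jI_n$.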

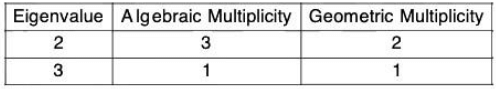

For example:

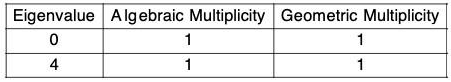

- The table below gives the algebraic and geometric multiplicity for each eigenvalue of the 2 x 2 matrix

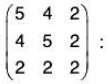

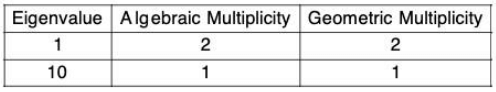

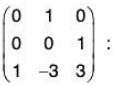

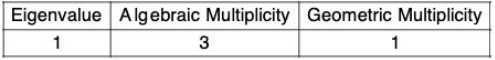

- The table below gives the algebraic and geometric multiplicity for each eigenvalue of the 3 x 3 matrix

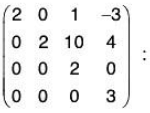

- The table below gives the algebraic and geometric multiplicity for each eigenvalue of the 4 x 4 matrix

The above examples suggest the following theorem:

Theorem: Let A be an n x n matrix with eigenvalue λ. Then the geometric multiplicity of λ is less than or equal to the algebraic multiplicity of λ.
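For a triangular matrix, both multiplicities can be computed directly. The algebraic multiplicity is the number of times λ appears on the diagonal, because the eigenvalues of a triangular matrix are its diagonal entries. The geometric multiplicity is n − rank(A − λI), the dimension of the null space. The 3 × 3 matrix below is a hypothetical example in which λ = 2 has algebraic multiplicity 2 but geometric multiplicity 1, so the inequality of the theorem is strict.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix via row reduction to echelon form (exact arithmetic)."""
    M = [[Fraction(v) for v in row] for row in rows]
    r = 0
    for c in range(len(M[0]) if M else 0):
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r


def multiplicities_triangular(A):
    """{eigenvalue: (algebraic, geometric)} for a triangular matrix A."""
    n = len(A)
    diag = [A[i][i] for i in range(n)]
    result = {}
    for lam in sorted(set(diag)):
        shifted = [[A[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
        result[lam] = (diag.count(lam), n - rank(shifted))   # geometric = dim null(A - λI)
    return result


J = [[2, 1, 0], [0, 2, 0], [0, 0, 3]]   # hypothetical example
print(multiplicities_triangular(J))
```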

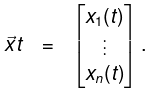

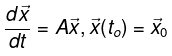

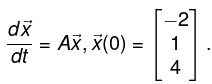

Objective: Solve

$$\mathbf{x}'(t) = A\,\mathbf{x}(t)$$

with an n × n constant coefficient matrix A.

Here, the unknown is the vector function $\mathbf{x}(t) = [x_1(t), x_2(t), \ldots, x_n(t)]^T$.

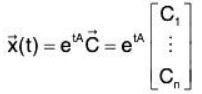

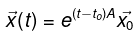

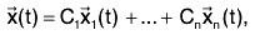

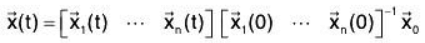

General Solution Formula in Matrix Exponential Form:

where C1,......Cn are arbitrary constants.

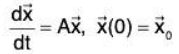

The solution of the initial value problem

is given by

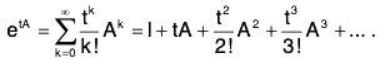

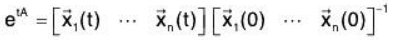

Definition (Matrix Exponential): For a square matrix A,

$$e^{tA} = \sum_{k=0}^{\infty}\frac{t^k}{k!}A^k = I + tA + \frac{t^2}{2!}A^2 + \cdots$$

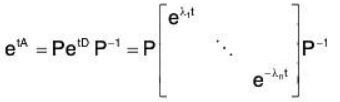

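Evaluation of the Matrix Exponential in the Diagonalizable Case: Suppose that A is diagonalizable; that is, there are an invertible matrix P and a diagonal matrix $D = \operatorname{diag}(\lambda_1, \ldots, \lambda_n)$ such that $A = PDP^{-1}$. In this case, we have

$$e^{tA} = Pe^{tD}P^{-1},\qquad e^{tD} = \operatorname{diag}\left(e^{\lambda_1t}, \ldots, e^{\lambda_nt}\right).$$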
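The formula $e^{tA} = Pe^{tD}P^{-1}$ can be checked against the defining series. The sketch below truncates the series after 60 terms, which is accurate for the small t·A used here but is not a robust general-purpose method. It uses the hypothetical diagonalizable matrix A = [[5, 4], [1, 2]] with P = [[1, 4], [−1, 1]] and D = diag(1, 6). The function names are illustrative, not a library API.

```python
import math


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def expm_series(A, t, terms=60):
    """Truncated series e^{tA} ≈ sum_{k=0}^{terms-1} (tA)^k / k!."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]   # (tA)^0 / 0! = I
    total = [row[:] for row in term]
    for k in range(1, terms):
        # (tA)^k / k! = [(tA)^(k-1) / (k-1)!] * A * (t / k)
        term = [[v * t / k for v in row] for row in matmul(term, A)]
        total = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(total, term)]
    return total


def expm_diagonalizable(P, eigenvalues, P_inv, t):
    """e^{tA} = P e^{tD} P^{-1} with e^{tD} = diag(e^{λ_i t})."""
    n = len(eigenvalues)
    etD = [[math.exp(eigenvalues[i] * t) if i == j else 0.0 for j in range(n)]
           for i in range(n)]
    return matmul(matmul(P, etD), P_inv)


A = [[5.0, 4.0], [1.0, 2.0]]          # hypothetical example, A = P D P^{-1}
P = [[1.0, 4.0], [-1.0, 1.0]]
P_inv = [[0.2, -0.8], [0.2, 0.2]]
t = 0.5
E1 = expm_series(A, t)
E2 = expm_diagonalizable(P, [1.0, 6.0], P_inv, t)
print(max(abs(a - b) for r1, r2 in zip(E1, E2) for a, b in zip(r1, r2)))
```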

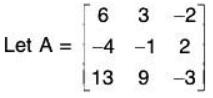

Example:

(a) Evaluate $e^{tA}$.

(b) Find the general solutions of $\mathbf{x}' = A\mathbf{x}$.

(c) Solve the initial value problem

The given matrix A is diagonalized: A = PDP-1 with

Part (a): We have

$e^{tA} = Pe^{tD}P^{-1}$

Part (b): The general solutions to the given system are

where C1, C2, C3 are free parameters.

Part (c): The solution to the initial value problem is

Evaluation of Matrix Exponential Using Fundamental Matrix: In the case A is not diagonalizable, one approach to obtain matrix exponential is to use Jordan forms. Here, we use another approach. We have already learned how to solve the initial value problem

We shall compare the solution formula with  to figure out what etA is. We know the general solutions of

to figure out what etA is. We know the general solutions of  are of the following structure:

are of the following structure:

where  are n linearly independent particular solutions. The formula can be rewritten as

are n linearly independent particular solutions. The formula can be rewritten as

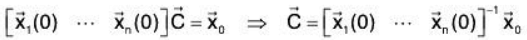

For the initial value problem  is determined by the initial condition

is determined by the initial condition

Thus, the solution of the initial value problem is given by

Comparing this with  , we obtain

, we obtain

In this method of evaluating etA, the matrix  plays an essential role. Indeed, etA = M(t) M(0)-1.

plays an essential role. Indeed, etA = M(t) M(0)-1.

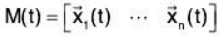

Definition (Fundamental Matrix Solution): If $\mathbf{x}^{(1)}(t), \ldots, \mathbf{x}^{(n)}(t)$ are n linearly independent solutions of the n-dimensional homogeneous linear system $\mathbf{x}' = A\mathbf{x}$, we call

$$M(t) = \big[\mathbf{x}^{(1)}(t)\ \cdots\ \mathbf{x}^{(n)}(t)\big]$$

a fundamental matrix solution of the system.

(Remark 1: The matrix function M(t) satisfies the equation $M'(t) = AM(t)$. Moreover, M(t) is an invertible matrix for every t. These two properties characterize fundamental matrix solutions.)

(Remark 2: Given a linear system, fundamental matrix solutions are not unique. However, whichever fundamental matrix solution M(t) we choose, computing $M(t)M(0)^{-1}$ always gives the same result.)
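Remark 2 can be checked numerically. The sketch below takes a hypothetical matrix A = [[7, −4], [4, 7]], whose eigenvalues are 7 ± 4i, and its real solutions u(t) = e^{7t}(cos 4t, sin 4t) and w(t) = e^{7t}(−sin 4t, cos 4t). It deliberately builds M(t) from the columns u and u + w, so that M(0) ≠ I. It then compares $M(t)M(0)^{-1}$ with a truncated series for $e^{tA}$. All names are illustrative.

```python
import math


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def expm_series(A, t, terms=60):
    """Truncated series e^{tA} ≈ sum_{k=0}^{terms-1} (tA)^k / k!."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    total = [row[:] for row in term]
    for k in range(1, terms):
        term = [[v * t / k for v in row] for row in matmul(term, A)]
        total = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(total, term)]
    return total


def inverse_2x2(M):
    """Inverse of an invertible 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def fundamental_matrix(t):
    """Columns u(t) and u(t) + w(t): two independent real solutions of x' = Ax."""
    e = math.exp(7 * t)
    u = (e * math.cos(4 * t), e * math.sin(4 * t))
    w = (-e * math.sin(4 * t), e * math.cos(4 * t))
    v = (u[0] + w[0], u[1] + w[1])
    return [[u[0], v[0]], [u[1], v[1]]]


A = [[7.0, -4.0], [4.0, 7.0]]
t = 0.3
E_fund = matmul(fundamental_matrix(t), inverse_2x2(fundamental_matrix(0.0)))
E_series = expm_series(A, t)
print(max(abs(a - b) for r1, r2 in zip(E_fund, E_series) for a, b in zip(r1, r2)))
```

Both routes give $e^{7t}\begin{bmatrix}\cos 4t & -\sin 4t\\ \sin 4t & \cos 4t\end{bmatrix}$ for this matrix, even though M(0) is not the identity.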

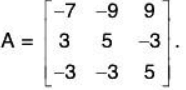

Example 1: Evaluate $e^{tA}$ for

We first solve

We obtain

This gives a fundamental matrix solution:

The matrix exponential is

Example 2: Evaluate $e^{tA}$ for

We first solve

We obtain

This gives a fundamental matrix solution:

The matrix exponential is

Example 3: Evaluate $e^{tA}$ for

- (Use Diagonalization)

Solving det(A - λI) = 0, we obtain the eigenvalues of A: λ1 = 7 + 4i, λ2 = 7 - 4i.

Eigenvectors for λ1 = 7 + 4i are obtained by solving

Eigenvectors for λ2 = 7 − 4i are the complex conjugates of the vector in (*). The matrix A is now diagonalized: $A = PDP^{-1}$ with

we have

$e^{tA} = Pe^{tD}P^{-1}$

- (Use fundamental solutions and complex exponential functions)

A fundamental matrix solution can be obtained from the eigenvalues and eigenvectors:

The matrix exponential is

- (Use fundamental solutions and avoid complex exponential functions)

A fundamental matrix solution can be obtained from the eigenvalues and eigenvectors:

The matrix exponential is
