All questions of Operating System for Computer Science Engineering (CSE) Exam

A demand-paging system uses a TLB (Translation Look-aside Buffer) and a single-level page table stored in main memory. The memory access time is 5 μs and the page fault service time is 25 ms. 70% of accesses hit in the TLB with no page fault; of the remaining accesses, 20% refer to pages not present in main memory. The effective memory access time in ms is _________. (Write the answer up to two decimal places.)

- a)1.49-1.51

- b)1.494-1.511

- c)1.497-1.516

- d)1.499-1.512

Correct answer is option 'A'. Can you explain this answer?

Machine Experts answered |

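EAT = ht * (tt + tm) + (1 – ht) * [p * (tt + (n + 1) * tm) + (1 – p) * td]

Here ht is the TLB hit ratio, tt the TLB access time (taken as 0), tm the memory access time, n the number of page table levels, p the fraction of TLB misses whose page is in main memory, and td the page fault service time.

= 0.7 * (0 + 5 μs) + 0.3 * [0.8 * (0 + 2 * 5 μs) + 0.2 * 25 ms]

= 3.5 μs + 0.3 * [8 μs + 5000 μs]

= 1505.9 μs ≈ 1.51 ms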
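The arithmetic above can be checked with a short sketch. The function and parameter names are illustrative, and it makes the same assumptions as the worked solution: the TLB lookup time is negligible, a TLB miss on a resident page costs two memory accesses, and a page fault costs only the service time.

```python
def effective_access_time_us(tlb_hit, mem_us, fault_given_miss, fault_service_us, tlb_us=0.0):
    """Effective access time in microseconds for a TLB plus a single-level page table."""
    hit_cost = tlb_us + mem_us               # TLB hit: one memory access
    resident_cost = tlb_us + 2 * mem_us      # TLB miss, page resident: page table + data
    # On a TLB miss the page is either resident or it faults (assumed cost: service time only).
    miss_cost = (1 - fault_given_miss) * resident_cost + fault_given_miss * fault_service_us
    return tlb_hit * hit_cost + (1 - tlb_hit) * miss_cost


eat_us = effective_access_time_us(0.7, 5.0, 0.2, 25_000.0)
print(f"{eat_us:.1f} us = {eat_us / 1000:.2f} ms")
```

Rounded to two decimal places the result falls inside the range given in option a.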

A system has 12 identical resources and Y processes competing for them. Each process can request at most 3 resources. Which one of the following values of Y could lead to a deadlock?

- a)5

- b)6

- c)7

- d)Both 6 and 7

Correct answer is option 'D'. Can you explain this answer?

Sanchita Pillai answered |

Understanding Deadlock in Resource Allocation

Deadlock occurs in a system when processes are unable to proceed because each is waiting for resources held by another. In this scenario, we analyze the potential for deadlock with 12 identical resources and Y processes, where each process can request a maximum of 3 resources.

Maximum Resources Requested

- Each process can request up to 3 resources.

- Therefore, for Y processes, the maximum resources that could be requested is 3Y.

Condition for Deadlock

- With identical resources, the worst case is that every process holds one resource fewer than its maximum and is waiting for one more. If no resource is free at that point, no process can finish.

- Deadlock is therefore possible when: Total resources <= (maximum resources requested by all processes) - (number of processes), that is, 12 <= 3Y - Y = 2Y.

Calculation for Different Values of Y

1. For Y = 5:

- Resources held in the worst case = 2 * 5 = 10

- 10 < 12, so 2 resources remain free and some process can reach its maximum and finish (not a deadlock)

2. For Y = 6:

- Resources held in the worst case = 2 * 6 = 12

- 12 >= 12, so all 12 resources can be held while every process still waits (deadlock possible)

3. For Y = 7:

- Resources held in the worst case = 2 * 7 = 14

- 14 >= 12, so all 12 resources can be held while every process still waits (deadlock possible)

Conclusion

- Only when Y is 6 or 7 can the system potentially enter a deadlock, because then all 12 resources can be allocated while every process is still waiting for at least one more.

- Thus, the correct answer is option 'D', as both 6 and 7 can lead to a deadlock situation.
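A minimal sketch of the standard test for identical resources follows. It relies on the textbook result that deadlock is possible exactly when every process can hold one unit fewer than its maximum (or as much of that as exists) with nothing left over. The function name is hypothetical.

```python
def deadlock_possible(total_resources, max_demands):
    """True if all resources can be handed out while every process still needs one more."""
    # Each process can hold at most (max - 1) units and still be waiting.
    worst_case_held = sum(d - 1 for d in max_demands)
    return worst_case_held >= total_resources


results = {y: deadlock_possible(12, [3] * y) for y in (5, 6, 7)}
for y, possible in results.items():
    print(f"Y = {y}: deadlock possible = {possible}")
```

With 12 resources and a maximum of 3 per process, the check reports no deadlock for Y = 5 and a possible deadlock for Y = 6 and Y = 7.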

Suppose there is a paging system with a translation lookaside buffer. Assuming that the entire page table and all the pages are in the physical memory, what is the effective memory access time in ms if it takes 5 msec to search the TLB and 70 msec to access physical memory? The TLB hit ratio is 0.8.

- a)89-89

- b)894-891

- c)897-896

- d)899-892

Correct answer is option 'A'. Can you explain this answer?

Crack Gate answered |

Data:

TLB hit ratio = p = 0.8

TLB access time = 5 milliseconds

Memory access time = m = 70 milliseconds

Formula:

EMAT = p × (t + m) + (1 – p) × (t + m + m)

Calculation:

EMAT = 0.8 × (5 + 70) + (1 – 0.8) × (5 + 70 + 70)

EMAT = 89 milliseconds.

Important points:

During TLB hit

The frame number is fetched from the TLB (5 ms)

and page is fetched from physical memory (70 ms)

During TLB miss

No TLB entry matches (5 ms)

The frame number is fetched from the physical memory (70 ms)

and pages are fetched from physical memory (70 ms)
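The hit and miss cases listed above translate directly into a small sketch. The function name is illustrative, and all times are in the milliseconds used by the question.

```python
def emat(hit_ratio, tlb_time, mem_time):
    """Effective memory access time with a TLB and a fully resident page table."""
    hit_cost = tlb_time + mem_time         # TLB lookup + one memory access
    miss_cost = tlb_time + 2 * mem_time    # TLB lookup + page table access + data access
    return hit_ratio * hit_cost + (1 - hit_ratio) * miss_cost


result_ms = emat(0.8, 5, 70)
print(f"{result_ms:.2f} ms")
```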

Which of the following is/are TRUE about user-level threads and kernel-level threads?

- a)Typically if a user-level thread is performing blocking system call then the entire process will be blocked

- b)Kernel level threads are designed as dependent threads

- c)Every thread has its own register and stack

- d)Communication between multiple threads is easier than between two process.

Correct answer is option 'A,C,D'. Can you explain this answer?

Machine Experts answered |

Option a: TRUE

If a user-level thread makes a blocking system call, the entire process is typically blocked, because the kernel sees only the process and not its individual threads.

Option b: FALSE

Kernel-level threads are independent of one another, which is also why they require more context switching than user-level threads.

Option c: TRUE

Every thread has its own registers and stack.

Option d: TRUE

Communication between threads is easier because the threads share a common address space, whereas two processes must use a specific inter-process communication technique.

Consider a hypothetical system with byte-addressable memory, 64-bit logical addresses, 8 Megabyte page size and page table entries of 16 bytes each. What is the size of the page table in the system in terabytes?

- a)32-32

- b)324-321

- c)327-326

- d)329-322

Correct answer is option 'A'. Can you explain this answer?

Sanchita Pillai answered |

Understanding the System Components

- Logical Address Space: The system has a 64-bit logical address space, which means it can address 2^64 bytes of memory.

- Page Size: Each page in this system is 8 Megabytes (MB). This is equivalent to 2^23 bytes (since 1 MB = 2^20 bytes).

- Page Table Entry Size: Each page table entry is 16 bytes.

Calculating the Number of Pages

- Total Memory: The total memory addressable by the system is 2^64 bytes.

- Number of Pages: To find the number of pages, divide the total addressable memory by the page size:

- Number of pages = Total addressable memory / Page size

- Number of pages = 2^64 bytes / 2^23 bytes = 2^(64-23) = 2^41 pages.

Calculating the Page Table Size

- Size of the Page Table: The size of the page table can be calculated by multiplying the number of pages by the size of each page table entry:

- Page table size = Number of pages * Size of each page table entry

- Page table size = 2^41 pages * 16 bytes = 2^41 * 2^4 = 2^45 bytes.

Converting to Terabytes

- Bytes to Terabytes Conversion:

- 1 Terabyte (TB) = 2^40 bytes.

- Therefore, Page table size in TB = Page table size in bytes / 2^40 bytes = 2^45 / 2^40 = 2^5 = 32 TB.

Conclusion

The size of the page table in this system is 32 Terabytes, confirming that option 'A' is indeed correct.
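The same steps can be written as a short sketch. The function name is illustrative, and it assumes a single-level page table and a power-of-two page size, as in the calculation above.

```python
def page_table_bytes(address_bits, page_size_bytes, pte_bytes):
    """Size of a single-level page table covering the whole logical address space."""
    offset_bits = page_size_bytes.bit_length() - 1   # log2 of a power-of-two page size
    num_pages = 1 << (address_bits - offset_bits)    # 2^(64 - 23) = 2^41 pages here
    return num_pages * pte_bytes


size = page_table_bytes(64, 8 * 2**20, 16)
print(size // 2**40, "TB")
```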

Consider a system has hit ratio as h%. Average access time to service a page fault is s milliseconds and average effective memory access time is e in milliseconds. What is the memory access time in microseconds (m is considered with service time)?

- a)100 × (10 × (s - e) + s × h)

- b)1000 × e - 1000 × s + 100 × s × h

- c)10 × (100 × (e - s) + s × h)

- d)100 × (10 × (e - s) + s × h)

Correct answer is option 'C'. Can you explain this answer?

Sanchita Pillai answered |

Understanding Memory Access Time

To find the memory access time (m) that includes the service time, we need to consider the hit ratio (h), average access time for a page fault (s), and effective memory access time (e).

Key Concepts

- Hit Ratio (h%): The percentage of time that a requested page is found in memory.

- Page Fault Service Time (s): The time taken to service a page fault when it occurs.

- Effective Memory Access Time (e): The average time taken to access memory, considering both hits and misses.

Effective Memory Access Time Calculation

The effective memory access time can be calculated as:

e = h * (time for hit) + (1 - h) * (s + time for hit)

Where:

- Time for hit = time taken to access memory without a page fault.

Treating h as the fraction h/100 and writing m for the time for a hit, the expression expands to e = m + (1 - h/100) * s, so m = e - s + (s * h)/100, with all times in milliseconds.

Memory Access Time (m)

Multiplying by 1000 converts m from milliseconds to microseconds:

m = 1000 * (e - s) + 10 * s * h = 10 * (100 * (e - s) + s * h)

In words, m is the effective access time minus the extra service time incurred on misses, (1 - h/100) * s.

Conclusion

Thus, the correct formula for memory access time, considering the service time, is:

Option C: 10 * (100 * (e - s) + s * h)

This equation effectively captures the relationship between effective memory access time, page fault servicing time, and hit ratio.
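As a sanity check, the sketch below plugs hypothetical numbers into the hit/miss formula for e given above and then recovers m with option C. The function names and sample values are assumptions made only for illustration.

```python
def memory_access_time_us(e_ms, s_ms, h_percent):
    """Option C: m in microseconds, from e and s in milliseconds and h in percent."""
    return 10 * (100 * (e_ms - s_ms) + s_ms * h_percent)


def effective_time_ms(m_us, s_ms, h_percent):
    """e = h * m + (1 - h) * (s + m), with m converted to ms and h to a fraction."""
    h = h_percent / 100
    m_ms = m_us / 1000
    return h * m_ms + (1 - h) * (s_ms + m_ms)


# Hypothetical values: m = 200 us, s = 8 ms, h = 99%.
e_ms = effective_time_ms(200.0, 8.0, 99.0)
recovered_us = memory_access_time_us(e_ms, 8.0, 99.0)
print(f"e = {e_ms:.3f} ms, recovered m = {recovered_us:.1f} us")
```

Recovering the 200 μs that went in shows that option C is the inverse of the formula for e under these assumptions.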

Consider a system having 20 resources of the same type. These resources are shared by 4 processes which have peak demands of 4, 5, x, y respectively. What is the maximum value of x + y to ensure that there will be no deadlock in the system?

- a)16

- b)20

- c)14

- d)12

Correct answer is option 'C'. Can you explain this answer?

Maulik Iyer answered |

Understanding the Deadlock Situation

To determine the maximum value of x + y that will ensure no deadlock occurs in a system with 20 resources shared among four processes, we need to analyze the peak demands of each process and apply the resource allocation principles.

Peak Demands of Processes

- Process 1: 4 resources

- Process 2: 5 resources

- Process 3: x resources

- Process 4: y resources

Total Resources Available

- The total number of resources available = 20

Safe State Condition

For a system with identical resources to avoid deadlock, the total number of resources must be at least the sum of the peak demands of all processes minus (the number of processes minus one). This is expressed as:

Total Resources >= Sum of (Peak Demands) - (Number of Processes - 1)

In our case:

Total Resources >= (4 + 5 + x + y) - (4 - 1)

This simplifies to:

20 >= 9 + x + y - 3

20 >= 6 + x + y

14 >= x + y

Conclusion

To ensure no deadlock occurs, the maximum value of x + y must be less than or equal to 14. Thus, the correct answer is option 'C', which is 14.

By keeping the sum of x and y within this limit, we can guarantee that the system remains in a safe state, thus avoiding deadlock.
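The inequality can be rearranged into a one-line bound on the sum of peak demands. The sketch below uses a hypothetical helper name and applies only to identical resources, as in this question.

```python
def max_safe_total_demand(total_resources, num_processes):
    """Largest sum of peak demands for which deadlock is impossible."""
    # From: total_resources >= sum(demands) - (num_processes - 1)
    return total_resources + num_processes - 1


limit = max_safe_total_demand(20, 4)   # bound on 4 + 5 + x + y
max_x_plus_y = limit - (4 + 5)
print(max_x_plus_y)
```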

Which of the following statements are true?

(a) External Fragmentation exists when there is enough total memory space to satisfy a request but the available space is contiguous.

(b) Memory Fragmentation can be internal as well as external.

(c) One solution to external Fragmentation is compaction.

- a)(a) and (b) only

- b)(a) and (c) only

- c)(b) and (c) only

- d)(a), (b) and (c)

Correct answer is option 'C'. Can you explain this answer?

Crack Gate answered |

(a) External Fragmentation exists when there is enough total memory space to satisfy a request but the available space is contiguous.

This statement is incorrect. As processes are loaded into and removed from memory, the free memory space is broken into small pieces. External fragmentation exists when enough total memory space exists to satisfy a request but it is not contiguous; storage is fragmented into a large number of small holes. External fragmentation is also known as checkerboarding. For example, suppose the free memory consists of separate blocks of 400 KB each. A request for 600 KB then cannot be allocated, even though the total free space may exceed 600 KB.

(b) Memory Fragmentation can be internal as well as external.

This statement is correct. An important issue in the contiguous multiple-partition allocation scheme is dealing with memory fragmentation. There are two types of fragmentation: internal and external. Internal fragmentation occurs with multiple fixed partitions, when the memory allocated to a process is not fully used by that process.

(c) One solution to external Fragmentation is compaction.

This statement is correct. To get rid of the external fragmentation problem, it is desirable to relocate some or all portions of memory so that all the free holes are placed together at one end of memory, forming one large hole. This technique of reorganizing storage is known as compaction.

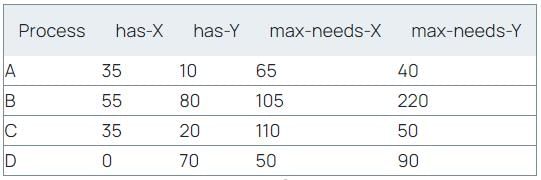

Consider the following scenario: A, B, C, D are processes and X, Y are resources used by the processes. If Resources available: [X: 40 Y: 40] then what will be a safe sequence so that the processes complete execution without deadlock?

- a)ABDC

- b)ACDB

- c)ADCB

- d)ACBD

Correct answer is option 'C'. Can you explain this answer?

Crack Gate answered |

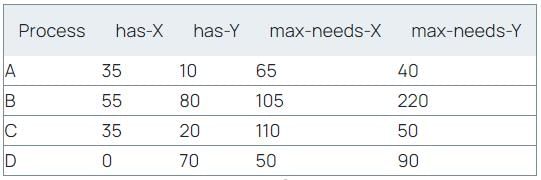

Data:

Remaining Need(X) = (max-needs-X) – (has-X)

Remaining Need(Y) = (max-needs-Y) – (has-Y)

Calculation:

Available resource of X = 40

Available resource of Y = 40

Sequence: ACDB

Need of A(30, 30) ≤ available of (40, 40)

A is executed and release the resource held by it

Available of X = 40 + 35 = 75

Available of Y = 40 + 10 = 55

Available of (X, Y) = (75, 55)

Need of C(75, 30) ≤ available of (75, 55)

Need of D(50, 20) ≤ available of (75, 55) as well, so either C or D can run next.

Taking C first (as an exercise, take D first and solve)

C is executed and release the resource held by it

Available of X = 75 + 35 = 110

Available of Y = 55 + 20 = 75

Available of (X, Y) = (110, 75)

Need of D(50, 20) ≤ available of (110, 75) // B won't be satisfied

D is executed and release the resource held by it

Available of X = 110 + 0 = 110

Available of Y = 75 + 70 = 145

Available of (X, Y) = (110, 145)

Need of B(50, 140) ≤ available of (110, 145)

B is executed and release the resource held by it

Available of X = 110 + 50 = 160

Available of Y = 145 + 140 = 285

Available of (X, Y) = (160, 285)

∴ safe sequence is ACDB

Similarly, safe sequence is ADCB
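The safety check for each option can be sketched as below. The original table is an image, so the need and holding values are transcribed from the worked steps above and should be treated as assumptions. A's holding of Y is taken as the printed 10; using 15, which the running total of 55 would imply, gives the same verdicts.

```python
# (X, Y) still needed and (X, Y) currently held, as read from the worked steps.
NEED = {"A": (30, 30), "B": (50, 140), "C": (75, 30), "D": (50, 20)}
HELD = {"A": (35, 10), "B": (50, 140), "C": (35, 20), "D": (0, 70)}


def is_safe_sequence(order, available):
    """Run the processes in the given order; fail if any need exceeds what is available."""
    avail = list(available)
    for p in order:
        if any(n > a for n, a in zip(NEED[p], avail)):
            return False
        # The process finishes and releases everything it held.
        avail = [a + h for a, h in zip(avail, HELD[p])]
    return True


verdicts = {seq: is_safe_sequence(seq, (40, 40)) for seq in ("ABDC", "ACDB", "ADCB", "ACBD")}
for seq, safe in verdicts.items():
    print(seq, "safe" if safe else "unsafe")
```

Under these values ACDB and ADCB pass, while ABDC and ACBD fail because B's need for Y cannot be met until both C and D have released their resources.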

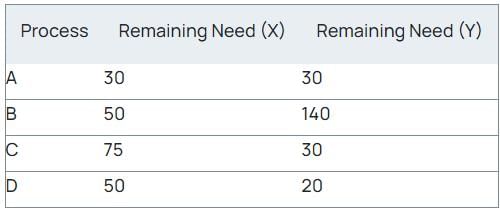

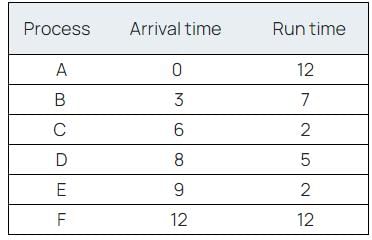

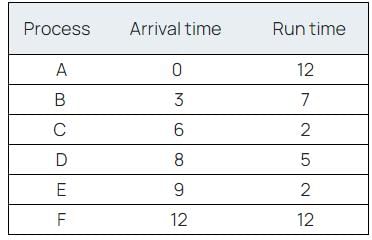

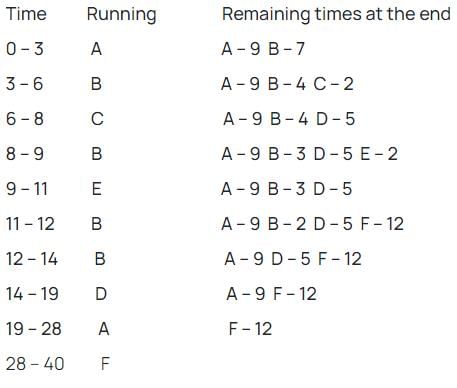

Suppose that jobs arrive according to the following schedule:

Now give the sequence of the processes as they get a share of the CPU if the scheduler used is Shortest Remaining Time First.

- a)A,B,C,B,E,D,B,A,F

- b)A,B,C,B,E,B,D,B,A,F

- c)A,B,C,B,E,B,D,A,F

- d)A,B,C,B,B,B,E,D,A,F

Correct answer is option 'C'. Can you explain this answer?

Machine Experts answered |

Concept:

Shortest remaining time next is the pre-emptive version of shortest-job-first scheduling.

Whenever a process arrives whose burst time (run time) is less than the remaining time of the running process, a context switch happens and the new process takes the CPU.
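A minimal simulation of this policy is sketched below. The job table is hypothetical, since the schedule from the question is not reproduced here. Integer time units and the tie-break in favour of the earlier arrival are also assumptions.

```python
def srtf_order(jobs):
    """jobs: {name: (arrival, burst)}. Returns the sequence of processes given the CPU."""
    remaining = {name: burst for name, (_, burst) in jobs.items()}
    time, order = 0, []
    while any(remaining.values()):
        ready = [n for n in jobs if jobs[n][0] <= time and remaining[n] > 0]
        if not ready:
            time += 1              # CPU idle until the next arrival
            continue
        # Shortest remaining time wins; ties go to the earlier arrival (assumption).
        current = min(ready, key=lambda n: (remaining[n], jobs[n][0]))
        if not order or order[-1] != current:
            order.append(current)  # record only changes of CPU holder
        remaining[current] -= 1
        time += 1
    return order


# Hypothetical arrival and burst times, not the schedule from the question.
sequence = srtf_order({"P1": (0, 5), "P2": (1, 2), "P3": (2, 1)})
print(sequence)
```

Here P2 pre-empts P1 on arrival, P3 runs once P2 finishes, and P1 resumes last.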
