Notes: Semaphores | Operating System - Computer Science Engineering (CSE) PDF Download

Semaphores in Process Synchronization

Semaphore was proposed by Dijkstra in 1965 which is a very significant technique to manage concurrent processes by using a simple integer value, which is known as a semaphore. Semaphore is simply a variable that is non-negative and shared between threads. This variable is used to solve the critical section problem and to achieve process synchronization in the multiprocessing environment.

Semaphores are of two types:

- Binary Semaphore

This is also known as mutex lock. It can have only two values – 0 and 1. Its value is initialized to 1. It is used to implement the solution of critical section problems with multiple processes. - Counting Semaphore

Its value can range over an unrestricted domain. It is used to control access to a resource that has multiple instances.

Now let us see how it does so.

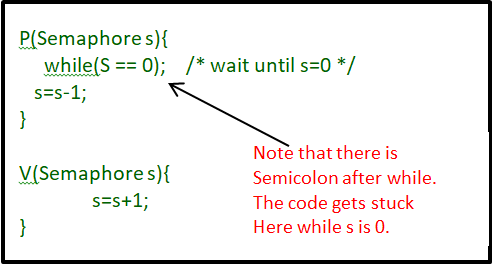

First, look at two operations that can be used to access and change the value of the semaphore variable.

Some point regarding P and V operation:

- P operation is also called wait, sleep, or down operation, and V operation is also called signal, wake-up, or up operation.

- Both operations are atomic and semaphore(s) is always initialized to one. Here atomic means that variable on which read, modify and update happens at the same time/moment with no pre-emption i.e. in-between read, modify and update no other operation is performed that may change the variable.

- A critical section is surrounded by both operations to implement process synchronization. See the below image. The critical section of Process P is in between P and V operation.

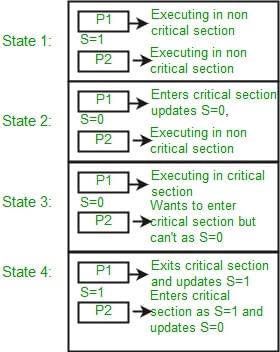

Now, let us see how it implements mutual exclusion. Let there be two processes P1 and P2 and a semaphore s is initialized as 1. Now if suppose P1 enters in its critical section then the value of semaphore s becomes 0. Now if P2 wants to enter its critical section then it will wait until s > 0, this can only happen when P1 finishes its critical section and calls V operation on semaphore s. This way mutual exclusion is achieved. Look at the below image for details which is Binary semaphore.

Mutex vs Semaphore

What is the difference between a mutex and a semaphore? When should you use a mutex and when should you use a semaphore?

A concrete understanding of Operating System concepts is required to design/develop smart applications. Our objective is to educate the reader on these concepts and learn from other expert geeks.

As per operating system terminology, mutexes and semaphores are kernel resources that provide synchronization services (also called synchronization primitives). Why do we need such synchronization primitives? Won’t only one be sufficient? To answer these questions, we need to understand a few keywords. Please read the posts on atomicity and critical section. We will illustrate with examples to understand these concepts well, rather than following the usual OS textual description.

The producer-consumer problem:

Consider the standard producer-consumer problem. Assume, we have a buffer of 4096-byte length. A producer thread collects the data and writes it to the buffer. A consumer thread processes the collected data from the buffer. The objective is, both the threads should not run at the same time.

Note that the content is a generalized explanation. Practical details vary with implementation.

Using Mutex: A mutex provides mutual exclusion, either producer or consumer can have the key (mutex) and proceed with their work. As long as the buffer is filled by the producer, the consumer needs to wait, and vice versa.

At any point of time, only one thread can work with the entire buffer. The concept can be generalized using semaphore.

Using Semaphore: A semaphore is a generalized mutex. In lieu of a single buffer, we can split the 4 KB buffer into four 1 KB buffers (identical resources). A semaphore can be associated with these four buffers. The consumer and producer can work on different buffers at the same time.

Misconception: There is an ambiguity between binary semaphore and mutex. We might have come across that a mutex is a binary semaphore. But it is not! The purpose of mutex and semaphore are different. Maybe, due to similarity in their implementation a mutex would be referred to as a binary semaphore.

Strictly speaking, a mutex is a locking mechanism used to synchronize access to a resource. Only one task (can be a thread or process based on OS abstraction) can acquire the mutex. It means there is ownership associated with a mutex, and only the owner can release the lock (mutex).

Semaphore is signaling mechanism (“I am done, you can carry on” kind of signal). For example, if you are listening to songs (assume it as one task) on your mobile phone and at the same time, your friend calls you, an interrupt is triggered upon which an interrupt service routine (ISR) signals the call processing task to wakeup.

General Questions

Q.1. Can a thread acquire more than one lock (Mutex)?

Ans: Yes, it is possible that a thread is in need of more than one resource, hence the locks. If any lock is not available the thread will wait (block) on the lock.

Q.2. Can a mutex be locked more than once?

Ans: A mutex is a lock. Only one state (locked/unlocked) is associated with it. However, a recursive mutex can be locked more than once (POSIX compliant systems), in which a count is associated with it, yet retains only one state (locked/unlocked). The programmer must unlock the mutex as many number times as it was locked.

Q.3. What happens if a non-recursive mutex is locked more than once.

Ans: Deadlock. If a thread that had already locked a mutex, tries to lock the mutex again, it will enter into the waiting list of that mutex, which results in a deadlock. It is because no other thread can unlock the mutex. An operating system implementer can exercise care in identifying the owner of the mutex and return if it is already locked by a same thread to prevent deadlocks.

Q.4. Are binary semaphore and mutex same?

Ans: No. We suggest treating them separately, as it is explained in signaling vs locking mechanisms. But a binary semaphore may experience the same critical issues (Example: priority inversion) associated with a mutex. We will cover these in a later article.

A programmer can prefer mutex rather than creating a semaphore with count 1.

Q.5. What is a mutex and critical section?

Ans: Some operating systems use the same word critical section in the API. Usually a mutex is a costly operation due to protection protocols associated with it. At last, the objective of mutex is atomic access. There are other ways to achieve atomic access like disabling interrupts which can be much faster but ruins responsiveness. The alternate API makes use of disabling interrupts.

Q.6. What are events?

Ans: The semantics of mutex, semaphore, event, critical section, etc… are same. All are synchronization primitives. Based on their cost in using them they are different. We should consult the OS documentation for exact details.

Q.7. Can we acquire mutex/semaphore in an Interrupt Service Routine?

Ans: An ISR will run asynchronously in the context of current running thread. It is not recommended to query (blocking call) the availability of synchronization primitives in an ISR. The ISR are meant be short, the call to mutex/semaphore may block the current running thread. However, an ISR can signal a semaphore or unlock a mutex.

Q.8. What we mean by “thread blocking on mutex/semaphore” when they are not available?

Ans: Every synchronization primitive has a waiting list associated with it. When the resource is not available, the requesting thread will be moved from the running list of processors to the waiting list of the synchronization primitive. When the resource is available, the higher priority thread on the waiting list gets the resource (more precisely, it depends on the scheduling policies).

Q.9. Is it necessary that a thread must block always when resource is not available?

Ans: Not necessary. If the design is sure ‘what has to be done when resource is not available‘, the thread can take up that work (a different code branch). To support application requirements the OS provides non-blocking API.

For example POSIX pthread_mutex_trylock() API. When the mutex is not available the function returns immediately whereas the API pthread_mutex_lock() blocks the thread till the resource is available.

Lock Variable Synchronization Mechanism

A lock variable provides the simplest synchronization mechanism for processes. Some noteworthy points regarding Lock Variables are

- Its a software mechanism implemented in user mode, i.e. no support required from the Operating System.

- Its a busy waiting solution (keeps the CPU busy even when its technically waiting).

- It can be used for more than two processes.

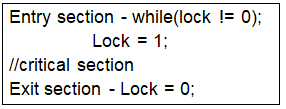

When Lock = 0 implies critical section is vacant (initial value ) and Lock = 1 implies critical section occupied.

The pseudocode looks something like this:

A more formal approach to the Lock Variable method for process synchronization can be seen in the following code snippet:

// Program

char buffer[SIZE];

int count = 0,

start = 0,

end = 0;

struct lock l;

// initialize lock variable

lock_init(&l);

void put(char c)

{

// entry section

lock_acquire(&l);

// critical section begins

while (count == SIZE) {

lock_release(&l);

lock_acquire(&l);

}

count++;

buffer[start] = c;

start++;

if (start == SIZE) {

start = 0;

}

// critical section ends

// exit section

lock_release(&l);

}

char get()

{

char c;

// entry section

lock_acquire(&l);

// critical section begins

while (count == 0) {

lock_release(&l);

lock_acquire(&l);

}

count--;

c = buffer[end];

end++;

if (end == SIZE) {

end = 0;

}

// critical section ends

// exit section

lock_release(&l);

return c;

}

Here we can see a classic implementation of the reader-writer’s problem. The buffer here is the shared memory and many processes are either trying to read or write a character to it. To prevent any ambiguity of data we restrict concurrent access by using a lock variable. We have also applied a constraint on the number of readers/writers that can have access.

Now every Synchronization mechanism is judged on the basis of three primary parameters:

- Mutual Exclusion.

- Progress.

- Bounded Waiting.

Of which mutual exclusion is the most important of all parameters. The Lock Variable doesn’t provide mutual exclusion in some cases. This fact can be best verified by writing its pseudo-code in the form of an assembly language code as given below.

- Load Lock, R0 ; (Store the value of Lock in Register R0.)

- CMP R0, #0 ; (Compare the value of register R0 with 0.)

- JNZ Step 1 ; (Jump to step 1 if value of R0 is not 0.)

- Store #1, Lock ; (Set new value of Lock as 1.) Enter critical section

- Store #0, Lock ; (Set the value of lock as 0 again.)

Now let’s suppose that processes P1 and P2 are competing for Critical Section and their sequence of execution be as follows (initial value of Lock = 0)

- P1 executes statement 1 and gets pre-empted.

- P2 executes statement 1, 2, 3, 4 and enters Critical Section and gets pre-empted.

- P1 executes statement 2, 3, 4 and also enters Critical Section.

Here initially the R0 of process P1 stores lock value as 0 but fails to update the lock value as 1. So when P2 executes it also finds the LOCK value as 0 and enters Critical Section by setting LOCK value as 1. But the real problem arises when P1 executes again it doesn’t check the updated value of Lock. It only checks the previous value stored in R0 which was 0 and it enters critical section.

This is only one possible sequence of execution among many others. Some may even provide mutual exclusion but we cannot dwell on that. According to murphy’s law

“Anything that can go wrong will go wrong“. So like all easy things the Lock Variable Synchronization method comes with its fair share of Demerits but its a good starting point for us to develop better Synchronization Algorithms to take care of the problems that we face here.

|

10 videos|99 docs|33 tests

|

FAQs on Notes: Semaphores - Operating System - Computer Science Engineering (CSE)

| 1. What are semaphores in process synchronization? |  |

| 2. How do semaphores work in process synchronization? |  |

| 3. What are the types of semaphores in process synchronization? |  |

| 4. What is the purpose of using semaphores in process synchronization? |  |

| 5. Can semaphores be used for interprocess communication? |  |