Peterson’s Algorithm | Operating System - Computer Science Engineering (CSE)

Peterson’s Algorithm for Mutual Exclusion

Problem: Given 2 processes i and j, you need to write a program that can guarantee mutual exclusion between the two without any additional hardware support.

Solution: There are multiple ways to solve this problem, but most of them require additional hardware support. The simplest and most popular way is Peterson’s Algorithm for mutual exclusion, published by Gary L. Peterson in 1981. The initial work in this direction was done by Theodorus Jozef Dekker, who came up with Dekker’s algorithm around 1960; Peterson later devised a simpler solution to the same problem, which came to be known as Peterson’s Algorithm.

Peterson’s algorithm guarantees mutual exclusion using only shared memory. It relies on two ideas:

- Willingness to acquire lock.

- Turn to acquire lock.

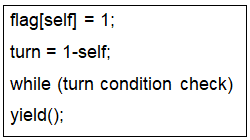

Explanation: The idea is that a thread first expresses its desire to acquire the lock by setting flag[self] = 1, and then gives the other thread a chance to acquire the lock by setting turn = 1-self. If the other thread also desires the lock, it gets the lock first and, when it releases it, the chance passes back to the first thread. If the other thread does not desire the lock, the while-loop condition is false and the first thread gets the lock immediately.
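The idea above can be sketched in C as follows. This is a minimal sketch and not necessarily the listing from the original figure: the `unlock()` function is an assumed counterpart to `lock()`, and C11 `atomic_int` (with its default sequentially consistent operations) is an assumption made here so that the stores and loads are not reordered.

```c
#include <stdatomic.h>

/* Shared state: flag[k] == 1 means thread k wants the lock;
   turn names the thread that goes first when both want it. */
atomic_int flag[2];
atomic_int turn;

/* self is 0 or 1. */
void lock(int self)
{
    atomic_store(&flag[self], 1);   /* willingness to acquire the lock */
    atomic_store(&turn, 1 - self);  /* give the other thread the first chance */
    /* Busy-wait while the other thread wants the lock and it is its turn. */
    while (atomic_load(&flag[1 - self]) == 1 &&
           atomic_load(&turn) == 1 - self)
        ;
}

/* Assumed counterpart to lock(): withdraw the request. */
void unlock(int self)
{
    atomic_store(&flag[self], 0);
}
```

A thread would wrap its critical section as `lock(0); /* critical section */ unlock(0);`, with the other thread using index 1.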

Wastage of CPU clock cycles

In layman’s terms, while a thread waits for its turn, it spins in a while loop that tests the condition millions of times per second, doing unnecessary computation. There is a better way to wait, known as “yield”.

To understand what it does, we need to look at how the process scheduler works in Linux. The idea described here is a simplified version of the scheduler; the actual implementation is considerably more complicated.

Consider the following example,

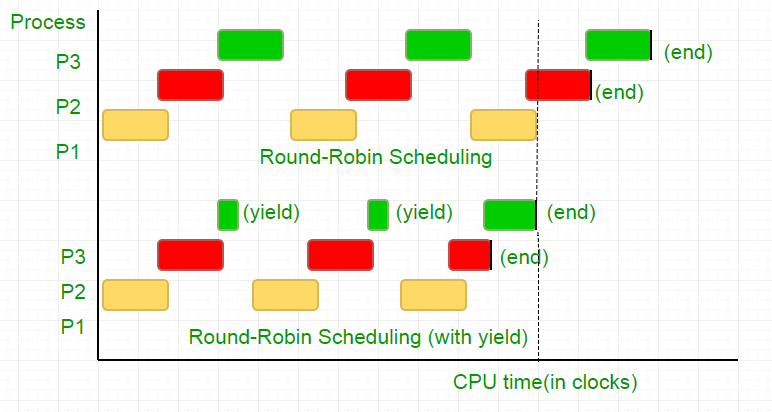

There are three processes, P1, P2 and P3. Process P3 has a while loop similar to the one in our code, doing no useful computation, and it exits from the loop only when P2 finishes its execution. The scheduler puts all of them in a round-robin queue. Now, say the clock speed of the processor is 1,000,000 cycles per second (1 MHz), and the scheduler allocates 100 clock cycles to each process in each iteration. Then P1 runs first for 100 clocks (0.0001 seconds), then P2 (0.0001 seconds), followed by P3 (0.0001 seconds). Since there are no more processes, this cycle repeats until P2 ends, after which P3 leaves its loop, continues its execution and eventually terminates.

Until then, each 100-clock time slice given to P3 is a complete waste of CPU cycles. To avoid this, we voluntarily give up the CPU time slice, i.e. yield, which ends the time slice so that the scheduler picks the next process to run. Now we test our condition once and then give up the CPU. If the test takes 25 clock cycles, we save 75% of the computation in a time slice.

With a processor clock speed of 1 MHz, this is a substantial saving!

Different operating systems provide different functions for this. Linux provides sched_yield().
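The arithmetic behind these figures can be checked with a short C sketch. The function names are hypothetical, and the numbers are the ones assumed in the example above (a 1 MHz clock, 100-cycle time slices, a 25-cycle test).

```c
/* Length of one time slice in seconds. */
double slice_seconds(double clock_hz, double cycles_per_slice)
{
    return cycles_per_slice / clock_hz;
}

/* Percentage of a slice saved by testing the condition once and
   then yielding, instead of spinning for the whole slice. */
double percent_saved(double cycles_per_slice, double cycles_per_test)
{
    return 100.0 * (cycles_per_slice - cycles_per_test) / cycles_per_slice;
}
```

With these inputs, `slice_seconds(1e6, 100)` comes to 0.0001 seconds and `percent_saved(100, 25)` comes to 75.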

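    // Program: lock() that yields instead of spinning for the whole time slice.
    // flag[] and turn are the shared variables described above.

    #include <sched.h>

    void lock(int self)
    {
        flag[self] = 1;
        turn = 1 - self;
        while (flag[1 - self] == 1 && turn == 1 - self)
            // Only change is the addition of the sched_yield() call
            sched_yield();
    }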

Memory fence

The code in the earlier tutorial might have worked on most systems, but it was not 100% correct. The logic was sound, but most modern CPUs employ performance optimizations that can result in out-of-order execution. This reordering of memory operations (loads and stores) normally goes unnoticed within a single thread of execution, but can cause unpredictable behaviour in concurrent programs.

Consider this example,

    while (f == 0);
    // Memory fence required here
    print x;

In the above example, the compiler (and the CPU) may treat the two statements as independent of each other and reorder them to improve efficiency, which can cause problems in concurrent programs: x might be read before f is seen to change. To avoid this, we place a memory fence, which tells the compiler and the processor that memory operations must not be moved across the barrier.
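A hedged C11 sketch of this pattern follows. The writer side, the names `publish()` and `wait_and_read()`, and the idea of returning x instead of printing it are assumptions made for illustration. `atomic_thread_fence()` from `<stdatomic.h>` stands in for the memory fence, and f is made atomic so that the spin loop itself is well defined.

```c
#include <stdatomic.h>

int x;          /* ordinary data, written before the flag is raised */
atomic_int f;   /* 0 = not ready, 1 = x is ready */

/* Writer side (assumed): make x visible before f. */
void publish(int value)
{
    x = value;
    atomic_thread_fence(memory_order_release);  /* x is written before f */
    atomic_store_explicit(&f, 1, memory_order_relaxed);
}

/* Reader side: the "while (f == 0); print x;" pattern. */
int wait_and_read(void)
{
    while (atomic_load_explicit(&f, memory_order_relaxed) == 0)
        ;                                       /* spin until f is set */
    atomic_thread_fence(memory_order_acquire);  /* memory fence required here */
    return x;                                   /* sees the value published before f */
}
```

Without the two fences, nothing would stop the read of x from being moved ahead of the loop, or the write of x from being moved after the write of f.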

So the statements in lock() (setting flag[self], setting turn, and then testing the while condition) have to execute in exactly that order for the lock to work; otherwise the lock can fail, for example by letting both threads into the critical section at once.

To ensure this, compilers provide a built-in that prevents memory operations from being reordered across it. In the case of gcc, it is __sync_synchronize().
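Putting the pieces together, the lock could be sketched as below. It assumes GCC or Clang (for `__sync_synchronize()`) and POSIX threads. `unlock()`, `worker()`, `run_two_threads()`, `struct job` and `counter` are hypothetical names added for the demonstration. The shared variables use relaxed C11 atomics so that the fences alone carry the ordering, and a fence is placed after every shared store and around the critical section, which is a deliberately conservative choice rather than the minimal placement.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stddef.h>

atomic_int flag[2];
atomic_int turn;
long counter;                   /* protected by the lock */

void lock(int self)
{
    atomic_store_explicit(&flag[self], 1, memory_order_relaxed);
    __sync_synchronize();       /* flag store stays before turn store */
    atomic_store_explicit(&turn, 1 - self, memory_order_relaxed);
    __sync_synchronize();       /* both stores stay before the loads below */
    while (atomic_load_explicit(&flag[1 - self], memory_order_relaxed) == 1 &&
           atomic_load_explicit(&turn, memory_order_relaxed) == 1 - self)
        sched_yield();          /* give up the time slice while waiting */
    __sync_synchronize();       /* critical section stays after the loop */
}

void unlock(int self)
{
    __sync_synchronize();       /* critical section stays before the release */
    atomic_store_explicit(&flag[self], 0, memory_order_relaxed);
}

struct job { int self; long iterations; };

static void *worker(void *arg)
{
    struct job *j = arg;
    for (long n = 0; n < j->iterations; n++) {
        lock(j->self);
        counter++;              /* critical section */
        unlock(j->self);
    }
    return NULL;
}

/* Runs two threads and returns the final counter value. */
long run_two_threads(long iterations)
{
    struct job jobs[2] = { {0, iterations}, {1, iterations} };
    pthread_t t[2];
    counter = 0;
    for (int k = 0; k < 2; k++)
        pthread_create(&t[k], NULL, worker, &jobs[k]);
    for (int k = 0; k < 2; k++)
        pthread_join(t[k], NULL);
    return counter;
}
```

Compile with `-pthread`. If no update is lost, `run_two_threads(n)` returns 2 × n; a correct result in one run is evidence that the lock works, not a proof.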

Peterson’s Algorithm in Process Synchronization

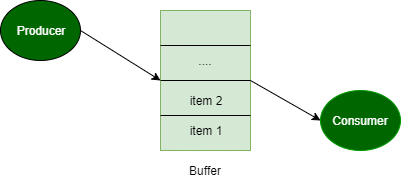

Problem: The producer-consumer problem (or bounded-buffer problem) describes two processes, the producer and the consumer, which share a common, fixed-size buffer used as a queue. The producer produces an item and puts it into the buffer. If the buffer is already full, the producer has to wait for an empty slot. The consumer consumes an item from the buffer. If the buffer is already empty, the consumer has to wait for an item. Implement Peterson’s Algorithm for the two processes using shared memory such that there is mutual exclusion between them. The solution should be free from synchronization problems.

Peterson’s algorithm

    // code for producer (j)

    // producer j is ready to produce an item
    flag[j] = true;
    // but consumer (i) can consume an item
    turn = i;
    // if consumer is ready to consume an item and it is consumer's turn
    while (flag[i] == true && turn == i) {
        // then producer will wait
    }
    // otherwise producer will produce an item
    // and put it into buffer (critical section)

    // now, producer is out of critical section
    flag[j] = false;
    // end of code for producer

    //--------------------------------------------------------

    // code for consumer (i)

    // consumer i is ready to consume an item
    flag[i] = true;
    // but producer (j) can produce an item
    turn = j;
    // if producer is ready to produce an item and it is producer's turn
    while (flag[j] == true && turn == j) {
        // then consumer will wait
    }
    // otherwise consumer will consume
    // an item from buffer (critical section)

    // now, consumer is out of critical section
    flag[i] = false;
    // end of code for consumer

Explanation of Peterson’s algorithm

Peterson’s Algorithm is used to synchronize two processes. It uses two variables to accomplish this: a bool array flag of size 2 and an int variable turn.

In the solution, i represents the consumer and j represents the producer. Initially both flags are false. When a process wants to execute its critical section, it sets its flag to true and sets turn to the index of the other process. This means that the process wants to execute but will allow the other process to run first. The process busy-waits until the other process has finished its own critical section.

After this, the current process enters its critical section and adds a random number to, or removes one from, the shared buffer. After completing the critical section, it sets its own flag to false, indicating that it no longer wishes to execute.
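The description above could be turned into C roughly as follows. This is a sketch under stated assumptions: `BUFFER_SIZE`, `enter()`, `leave()`, `try_produce()` and `try_consume()` are hypothetical names, C11 atomics with their default sequentially consistent ordering stand in for the plain variables, and the buffer is a small circular queue. Instead of waiting inside the critical section, each function reports a full or empty buffer and leaves the retry to the caller.

```c
#include <stdatomic.h>
#include <stdbool.h>

#define BUFFER_SIZE 4                   /* hypothetical capacity */
enum { CONSUMER = 0, PRODUCER = 1 };    /* i = consumer, j = producer */

atomic_bool flag[2];                    /* initially false */
atomic_int turn;
int buffer[BUFFER_SIZE];                /* used only inside the critical section */
int head, count;

static void enter(int self)
{
    int other = 1 - self;
    atomic_store(&flag[self], true);    /* this process is ready */
    atomic_store(&turn, other);         /* but the other one may go first */
    while (atomic_load(&flag[other]) && atomic_load(&turn) == other)
        ;                               /* busy wait */
}

static void leave(int self)
{
    atomic_store(&flag[self], false);   /* out of the critical section */
}

/* Returns false if the buffer is full; the caller is expected to retry. */
bool try_produce(int item)
{
    bool ok = false;
    enter(PRODUCER);
    if (count < BUFFER_SIZE) {
        buffer[(head + count) % BUFFER_SIZE] = item;
        count++;
        ok = true;
    }
    leave(PRODUCER);
    return ok;
}

/* Returns false if the buffer is empty; the caller is expected to retry. */
bool try_consume(int *item)
{
    bool ok = false;
    enter(CONSUMER);
    if (count > 0) {
        *item = buffer[head];
        head = (head + 1) % BUFFER_SIZE;
        count--;
        ok = true;
    }
    leave(CONSUMER);
    return ok;
}
```

A producer would loop on `try_produce(item)` until it returns true, and a consumer would do the same with `try_consume(&item)`; releasing the lock between attempts lets the other side make progress.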
