The Physiology of Human Vision

From the Cornea to Photoreceptors

The eye is approximately spherical, with a consistent diameter of about 24mm across individuals. Light enters through the cornea, a hard and transparent surface that offers significant optical power. The outer eye surface is safeguarded by the sclera, a tough, white layer. The majority of the eye's interior is filled with vitreous humor, a transparent and gelatinous substance that allows light to penetrate with minimal distortion.

After passing through the cornea, light crosses a chamber containing aqueous humor, another transparent and gelatinous substance. It then enters the lens through the pupil, whose size is regulated by the iris, a disc-shaped structure that controls the aperture and thus the amount of light entering. Ciliary muscles alter the optical power of the lens. The light then passes through the vitreous humor and reaches the retina, which lines over 180° of the inner eye boundary. Although the figure depicts a 2D cross-section, the retina is shaped like an arc, akin to a curved visual display. It is covered with photoreceptors, which act as "input pixels," and includes a crucial region known as the fovea, which offers the highest visual acuity. The optic disc, a small opening in the retina, lets neural pulses leave the eye through the optic nerve; relative to the fovea, it lies on the side nearer the nose.

Photoreceptors

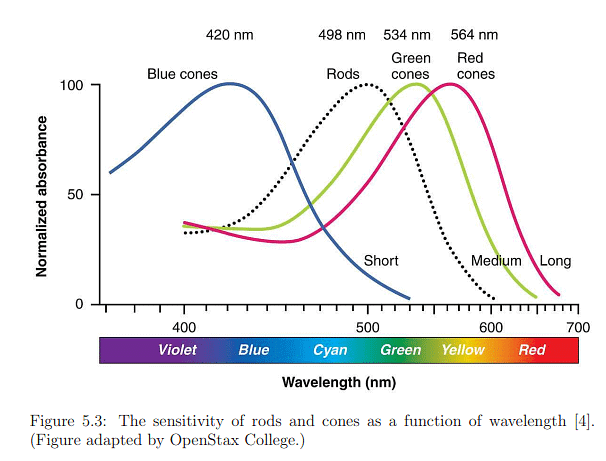

The retina comprises two types of photoreceptors essential for vision: 1) rods, sensitive to extremely low light levels, and 2) cones, requiring higher light levels and specializing in color discrimination. The width of the smallest cones is approximately 1000nm, close to the visible light wavelength. Each human retina densely packs about 120 million rods and 6 million cones. The detection capabilities of these photoreceptor types are illustrated in Figure 5.3. Rod sensitivity peaks at 498nm, falling between blue and green in the spectrum. Cones are categorized into three types based on their responsiveness to blue, green, or red light.

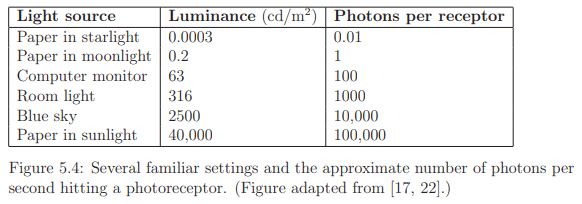

Photoreceptors exhibit responses across a broad dynamic range, as depicted in Figure 5.4, with luminance measured in candelas per square meter. This range spans seven orders of magnitude, from one photon hitting a photoreceptor every 100 seconds to 100,000 photons per receptor per second. In low light conditions, only rods are activated, leading to monochromatic vision known as scotopic vision, where colors are indistinguishable. Full adaptation to low light, taking up to 35 minutes, is required for scotopic vision. In brighter light, cones become active, initiating trichromatic vision called photopic vision, which may take up to ten minutes for complete adaptation. The adjustment period for transitioning between scotopic and photopic vision is noticeable, such as when lights are unexpectedly turned on in a dark environment.

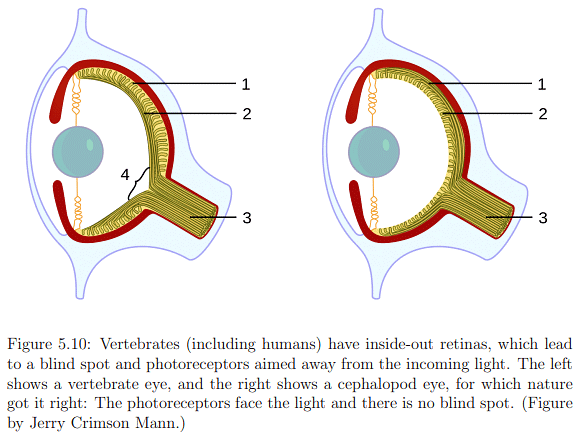

Photoreceptor Density

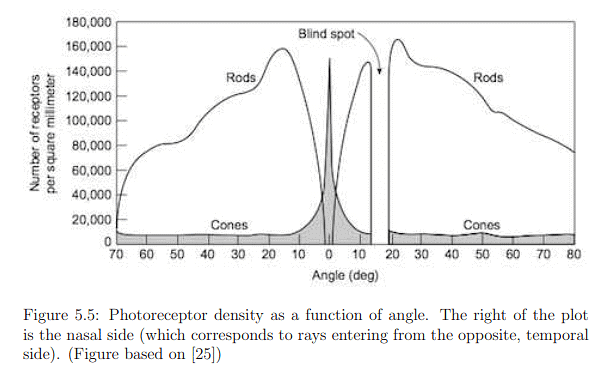

The distribution of photoreceptors across the retina exhibits significant variation, as depicted in Figure 5.5. The fovea, characterized by the highest photoreceptor concentration, is particularly interesting. The innermost section, with a diameter of only 0.5mm or an angular range of ±0.85 degrees, is almost exclusively composed of cones. This implies that to perceive a sharp, colored image, the eye must be directed straight at the target. The entire fovea, with a diameter of 1.5mm (±2.6 degrees angular range), has a dominant concentration of rods in the outer ring. Peripheral vision, corresponding to rays entering the cornea from the sides, lands on retina areas with lower rod density and very low cone density. This results in effective peripheral movement detection but poor color discrimination. The blind spot, where no photoreceptors are present, is a consequence of the retina's inside-out structure, necessitated by the routing of neural signals to the brain.

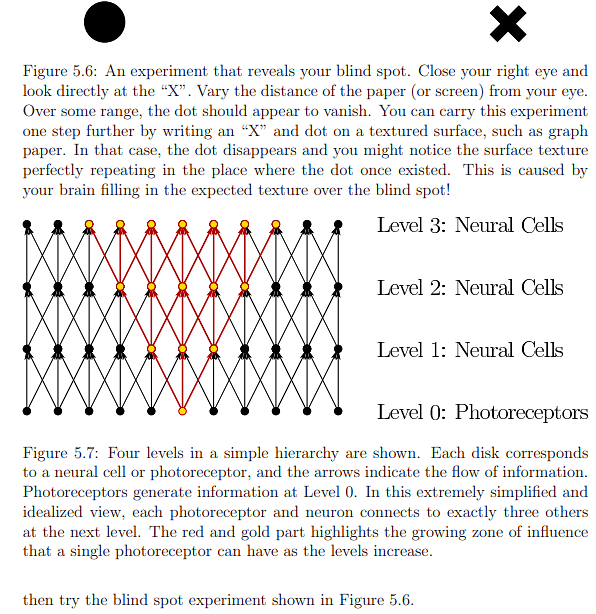

The photoreceptor densities presented in Figure 5.5 raise a paradox. With 20/20 vision, the perception is as if the eyes capture a sharp, colorful image across a vast angular range, which seems implausible given the limited range for sensing sharp, colored images. Moreover, the blind spot should create a void in the visual field. Remarkably, perceptual processes generate an illusion of capturing a complete image by filling in missing details using contextual information, detailed in Section 5.2, and frequent eye movements, discussed in Section 5.3. This illustrates how the brain can create the impression of seeing a comprehensive image despite the limitations of sensory input.

From Photoreceptors to the Visual Cortex

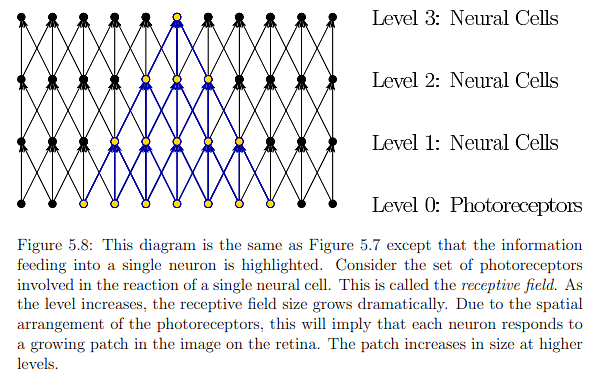

Photoreceptors serve as transducers, converting light-energy stimuli into electrical signals known as neural impulses, thereby conveying information about the external world into neural structures. Signal propagation occurs hierarchically, from photoreceptors to the visual cortex, influencing a network of neurons at various levels. As illustrated in Figure 5.7, the number of influenced neurons grows rapidly as the levels increase. In Figure 5.8, the same diagram highlights how the number of photoreceptors influencing a single neuron increases with level. Neurons at lower levels make simple signal comparisons, while higher levels respond to larger retinal image patches. The brain, through significant processing, fuses information from a lifetime of experiences with that from photoreceptors, resulting in perceptual phenomena like recognizing faces or judging object sizes, a process taking over 100ms to enter consciousness.

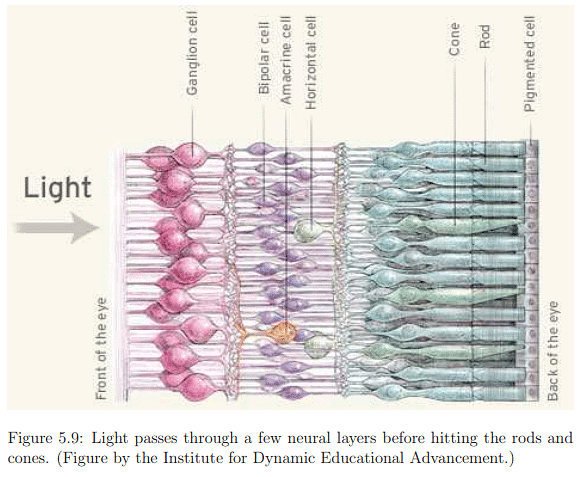

Examining the first layers of neurons in more detail (Figure 5.9), information flows from rods and cones to bipolar, amacrine, and horizontal cells in the inner nuclear layer before reaching the ganglion cells, which form the ganglion cell layer. The human retina's inside-out structure, as depicted in Figure 5.10, means that light passes through the neural cells before reaching the photoreceptors. A hole in the retina lets the ganglion cell axons bundle into the optic nerve and leave the eye, which produces the blind spot. The neural cells in Figure 5.9 are not arranged in the idealized way shown in Figure 5.8. Bipolar cells, which connect photoreceptors to ganglion cells, vary in their connections to cones and rods. Two types of bipolar cells, ON and OFF, respond to increasing and decreasing photon absorption, respectively. Horizontal cells provide lateral inhibition, reducing the activation of neighboring photoreceptors, while amacrine cells connect horizontally between bipolar cells and other amacrine cells, and vertically to ganglion cells. Although human vision is not completely understood even at these lowest layers, what is known contributes significantly to designing effective VR systems and predicting human responses to visual stimuli.

Within the ganglion cell layer, various cell types, such as midget, parasol, and bistratified cells, process segments of the retinal image. Each ganglion cell possesses a large receptive field, determined by the contributing photoreceptors (Figure 5.8). These cells conduct simple filtering operations based on spatial, temporal, and spectral (color) variations in the stimulus across photoreceptors. An example in Figure 5.11 illustrates spatial opponency, where a ganglion cell is triggered by red in the center but not green in the surrounding area. Ganglion cells act as miniature image processing units, detecting local changes in time, space, and/or color and emphasizing simple features like edges. By the time ganglion axons exit the eye through the optic nerve, significant image processing has occurred, enhancing visual perception beyond the raw photon-based image at the photoreceptor level.

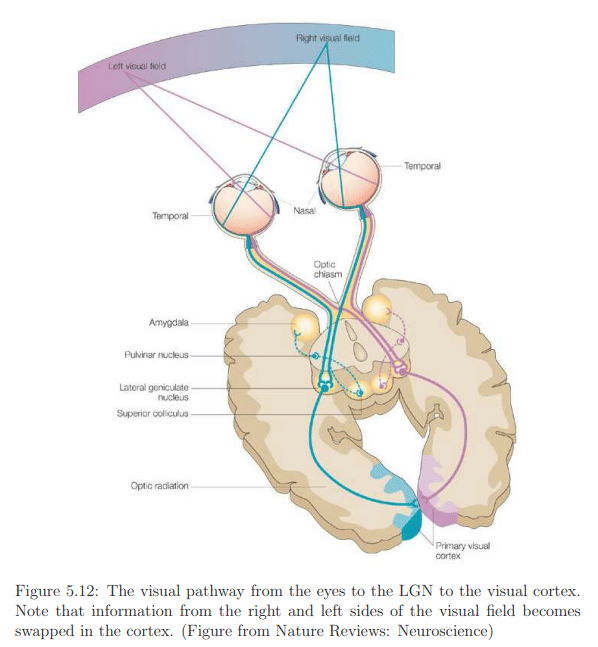

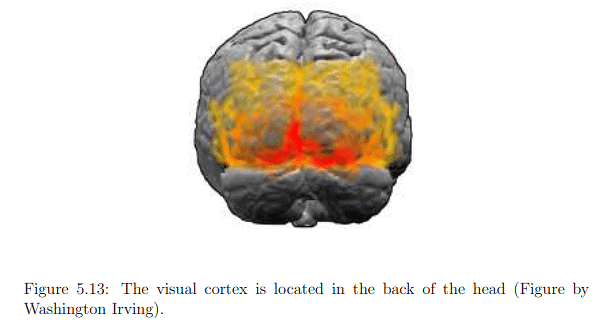

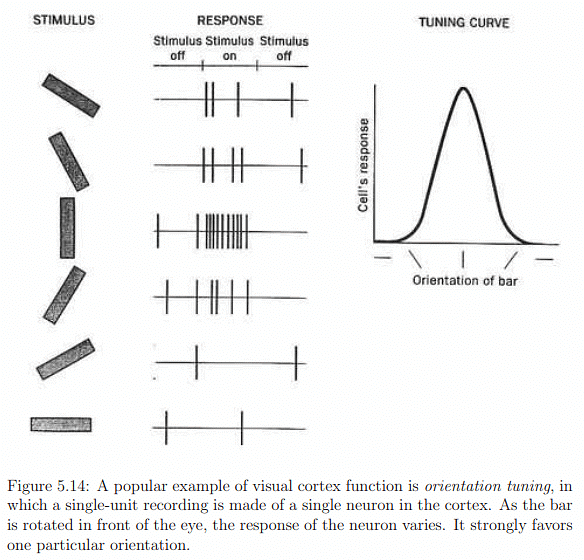

The optic nerve connects to the lateral geniculate nucleus (LGN) in the thalamus (Figure 5.12), primarily serving as a router for sending sensory signals to the brain and performing some processing. The LGN forwards image information to the primary visual cortex (V1) at the back of the brain. The visual cortex, illustrated in Figure 5.13, comprises interconnected areas specializing in various functions (Figure 5.14). Chapter 6 will delve into visual perception, the conscious outcome of processing in the visual cortex, involving neural circuitry, retinal stimulation, input from other senses, and expectations based on prior experiences. Understanding the functioning and integration of these processes remains an active research area.

Eye Movements

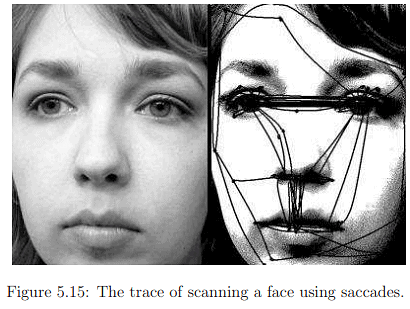

Eye rotations are a complex and essential aspect of human vision, occurring both voluntarily and involuntarily. They enable individuals to focus on specific features in the environment, compensating for head or target movement. A primary purpose of eye movements is to align the feature of interest with the fovea, the region capable of sensing dense, colorful images. Since the fovea has a limited field of view, eyes rapidly scan large objects, fixating on points of interest to construct a detailed view, as depicted in Figure 5.15.

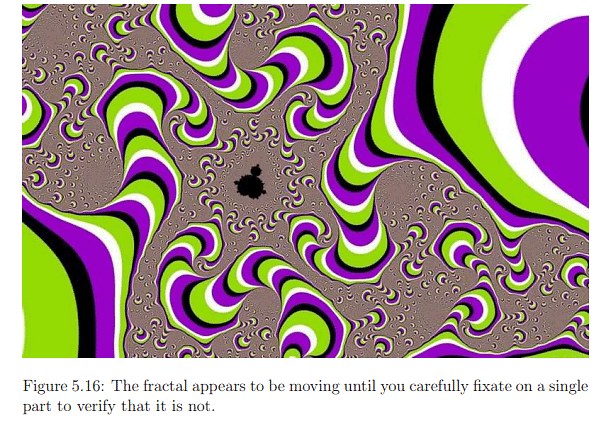

Another reason for eye movements is that photoreceptors respond sluggishly to stimuli because of their chemical nature. They take up to 10ms to fully respond and produce a response lasting up to 100ms. Eye movements help keep the image fixed on the same set of photoreceptors so that they can fully charge, much as a camera must be held still to avoid blur in low light at slow shutter speeds. Eye movements also help maintain a stereoscopic view and prevent adaptation to constant stimulation. Experimental evidence suggests that visual perception fades away when eye motions are completely suppressed. Because eye movements combine to build a coherent view, it is difficult for scientists to predict and explain how people interpret some stimuli, such as the optical illusion in Figure 5.16.

Eye Muscles

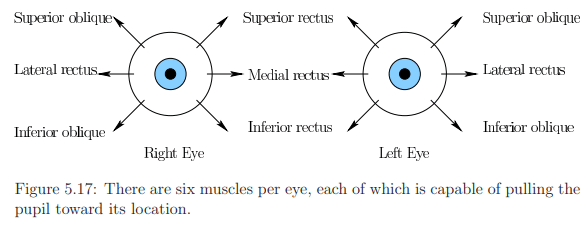

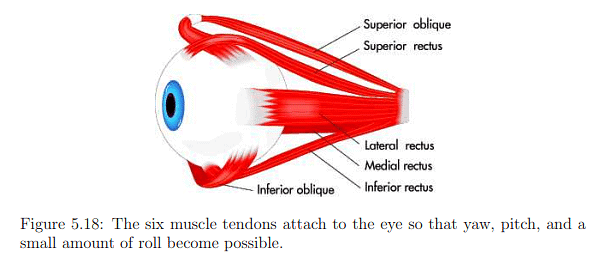

- Eye rotation is governed by six muscles attached to the sclera through tendons.

- Figures 5.17 and 5.18 depict the names and arrangement of these muscles.

- The tendons pull on the eye in opposing pairs, e.g., those of the medial rectus and lateral rectus for yaw (side-to-side) rotation.

- Pitch motion involves four muscles per eye, and a combined pitch and yaw rotation engages all six muscles.

- A minimal amount of roll motion is possible, but eyes are generally not designed for significant roll.

Types of Movements

- Saccades:

- Rapid eye movements (<45ms) with rotations of about 900° per second.

- Purpose: Swiftly relocate the fovea for high visual acuity on important scene features.

- Saccadic masking hides intervals of time during saccades, distorting time perception.

- Creates the illusion of high acuity over a broad angular range.

- Can occur without awareness but can also be consciously controlled.

- Smooth Pursuit:

- Involves slow eye rotation to track a moving target feature.

- Rate of rotation is usually less than 30° per second.

- Main function: Reduce motion blur on the retina, providing image stabilization.

- Saccades may be intermittently inserted if the target is moving too fast.

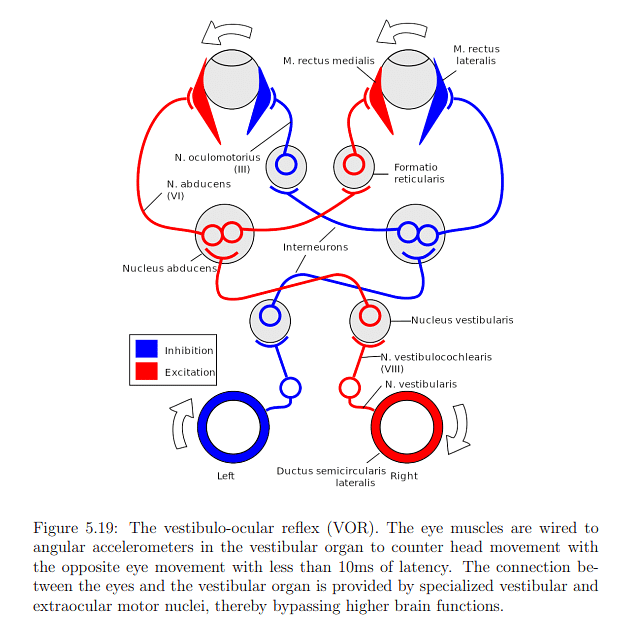

- Vestibulo-Ocular Reflex (VOR):

- Involuntary eye motion to counteract head rotation, ensuring a stable image.

- Reflex triggered by angular accelerations sensed by vestibular organs.

- Primary purpose: Image stabilization.

- Optokinetic Reflex:

- Occurs when observing a fast-moving object.

- Involves rapid and involuntary eye motions, alternating between smooth pursuit and saccade.

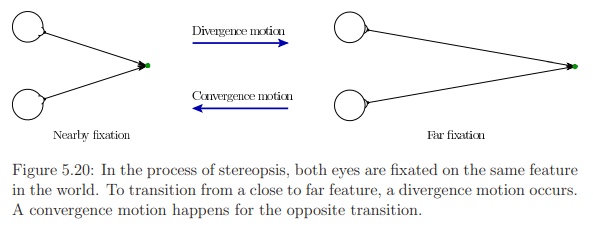

- Vergence:

- Two types: convergence (the eyes rotate toward each other) and divergence (the eyes rotate away from each other).

- Aligns both eyes with the same object for stereopsis.

- Provides crucial information about object distance.

- Microsaccades:

- Small, involuntary jerks of less than one degree, following an erratic path.

- Functions include control of fixations, reduction of perceptual fading, enhancement of visual acuity, and resolving perceptual ambiguities.

- Complex behavior, actively researched in perceptual psychology, biology, and neuroscience.

Eye and head movements together

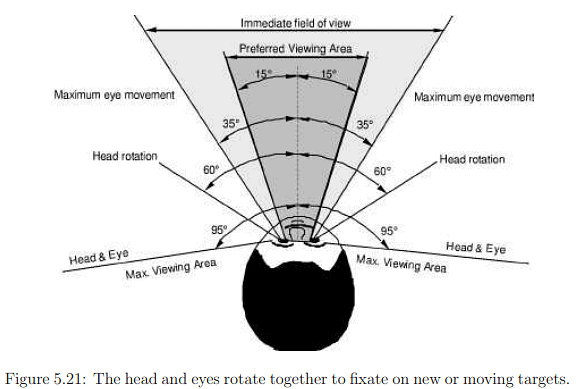

Eye and head movements typically occur in conjunction, and understanding this interaction is crucial. The angular range for yaw rotations of both the head and eyes is depicted in Figure 5.21. While eye yaw is symmetrical, allowing movement of 35° to the left or right, eye pitching is asymmetrical. Human eyes can pitch 20° upward and 25° downward, suggesting that it might be optimal to center a VR display slightly below the pupils when the eyes are looking directly forward. During the vestibulo-ocular reflex (VOR), eye rotation is coordinated to counteract head motion. In cases of smooth pursuit, the head and eyes may move together to maintain a moving target in the preferred viewing area.

Implications for VR

This chapter has focused on the human hardware for vision, exploring fundamental physiological properties that directly affect the engineering requirements for visual display hardware. Engineered systems must be good enough to fool our senses, but they need not exceed the performance limits of our receptors. The ideal VR display should therefore be designed to match the capabilities of the sense it aims to deceive.

The necessary qualities for an effective VR visual display can be distilled into three crucial factors:

- Spatial resolution: The number of pixels required per unit area.

- Intensity resolution and range: This factor, which can also be termed color resolution and range, involves determining the number of intensity values that can be generated, along with establishing the minimum and maximum intensity values. It's worth noting that photoreceptors respond to a vast range of light intensities, spanning seven orders of magnitude, while current displays typically offer only 256 intensity levels per color. Achieving scotopic vision mode using existing display technology seems challenging due to the high intensity resolution required at extremely low light levels.

- Temporal resolution: How quickly displays need to change their pixels is a critical consideration for effective VR. This aspect is discussed further in Section 6.2, in the context of motion perception.
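To make the intensity-range gap above concrete, the following minimal sketch compares the photon-rate range quoted earlier with a 256-level display. It assumes, purely for illustration, a linear code whose smallest step equals the lowest intensity level; real displays use nonlinear encodings, so the bit count is only a rough indication.

```python
import math

# Photon rates quoted in the text (photons per receptor per second).
lowest_rate = 1 / 100      # one photon every 100 seconds
highest_rate = 100_000

ratio = highest_rate / lowest_rate
orders_of_magnitude = math.log10(ratio)

# Assumption: a linear code whose smallest step equals the lowest level.
bits_for_linear_code = math.ceil(math.log2(ratio))

display_levels = 256       # intensity levels per color, as in the text
print(f"dynamic range: about 10^{orders_of_magnitude:.0f}")
print(f"bits needed for a linear code: {bits_for_linear_code}")
print(f"bits in a {display_levels}-level display: {int(math.log2(display_levels))}")
```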

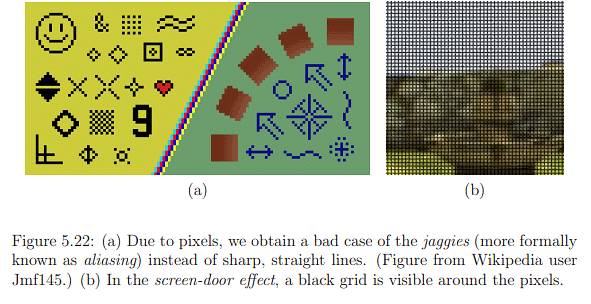

Determining the necessary pixel density is crucial, and this relates to the spatial resolution of a display. Valuable insights into the required spatial resolution can be derived from photoreceptor densities. When a display is highly magnified, individual lights become visible, as illustrated in Figure 4.36. Even when zoomed out, sharp diagonal lines may appear jagged, a phenomenon known as aliasing, as depicted in Figure 5.22(a). Another artifact, the screen-door effect shown in Figure 5.22(b), is often observed in images produced by digital LCD projectors. The question arises: What pixel density is needed to eliminate the perception of individual pixels? In 2010, Steve Jobs of Apple Inc. asserted that 326 pixels per linear inch (PPI) are sufficient, achieving what they termed a retina display. The adequacy of this claim and its relevance to virtual reality (VR) warrant further exploration.

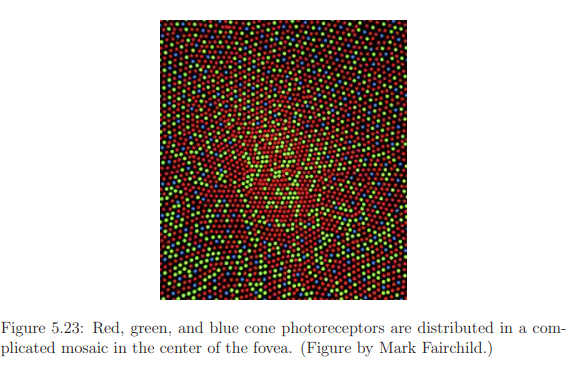

Assuming the fovea is directed straight at the display to optimize sensing, several factors impact the determination of the necessary spatial resolution. The arrangement of red, green, and blue cones in a mosaic pattern, as depicted in Figure 5.23, introduces irregularities different from engineered versions. Vision scientists and neurobiologists gauge effective or perceived input resolution through visual acuity measures in studies. These measures involve tasks like detecting the smallest perceivable dot or recognizing characters on an eye chart. Acuity tasks are influenced by factors such as brightness, contrast, eye movements, exposure time, and the stimulated part of the retina.

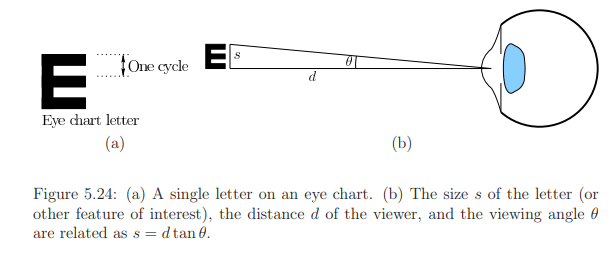

Cycles per degree is a widely used measure: the number of visible stripes or sinusoidal peaks per degree along a viewing arc, illustrated in Figure 5.24. The Snellen eye chart, commonly used by optometrists, requires patients to recognize letters from a specific distance. For individuals with "normal" 20/20 vision, the chart's 20/20 line corresponds to 30 cycles per degree when viewed from 20 feet away. The retina display concept can be applied by considering a person with 20/20 vision viewing a large screen 20 feet away. To achieve 30 cycles per degree, the screen needs at least 60 pixels per degree, since each cycle requires at least two pixels. One degree of visual angle spans about 4.19 inches at that distance (240 inches × tan 1°), so a pixel density of 14.32 PPI is sufficient.

In VR, however, users do not view the screen directly as they would a smartphone; the display is brought close to the eye and viewed through lenses, as demonstrated in Figure 4.30. For instance, if a lens is placed 1.5 inches away from the screen, and considering the same 20/20 vision, the display would need at least 2291.6 PPI to achieve 60 pixels per degree (30 cycles per degree). The resolutions of current consumer VR headsets are therefore insufficient, and achieving retina display resolution would require a PPI several times higher. Additionally, some individuals have superior visual acuity, reaching up to 60 cycles per degree, which further complicates the determination of a satisfactory resolution for VR.
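The pixel densities above follow from simple trigonometry: one degree of visual angle covers a length s = d · tan(1°) on a screen at distance d. The sketch below reproduces both figures under that simplification; the function name `required_ppi` is hypothetical, and treating the lens case as a bare screen at 1.5 inches follows the text's example rather than a full optical model.

```python
import math

def required_ppi(viewing_distance_in, pixels_per_degree=60.0):
    """Pixels per inch needed so that one degree of visual angle spans
    `pixels_per_degree` pixels at the given viewing distance (inches)."""
    # Length on the screen subtended by one degree: s = d * tan(1 degree).
    inches_per_degree = viewing_distance_in * math.tan(math.radians(1.0))
    return pixels_per_degree / inches_per_degree

print(round(required_ppi(240.0), 2))  # large screen viewed from 20 feet
print(round(required_ppi(1.5), 1))    # screen 1.5 inches from the lens
```

Doubling `pixels_per_degree` to 120 models a viewer who can resolve 60 cycles per degree, which doubles the required PPI.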

How much field of view is enough?

If the screen is brought closer to the eye to encompass a larger field of view, considerations based on the photoreceptor density plot in Figure 5.5 and the constraints of eye rotations in Figure 5.21 arise. The maximum potential field of view appears to be approximately 270 degrees, surpassing what flat screens (limited to less than 180 degrees) can offer. Expanding the field of view by reducing the distance between the screen and the eye would demand even higher pixel density. However, the effectiveness of this approach may be hindered by lens aberrations at the periphery, as discussed in Section 4.3. Moreover, if the lens is excessively thick and close to the eye, there is a risk of eyelash interference; though Fresnel lenses offer a thinner alternative, they introduce their own set of artifacts. Consequently, achieving a VR retina display may involve striking a balance between the quality of the optical system and the inherent limitations of the human eye. The use of curved screens might mitigate some of these challenges.

Foveated rendering

The challenge in our analysis is the inability to capitalize on the fact that photoreceptor density diminishes as we move away from the fovea. Maintaining high pixel density across the entire display is necessary since we lack control over the user's gaze. If we could precisely track eye movements and employ a small, movable display consistently positioned in front of the pupil with zero delay, we could significantly reduce the required number of pixels. Such an approach would alleviate computational demands on graphical rendering systems. While physically moving a tiny screen might achieve this, the concept can be simulated by maintaining a fixed display and concentrating graphical rendering solely on the area where the eye is focused. This concept, known as foveated rendering, has demonstrated effectiveness. However, current limitations include high costs and discrepancies in timing and other factors between eye movements and display updates. As technology advances, foveated rendering may emerge as a practical solution for the mass market in the near future.
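As a rough illustration of the idea, the sketch below assigns each pixel a rendering-resolution scale that falls off with angular distance from the tracked gaze point. It is not how any particular headset implements foveated rendering: the function names, the falloff constant, and the minimum scale are hypothetical, the 2.6 degree full-resolution radius is borrowed from the foveal angular range given earlier, and the pixel-to-angle conversion uses a small-angle approximation.

```python
import math

def eccentricity_deg(pixel_xy, gaze_xy, pixels_per_degree):
    """Approximate angular distance (degrees) between a pixel and the gaze
    point, using a small-angle approximation on a flat display."""
    dx = pixel_xy[0] - gaze_xy[0]
    dy = pixel_xy[1] - gaze_xy[1]
    return math.hypot(dx, dy) / pixels_per_degree

def resolution_scale(ecc_deg, foveal_radius_deg=2.6,
                     falloff_per_deg=0.05, min_scale=0.1):
    """Fraction of full rendering resolution to use at a given eccentricity.
    Full resolution inside the assumed foveal radius, then a smooth falloff
    (hypothetical constants) clamped at min_scale."""
    if ecc_deg <= foveal_radius_deg:
        return 1.0
    scale = 1.0 / (1.0 + falloff_per_deg * (ecc_deg - foveal_radius_deg))
    return max(min_scale, scale)

# Example: gaze at the display center, 60 pixels per degree.
gaze = (960, 540)
for pixel in [(960, 540), (1260, 540), (1900, 1000)]:
    ecc = eccentricity_deg(pixel, gaze, 60.0)
    print(pixel, round(ecc, 2), round(resolution_scale(ecc), 3))
```

In practice the gaze point would come from an eye tracker, and any latency between the tracker and the display update would argue for enlarging the full-resolution region.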

VOR gain adaptation

The VOR (vestibulo-ocular reflex) gain is a ratio that compares the eye rotation rate (numerator) to the rotation and translation rate of the head that it counters (denominator). Given that head motion involves six degrees of freedom (DOFs), the VOR gain is broken down into six components. Specifically, for head pitch and yaw, the VOR gain is approximately 1.0. For instance, if you rotate your head to the left at a rate of 10 degrees per second, your eyes will rotate at the same rate but in the opposite direction. The VOR roll gain is very small because the eyes have a limited roll range, and the VOR translational gain depends on the distance to the observed features.
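The yaw example above can be written out as a small calculation. This is a minimal sketch for a single rotational axis; the function names and the sign convention (eye rotation measured relative to the head, with opposite directions having opposite signs) are assumptions made for illustration.

```python
def vor_gain(eye_rate_deg_s, head_rate_deg_s):
    """Ratio of the compensating eye rotation rate to the head rotation rate
    for one axis. The eye rate is measured relative to the head, so a
    perfectly compensating eye has the opposite sign and the gain is 1.0."""
    if head_rate_deg_s == 0:
        raise ValueError("VOR gain is undefined when the head is not rotating")
    return -eye_rate_deg_s / head_rate_deg_s

def residual_gaze_rate_deg_s(head_rate_deg_s, gain):
    """Rate at which the gaze direction still rotates relative to the world
    when the eye compensates with the given gain."""
    return head_rate_deg_s * (1.0 - gain)

# Head yaws at 10 deg/s, eyes counter-rotate at 10 deg/s.
print(vor_gain(-10.0, 10.0))
# With a gain of 0.9, about 1 deg/s of rotation is left uncompensated.
print(residual_gaze_rate_deg_s(10.0, 0.9))
```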

Like other features of our sensory systems, the VOR gain is subject to adaptation. Individuals wearing eyeglasses experience VOR gain adaptation due to the optical transformations outlined in Section 4.2, where lenses influence the field of view, perceived size, and distance of objects. In the context of using a VR headset, potential flaws such as imperfections in the optical system, tracking latency, and inaccurately rendered objects on the screen may trigger adaptation. In such cases, the brain might adjust its perception of stability to compensate for these flaws. Consequently, individuals may perceive the VR experience as normal, and their perception of stability in the real world could become distorted until readaptation occurs. For instance, after a suboptimal VR experience, head movement in the real world might create a sensation that seemingly stationary objects are moving back and forth.

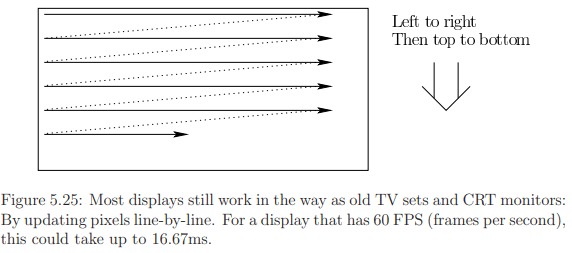

Display scanout

Cameras can have either a rolling or global shutter, depending on whether sensing elements are scanned line-by-line or in parallel. Similarly, displays, functioning as the output analog, employ rolling scanout, or raster scan, for the most part. This process involves updating pixels line by line, a legacy artifact from older TV sets and monitors with cathode ray tubes (CRTs) and phosphor elements that were refreshed by an electron beam.

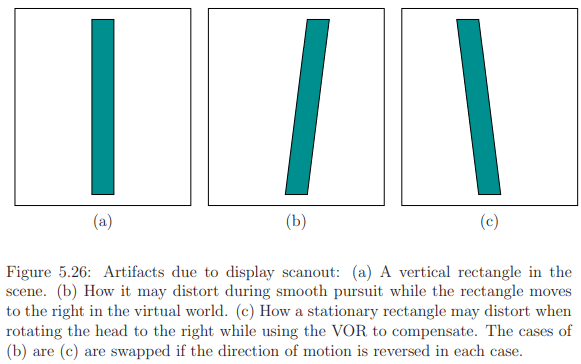

While the slow charge and response time of photoreceptors generally prevent the perception of the scanout pattern during regular use, it becomes noticeable when either our eyes, features in the scene, or both are in motion. An analogy can be drawn with a line-by-line printer, like a receipt printer on a cash register, where pulling on the tape during printing can stretch or slant the lines. Similarly, when our eyes or features in the scene are moving, the rolling scanout can lead to perceptible side effects. For instance, a rectangle may distort during smooth pursuit and Vestibulo-Ocular Reflex (VOR), as illustrated in Figure 5.26.

One proposed solution to address this distortion involves rendering a pre-distorted image that compensates for the effects induced by line-by-line scanout. Achieving this correction demands precise calculations of scanout timings. Another issue with displays is the potential delay in pixel switching (up to 20ms), leading to the perception of blurred edges. Further discussion on these problems will be continued in Section 6.2, focusing on motion perception, and Section 7.4, addressing rendering concerns.
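A minimal sketch of the pre-distortion idea, under strong simplifying assumptions: rows are lit from top to bottom at a uniform rate over one frame time (vertical blanking is ignored), the gaze rotates horizontally at a constant rate relative to the display during the frame, and angles convert to pixels at a constant pixels-per-degree factor. The function name and parameters are hypothetical.

```python
def row_shear_offsets(num_rows, frame_time_s, gaze_rate_deg_s, pixels_per_degree):
    """Horizontal shift (in pixels) to apply to each row so that a feature
    the eye is following lands on the same retinal column in every row.
    Positive values shift in the direction of the gaze rotation."""
    offsets = []
    for row in range(num_rows):
        # Time at which this row is lit, relative to the first row.
        t = frame_time_s * row / num_rows
        # The gaze has rotated by gaze_rate * t by then; shift the row to match.
        offsets.append(gaze_rate_deg_s * t * pixels_per_degree)
    return offsets

# Example: 1080 rows, 60 Hz frame, gaze moving at 30 deg/s, 20 pixels/degree.
offsets = row_shear_offsets(1080, 1 / 60, 30.0, 20.0)
print(round(offsets[0], 2), round(offsets[-1], 2))
```

The last row is shifted by almost the full per-frame gaze displacement, which is why an uncorrected rectangle appears slanted during smooth pursuit or VOR.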

Retinal image slip

Eye movements serve dual purposes: maintaining a target in a fixed position on the retina (smooth pursuit, VOR) and introducing slight changes in its location to prevent perceptual fading (microsaccades). In typical activities (outside of VR), the eyes move, causing the image of a feature to shift slightly on the retina due to various motions and optical distortions, referred to as retinal image slip.

However, when using a VR headset, the movements of image features on the retina may not align with real-world expectations. This discrepancy arises from factors like optical distortions, tracking latency, and display scanout. Consequently, the retinal image slip resulting from VR artifacts diverges from the retinal image slip experienced in the real world. The implications of this misalignment have only begun to be recognized and lack comprehensive scientific characterization. It is probable that these disparities could contribute to fatigue and potentially lead to VR sickness. To illustrate, there is evidence suggesting that microsaccades are triggered by the absence of retinal image slip. This implies that differences in retinal image slip due to VR use may disrupt microsaccade motions, a phenomenon that is not fully understood.

Vergence-accommodation mismatch

Accommodation involves adjusting the optical power of the eye lens to bring nearby objects into focus. Typically, this adjustment occurs when both eyes are fixated on the same object, creating a stereoscopic view that is appropriately focused. In the real world, the vergence motion of the eyes and the accommodation of the lens are closely linked. For instance, if you hold your finger 10cm in front of your face, your eyes will attempt to increase the lens power while strongly converging.

When a lens is positioned at its focal length from a screen, the screen appears in focus to relaxed eyes. However, if an object is rendered on the screen to appear only 10cm away, causing strong eye convergence, the eyes may make an unnecessary attempt to accommodate, leading to a blurred perceived image. This situation is termed vergence-accommodation mismatch, as the VR stimulus contradicts real-world conditions. Even with user adaptation to the mismatch, prolonged use may induce additional strain or fatigue. Essentially, the eyes are being conditioned to separate vergence from accommodation, a departure from their usual coupling. Emerging display technologies may offer some relief, for instance by using eye tracking to estimate vergence and adjusting the power of the optical system accordingly, which can significantly reduce the mismatch. However, they are currently expensive and imprecise.
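The size of the mismatch in the 10cm example can be estimated with a short calculation. This sketch assumes an interpupillary distance of 64mm (a typical adult value, not taken from the text), an object straight ahead of the viewer, and a screen at the lens focal length so that its image lies at optical infinity and demands no accommodation. The function names are hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.064):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at the given distance (assumed IPD of 64 mm)."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))

def accommodation_diopters(distance_m):
    """Added lens power needed to focus at the given distance,
    relative to a relaxed eye focused at infinity."""
    return 1.0 / distance_m

rendered_distance_m = 0.10  # object rendered to appear 10 cm away
print(round(vergence_angle_deg(rendered_distance_m), 1), "degrees of vergence")
print(accommodation_diopters(rendered_distance_m), "diopters implied by vergence")
print(0.0, "diopters actually required by the screen image")
```

In the real world the two quantities change together; in the headset, vergence follows the rendered distance while the focus demand stays fixed.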
