Chi-square and ANOVA | Management Optional Notes for UPSC

Introduction

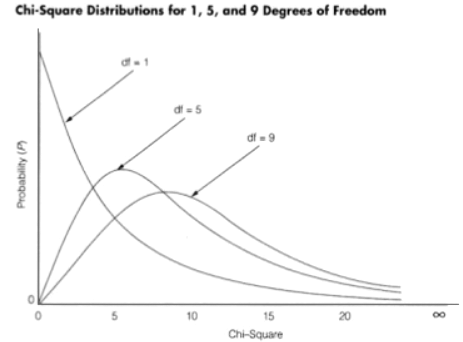

- Procedures based on means and variances apply only to numerical variables; the chi-square test handles categorical data. The chi-square distribution, a theoretical (mathematical) distribution, is widely used in statistical analysis, and among the many significance tests devised by statisticians, the chi-square test is one of the most important. Introduced by Karl Pearson in 1900, this nonparametric test makes no assumption about the form of the population distribution (such as normality) or its parameters. The name 'chi-square' (pronounced with a hard 'ch', as in "kai") comes from the Greek letter χ used to denote this distribution. Because the test statistic is a sum of squared terms, it is written χ².

- The chi-square (χ²) test is a key tool for evaluating the relationship between two categorical variables. It is a nonparametric test: under the null hypothesis, the sampling distribution of its test statistic approximately follows a chi-square distribution.

Chi-Square Formula:

χ² = ∑ ((Observed Value - Expected Value)² / Expected Value)

where the sum runs over all categories (or, for a contingency table, over all cells).

In general, the chi-square test is employed to ascertain whether there exists a significant disparity between the expected frequencies and the observed frequencies across one or more categories.
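The formula can be sketched in a few lines of Python. This is a minimal illustration, assuming the observed and expected frequencies are supplied as equal-length lists; the function name `chi_square_statistic` and the die-roll counts are hypothetical.

```python
def chi_square_statistic(observed, expected):
    """Return the sum of (observed - expected)^2 / expected over all categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    if any(e <= 0 for e in expected):
        raise ValueError("expected frequencies must be positive")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical data: 60 rolls of a die that is assumed fair,
# so each face is expected 60 / 6 = 10 times.
observed = [8, 12, 9, 11, 6, 14]
expected = [10] * 6
print(round(chi_square_statistic(observed, expected), 2))  # 4.2
```

The two largest deviations (faces seen 6 and 14 times) contribute 1.6 each, which shows how squaring lets large discrepancies dominate the total.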

Requirements for the Chi-Square Test:

- Quantitative data in the form of counts: the test analyzes frequencies of categorical outcomes, not measurements.

- One or more categories.

- Independent observations.

- Adequate sample size (at least 10).

- Simple random sample.

- Data in frequency form.

- Use of all observations: every observation must be counted in exactly one category.

Characteristics of a chi-square test:

- The chi-square test is based on frequencies rather than parameters like mean and standard deviation.

- It is utilized for hypothesis testing and is not applicable for estimation.

- The test can be applied to complex contingency tables with numerous classes, rendering it valuable in research endeavors.

- As a non-parametric test, it doesn't necessitate rigid assumptions regarding the population type or parameter values, and it involves relatively fewer mathematical intricacies.

The Chi-Square Statistic:

- The χ² statistic is computed differently from the test statistics used in other hypothesis tests, and under the null hypothesis its sampling distribution approximately follows the theoretical chi-square distribution. Both the goodness-of-fit test and the test of independence use the chi-square statistic, and in both tests every category into which the data are divided enters the calculation.

- The data acquired from the sample represent the observed numbers of cases, which are the frequencies of occurrence for each class. The null hypothesis in chi-square tests specifies the expected number of cases in each category if the hypothesis holds true. Another application of the chi-square test is the test of homogeneity.

The Chi-Square Goodness-of-Fit Test:

- This test commences by postulating that the distribution of a variable adheres to a specific pattern. Goodness of fit (GOF) tests gauge the conformity of a random sample to a theoretical probability distribution function. Typically, a test statistic is formulated as a function of the data, measuring the deviation between the hypothesis and the data.

- Next, assuming the hypothesis is true, one computes the probability of obtaining a value of the test statistic at least as large as the one observed. This probability is the p-value (not the confidence level); a small p-value, for example below 0.05, is evidence against the hypothesized distribution.
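Turning the statistic into that probability requires the upper tail of the chi-square distribution. The sketch below assumes a positive integer number of degrees of freedom (for a goodness-of-fit test, the number of categories minus one) and uses only Python's standard library; `chi_square_sf` is a hypothetical helper, not a library API. Statistical packages provide the same quantity directly, for example `scipy.stats.chi2.sf`.

```python
import math


def chi_square_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df.

    Uses the closed forms of the regularized upper incomplete gamma function
    for integer (even df) and half-integer (odd df) shape parameters.
    """
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    df = int(df)
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{k=0}^{(df-3)/2} (x/2)^(k+1/2) / Gamma(k+3/2)
    total = math.erfc(math.sqrt(half))
    for k in range((df - 1) // 2):
        total += math.exp(-half) * half ** (k + 0.5) / math.gamma(k + 1.5)
    return total


# Hypothetical die-roll counts: 60 rolls, each face expected 10 times if the die is fair.
observed = [8, 12, 9, 11, 6, 14]
expected = [10] * 6
stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
p_value = chi_square_sf(stat, df=len(observed) - 1)
print(f"chi-square = {stat:.2f}, p-value = {p_value:.3f}")
```

Here the p-value is far above 0.05, so these hypothetical counts give no evidence against the hypothesis that the die is fair.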

The chi-square goodness of fit test is appropriate under the following conditions:

- The sampling method is simple random sampling.

- The population is at least 10 times larger than the sample.

- The variable under study is categorical.

- The expected value of the number of sample observations in each level of the variable is at least 5.

Researchers are advised to employ the chi-square test of goodness-of-fit when they have one nominal variable with two or more values. They compare the observed counts of observations in each category with the expected counts, which are calculated using some form of theoretical expectation. If the expected number of observations in any category is too small, the chi-square test may yield inaccurate results, and researchers should utilize an exact test instead. This approach involves four steps: stating the hypotheses, formulating an analysis plan, analyzing sample data, and interpreting results.
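The four steps listed above can be sketched for a hypothetical case in which a firm expects customers to choose among three products in the proportions 50%, 30%, and 20%. The sketch assumes a 5% significance level, uses standard table values of the chi-square critical value for 1 to 6 degrees of freedom, and enforces the rule that every expected count must be at least 5; the function name and data are illustrative.

```python
# Upper 5% critical values of the chi-square distribution, from standard tables.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070, 6: 12.592}


def goodness_of_fit(observed, proportions):
    """Chi-square goodness-of-fit test at alpha = 0.05 (hypothetical helper)."""
    # Step 1: state the hypotheses. H0: the category proportions equal `proportions`.
    if abs(sum(proportions) - 1.0) > 1e-9:
        raise ValueError("hypothesized proportions must sum to 1")
    # Step 2: analysis plan. Use the chi-square statistic with k - 1 degrees
    # of freedom, which is only trustworthy if every expected count is >= 5.
    n = sum(observed)
    expected = [n * p for p in proportions]
    if min(expected) < 5:
        raise ValueError("an expected count is below 5; use an exact test instead")
    df = len(observed) - 1
    if df not in CRITICAL_05:
        raise ValueError("this sketch only tabulates 1 to 6 degrees of freedom")
    # Step 3: analyze the sample data.
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Step 4: interpret. Reject H0 if the statistic exceeds the critical value.
    return stat, df, stat > CRITICAL_05[df]


# Hypothetical sample of 200 customers.
stat, df, reject = goodness_of_fit([90, 70, 40], [0.5, 0.3, 0.2])
print(f"chi-square = {stat:.3f}, df = {df}, reject H0: {reject}")
```

With expected counts of 100, 60, and 40, the statistic is about 2.67, below the critical value of 5.991 for 2 degrees of freedom, so the hypothesized split would not be rejected.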

Understanding the Chi-Square Test: Independence and Homogeneity

The independence test aims to determine whether two attributes are related. Also known as Pearson's Chi-Square, the Chi-Square Test of Independence is a nonparametric statistical analysis method commonly applied in experimental settings where data are in the form of frequencies or counts. Its primary purpose is to assess the likelihood of association or independence between variables.

The Chi-Square Test for Independence is employed under the following conditions:

- Simple random sampling is used as the sampling method.

- Each population is at least 10 times larger than its respective sample.

- The variables under investigation are categorical.

- In the contingency table of sample data, the expected frequency count for each cell is at least 5.

The test of homogeneity, on the other hand, determines whether a characteristic is distributed in the same way across different populations. It compares a single categorical variable across two or more populations to ascertain whether the frequency counts are distributed identically. The conditions for using the Chi-Square Test for Homogeneity are similar to those for the test of independence, and the calculation is the same. The main distinction lies in the null hypothesis: the homogeneity test asserts that the populations are homogeneous (equal) with respect to a particular characteristic, whereas the independence test asserts that two variables measured on a single population are independent.
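Because the independence and homogeneity tests share the same arithmetic, one sketch covers both. It assumes the sample data arrive as a contingency table (a list of rows of counts), computes each expected count as row total × column total ÷ grand total, uses (rows − 1) × (columns − 1) degrees of freedom, and compares the statistic with standard 5% critical values. The function name and the two-region, three-product counts are hypothetical.

```python
# Upper 5% critical values of the chi-square distribution, from standard tables.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def contingency_chi_square(table):
    """Chi-square test of independence (or homogeneity) for a table of counts."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were unrelated.
            expected = row_totals[i] * col_totals[j] / n
            if expected < 5:
                raise ValueError(f"expected count {expected:.2f} in cell ({i}, {j}) is below 5")
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    if df not in CRITICAL_05:
        raise ValueError("this sketch only tabulates 1 to 4 degrees of freedom")
    return stat, df, stat > CRITICAL_05[df]


# Hypothetical counts: rows are two regions, columns are three products.
table = [[30, 20, 10],
         [20, 30, 40]]
stat, df, reject = contingency_chi_square(table)
print(f"chi-square = {stat:.2f}, df = {df}, reject H0: {reject}")
```

Read as an independence test, the rows and columns are two variables recorded on one sample; read as a homogeneity test, each row is a separate sample from its own population. The numbers are identical either way.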

Calculation of Chi-Square:

Each term in the summation is the observed frequency minus the expected frequency, squared, and then divided by the expected frequency. The chi-square value for the test is the sum of these terms over all categories (or over all cells of a contingency table).
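For a contingency table with r rows and c columns, the calculation can be written out as a worked sketch, where O_ij is the observed count in row i and column j, R_i and C_j are the row and column totals, and N is the grand total. The one-cell example uses hypothetical numbers: an observed count of 30 in a cell whose row total is 60 and column total is 50, with N = 150.

```latex
E_{ij} = \frac{R_i \, C_j}{N}, \qquad
\chi^2 = \sum_{i=1}^{r} \sum_{j=1}^{c} \frac{(O_{ij} - E_{ij})^2}{E_{ij}}, \qquad
df = (r - 1)(c - 1)

% One hypothetical cell: O = 30, R = 60, C = 50, N = 150
E = \frac{60 \times 50}{150} = 20, \qquad
\frac{(30 - 20)^2}{20} = \frac{100}{20} = 5
```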

Limitations of the Chi-Square Test:

While the chi-square test is a valuable statistical tool, it does have some limitations:

- The chi-square test does not provide detailed information regarding the strength or substantive significance of the relationship in the population.

- The chi-square test is influenced by sample size. The calculated chi-square value increases with larger sample sizes, regardless of the strength of the relationship between variables.

- The chi-square test is sensitive to small expected frequencies in one or more table cells.
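The sample-size limitation can be illustrated with a small sketch: multiplying every cell of a hypothetical table by 10 keeps the proportions (and hence the strength of the relationship) unchanged, yet the χ² value becomes ten times larger. The helper `independence_statistic` is illustrative and assumes a table of counts given as a list of rows.

```python
def independence_statistic(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    return sum(
        (observed - row_totals[i] * col_totals[j] / n) ** 2
        / (row_totals[i] * col_totals[j] / n)
        for i, row in enumerate(table)
        for j, observed in enumerate(row)
    )


small = [[30, 20, 10], [20, 30, 40]]
large = [[10 * count for count in row] for row in small]  # same proportions, 10x the sample

small_stat = independence_statistic(small)
large_stat = independence_statistic(large)
print(f"{small_stat:.2f} vs {large_stat:.2f}")  # 16.67 vs 166.67
```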

In summary, the chi-square test is a tool data analysts use to compare observed frequencies with those expected under a specific hypothesis. Its two primary types are the goodness-of-fit test and the test of independence (the test of homogeneity uses the same calculation as the independence test). The goodness-of-fit test checks whether a single categorical variable follows a hypothesized distribution, while the test of independence checks whether the observed cross-classification of two variables is consistent with the null hypothesis that they are independent. Although these are different hypothesis tests, both use the chi-square statistic and the chi-square distribution.

ANOVA Test

- Analysis of Variance (ANOVA) is a statistical technique developed by R.A. Fisher in the 1920s, most useful when more than two groups are compared. It assesses the effect of one or more categorical independent variables (factors) on a quantitative dependent variable, helping to identify the influential factors in a dataset; in regression analysis, the same partition of variance is used to test the contribution of the independent variables. ANOVA compares the variance among groups with the variance within groups (random error), and so provides a test of whether the means of several groups are equal, extending the t-test beyond two groups.

- The ANOVA F-test is used to test for significant differences between means. The null hypothesis states that all group means are equal; the alternative hypothesis states that at least two of the means differ. The assumptions of ANOVA are random and independent observations, a normal distribution within each group (or large samples if normality does not hold), and approximately equal variances across groups.
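The variance comparison behind the F-test can be sketched directly: the between-group mean square is divided by the within-group mean square. This minimal version assumes the groups arrive as lists of numbers; the function name and the scores for three hypothetical training methods are illustrative.

```python
def one_way_anova_f(groups):
    """F = (between-group mean square) / (within-group mean square)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean (k - 1 degrees of freedom).
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Random error: variation of observations around their own group mean (N - k df).
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Hypothetical scores under three training methods, five employees each.
groups = [[4, 5, 6, 5, 5], [6, 7, 8, 7, 7], [5, 6, 7, 6, 6]]
print(f"F = {one_way_anova_f(groups):.2f}")  # F = 10.00
```

An F near 1 means the group means vary about as much as random error would predict; a large F points to a real difference between means.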

Steps in ANOVA:

- Data Description

- Assumptions: Model representation and discussion for each design.

- Hypothesis Formulation

- Test Statistic Selection (the variance ratio, F)

- Test Statistic Distribution

- Decision Rule

- Test Statistic Calculation: Results summarized in the ANOVA table, aiding in evaluation.

- Statistical Decision

- Conclusion

- Determination of p-value
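The calculation, table, and decision steps can be sketched together. The code assumes the critical value is looked up in an F table for (k − 1, N − k) degrees of freedom; for three groups of five observations that is F(2, 12), whose 5% critical value is about 3.885. The function name, the table layout, and the data are hypothetical.

```python
def anova_table(groups, f_critical):
    """One-way ANOVA table plus the decision at the level f_critical was taken for."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    ms_between, ms_within = ss_between / df_between, ss_within / df_within
    f_stat = ms_between / ms_within
    rows = [
        ("Between groups", ss_between, df_between, ms_between, f_stat),
        ("Within groups", ss_within, df_within, ms_within, None),
        ("Total", ss_between + ss_within, n_total - 1, None, None),
    ]
    # Decision rule: reject H0 (all means equal) if F exceeds the critical value.
    return rows, f_stat > f_critical


groups = [[4, 5, 6, 5, 5], [6, 7, 8, 7, 7], [5, 6, 7, 6, 6]]
rows, reject = anova_table(groups, f_critical=3.885)  # F(2, 12) at alpha = 0.05
print(f"{'Source':<15}{'SS':>8}{'df':>5}{'MS':>9}{'F':>9}")
for source, ss, df, ms, f in rows:
    ms_text = "" if ms is None else f"{ms:.3f}"
    f_text = "" if f is None else f"{f:.3f}"
    print(f"{source:<15}{ss:>8.3f}{df:>5}{ms_text:>9}{f_text:>9}")
print("Reject H0" if reject else "Do not reject H0")
```

The "Total" row is a built-in check: the between-group and within-group sums of squares must add up to the total variation around the grand mean.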

Types of ANOVA

One-Way ANOVA:

- One-Way ANOVA, the simplest form of ANOVA, examines only one source of variation or factor. It is a common statistical technique used to determine differences among two or more groups. One-Way ANOVA extends the t-test procedure for comparing two independent samples to multiple groups. This analysis is applied when there is a single qualitative variable defining the groups and a single quantitative measurement variable.

- The purpose of One-Way ANOVA is to assess whether the groups, which represent different sources of data, share a common population mean. Its assumptions are random sampling, equal variances across populations, and normally distributed scores within each population.
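The statement that one-way ANOVA extends the two-sample t-test can be checked numerically: with exactly two groups, the ANOVA F statistic equals the square of the pooled-variance t statistic. The sketch below assumes that equal-variance (pooled) form of the t-test; the function names and data are hypothetical.

```python
import math


def pooled_t(a, b):
    """Two-sample t statistic using the pooled (equal-variance) standard error."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    ss_a = sum((x - mean_a) ** 2 for x in a)
    ss_b = sum((x - mean_b) ** 2 for x in b)
    pooled_var = (ss_a + ss_b) / (len(a) + len(b) - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var * (1 / len(a) + 1 / len(b)))


def one_way_f(groups):
    """One-way ANOVA F statistic: between-group MS over within-group MS."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (len(groups) - 1)) / (ss_within / (n_total - len(groups)))


a = [4, 5, 6, 5, 5]
b = [6, 7, 8, 7, 7]
t = pooled_t(a, b)
print(f"t^2 = {t ** 2:.4f}, F = {one_way_f([a, b]):.4f}")  # both 20.0000
```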

Two-Way ANOVA:

- Two-Way ANOVA is employed when an experiment involves a quantitative outcome and two categorical explanatory variables. Each experimental unit can be exposed to any combination of one level of one explanatory variable and one level of the other explanatory variable.

- Two-Way ANOVA has two independent variables (factors), each with multiple levels. Its objective is to determine whether the group means are equal when the data are classified by two characteristics at once.

- In Two-Way ANOVA, the error model adheres to the normal distribution with equal variance for all subjects sharing levels of both explanatory variables. This analysis is suitable for quantitative studies with two or more categorical explanatory variables, maintaining assumptions of normality, equal variance, and independent errors.

Advantages of a Two-Way ANOVA model include:

- Partitioning of the model's fit into the main effect of each factor and an interaction effect; the interaction cannot be tested with a series of one-way ANOVAs.

- Typically requires a smaller total sample size, as two factors are studied simultaneously.

- Reduces unexplained (random) variability, because variation attributable to the second factor is removed from the error term.

- Allows examination of interactions between factors, where a significant interaction indicates that the effect of one variable changes depending on the level of the other factor.
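The partition into main effects and interaction can be sketched for a balanced two-way design with replication (the same number of observations in every cell); balance is a simplifying assumption of this sketch. The hypothetical data cross two advertising levels (factor A) with two price levels (factor B), three observations per cell, and each F is the effect's mean square divided by the error mean square. The function name is illustrative.

```python
def two_way_anova(data):
    """Two-way ANOVA with replication for a balanced design.

    data[i][j] holds the replicate observations for level i of factor A
    and level j of factor B. Returns {effect: (SS, df, F)}.
    """
    a, b, n = len(data), len(data[0]), len(data[0][0])
    if any(len(row) != b for row in data) or any(
        len(cell) != n for row in data for cell in row
    ):
        raise ValueError("this sketch requires a balanced design")
    if n < 2:
        raise ValueError("at least 2 observations per cell are needed")
    cell = [[sum(obs) / n for obs in row] for row in data]
    grand = sum(sum(row) for row in cell) / (a * b)
    mean_a = [sum(row) / b for row in cell]
    mean_b = [sum(cell[i][j] for i in range(a)) / a for j in range(b)]

    # Main effects: spread of each factor's level means around the grand mean.
    ss_a = b * n * sum((m - grand) ** 2 for m in mean_a)
    ss_b = a * n * sum((m - grand) ** 2 for m in mean_b)
    # Interaction: what the cell means show beyond the two main effects.
    ss_ab = n * sum(
        (cell[i][j] - mean_a[i] - mean_b[j] + grand) ** 2
        for i in range(a) for j in range(b)
    )
    # Error: replicates around their own cell mean.
    ss_e = sum(
        (x - cell[i][j]) ** 2
        for i in range(a) for j in range(b) for x in data[i][j]
    )
    df_a, df_b, df_ab, df_e = a - 1, b - 1, (a - 1) * (b - 1), a * b * (n - 1)
    ms_e = ss_e / df_e
    return {
        "A": (ss_a, df_a, (ss_a / df_a) / ms_e),
        "B": (ss_b, df_b, (ss_b / df_b) / ms_e),
        "A x B": (ss_ab, df_ab, (ss_ab / df_ab) / ms_e),
        "Error": (ss_e, df_e, None),
    }


# Hypothetical sales: rows = advertising (low, high), columns = price (low, high).
data = [[[10, 12, 11], [8, 9, 10]],
        [[15, 16, 17], [10, 11, 12]]]
for effect, (ss, df, f) in two_way_anova(data).items():
    print(f"{effect:<6} SS = {ss:6.2f}  df = {df}" + ("" if f is None else f"  F = {f:.2f}"))
```

Each F ratio would then be compared with an F critical value for its degrees of freedom. Here every effect has 1 and 8 degrees of freedom, and the 5% critical value of F(1, 8) is about 5.32, so in this hypothetical data set both main effects and the interaction would be judged significant: the effect of price depends on the level of advertising.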

In summary, Analysis of Variance (ANOVA) is utilized to test the hypothesis of no difference between two or more population means, particularly in comparative experiments where only outcome differences are of interest.
