Emerging Trends Chapter Notes | Computer Science for Class 11 - Humanities/Arts

Table of contents

- Introduction to Emerging Trends

- Artificial Intelligence (AI)

- Big Data

- Internet of Things (IoT)

- Cloud Computing

- Grid Computing

- Blockchains

Introduction to Emerging Trends

Computers have been a part of our lives for a long time, and with each passing day, new technologies and initiatives are being introduced. To grasp the current technologies and have a clearer perspective on the developments happening around us, it is crucial to keep an eye on these emerging trends.

Every day, numerous new technologies are launched. While some of these do not succeed and fade away, others thrive and gain lasting attention from users. Emerging trends represent cutting-edge technologies that gain popularity and set new standards among users. In this chapter, we will explore several emerging trends that are poised to make a significant impact on the digital economy and the way we interact in digital societies in the future.

Artificial Intelligence (AI)

- AI in Everyday Life: AI is behind features like smart maps that find the fastest routes by checking real-time data, and social media photo uploads that automatically tag friends.

- Digital Assistants: AI powers virtual assistants like Siri, Google Now, Cortana, and Alexa, making them capable of understanding and responding to user requests.

- Mimicking Human Intelligence: The goal of AI is to replicate human intelligence in machines, enabling them to learn, make decisions, and solve problems like humans do.

- Knowledge Base: AI systems use a knowledge base, which is a collection of information, facts, and rules, to make informed decisions. This knowledge can be updated and expanded over time.

- Learning from Experience: AI can learn from past experiences and outcomes, allowing it to improve its decision-making abilities in the future.
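
The knowledge-base idea above can be sketched in a few lines. This is a minimal illustration, not how any particular AI product works: the facts, the rules, and the function name `infer` are all invented for the example. It keeps applying "if these facts hold, conclude that" rules until nothing new can be derived.

```python
# Hypothetical facts and rules, invented purely for illustration.
facts = {"has_fever", "has_cough"}

# Each rule: (conditions that must all be known, conclusion to add).
rules = [
    ({"has_fever", "has_cough"}, "may_have_flu"),
    ({"may_have_flu"}, "advise_rest"),
]


def infer(facts, rules):
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = infer(facts, rules)
print(sorted(derived))
```

Adding a new fact or rule to the lists changes what the system can conclude, which is the sense in which a knowledge base can be updated and expanded over time.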

Machine Learning

Machine Learning is a branch of Artificial Intelligence in which computers learn from data using statistical methods, without being explicitly programmed for the task. Its algorithms produce models that are trained on one dataset (the training data) and tested on a separate one (the testing data). Once a model reaches an acceptable level of accuracy, it is used to make predictions on new, unseen data.
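
As a rough sketch of the train, test, and predict cycle, the example below uses a very simple model, a nearest-neighbour classifier, written in plain Python. The data values and the names `predict` and `accuracy` are assumptions made for illustration; real projects normally rely on a machine learning library and far more data.

```python
# Toy data invented for illustration:
# (hours_studied, hours_slept) -> passed the test (1) or not (0).
train = [((1.0, 4.0), 0), ((2.0, 5.0), 0), ((3.0, 5.0), 0),
         ((6.0, 7.0), 1), ((7.0, 8.0), 1), ((8.0, 7.0), 1)]
test = [((1.5, 4.5), 0), ((7.5, 7.5), 1), ((2.5, 6.0), 0), ((6.5, 8.0), 1)]


def predict(point, training_data):
    """Return the label of the training example closest to `point`."""
    def squared_distance(example):
        features, _label = example
        return sum((a - b) ** 2 for a, b in zip(features, point))

    _features, label = min(training_data, key=squared_distance)
    return label


def accuracy(test_data, training_data):
    """Fraction of test examples whose predicted label matches the true one."""
    correct = sum(predict(x, training_data) == y for x, y in test_data)
    return correct / len(test_data)


test_accuracy = accuracy(test, train)
print(f"Accuracy on test data: {test_accuracy:.2f}")
print("Prediction for new data (5.0, 6.5):", predict((5.0, 6.5), train))
```

The test data is kept separate from the training data so that the accuracy figure reflects how the model handles examples it has not seen before.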

Natural Language Processing (NLP)

NLP is a technology that enables computers to understand and interact with humans using natural languages like Hindi and English. It powers features like predictive typing and spell checking in search engines. NLP allows voice-based web searches and device control, as well as text-to-speech and speech-to-text conversions.

- Machine Translation: Machines can now translate texts between languages with good accuracy.

- Automated Customer Service: Software can interact with customers to address their queries and complaints.
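
Predictive typing, mentioned above, can be approximated by counting which word most often follows another in sample text. The sketch below is a deliberately small illustration; the corpus and the names `build_model` and `suggest` are invented, and production keyboards use much larger language models.

```python
from collections import Counter, defaultdict

# Tiny sample corpus, invented for illustration.
corpus = (
    "good morning everyone . good morning class . "
    "good night everyone . have a good day"
)


def build_model(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def suggest(model, word, k=2):
    """Return up to k words that most often followed `word` in the corpus."""
    counts = model.get(word.lower(), Counter())
    return [candidate for candidate, _count in counts.most_common(k)]


model = build_model(corpus)
print(suggest(model, "good"))
```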

Immersive Experiences

Immersive experiences involve the use of technology to create environments that stimulate our senses, making interactions more engaging and realistic. This concept has been applied in various fields, including training, gaming, and entertainment.

(A) Virtual Reality

- Virtual Reality (VR) is a technology that creates a computer-generated, three-dimensional environment that simulates the real world. Users can interact with and explore this virtual environment, which can be experienced through VR headsets.

- VR works by presenting our senses with artificial information, altering our perception of reality. To enhance the realism of VR experiences, additional sensory inputs such as sound, smell, motion, and temperature can be incorporated.

This technology is relatively new and has found applications in various fields, including:

- Gaming: VR provides immersive gaming experiences, allowing players to interact with virtual worlds in a realistic way.

- Military Training: VR is used to simulate real-life scenarios for training purposes, helping soldiers prepare for various situations.

- Medical Procedures: VR can be used for training medical professionals in surgical procedures and other medical interventions.

- Entertainment: VR offers new forms of entertainment, including virtual tours and experiences.

- Social Science and Psychology: Researchers use VR to study human behavior and conduct experiments in controlled virtual environments.

- Engineering: VR can assist engineers in visualizing and simulating projects before they are implemented.

(B) Augmented Reality

Augmented Reality (AR) involves overlaying computer-generated perceptual information onto the existing physical environment. This technology enhances the physical world by adding digital components along with the associated tactile and sensory elements, making the environment interactive and digitally manipulable.

- Information Access: Users can access information about nearby places based on their current location. AR apps provide details about locations, allowing users to make choices based on user reviews.

- Location-Based AR Apps: These apps are a major form of AR technology. Travelers can access real-time information about historical sites by simply pointing their camera at the subjects. This feature enhances the travel experience by providing instant information.

Unlike Virtual Reality, which creates an entirely new environment, Augmented Reality enhances the perception of the physical world by adding supplementary information. It does not create something new but rather augments the existing reality with additional data.

Robotics

Robotics is a multidisciplinary field that combines mechanical engineering, electronics, computer science, and other disciplines. It focuses on the design, fabrication, operation, and application of robots. Robotics plays a crucial role in various industries, including manufacturing, healthcare, and space exploration.

A robot is a machine designed to perform one or more tasks automatically with high accuracy and precision. What sets robots apart from other machines is their programmability; they can be instructed by a computer to follow specific guidelines. Robots were originally envisioned for repetitive industrial tasks that are either monotonous, stressful for humans, or require significant labor.

Sensors are among the essential components of a robot: they allow it to perceive and interact with its environment. There are various types of robots, including:

- Wheeled Robots

- Legged Robots

- Manipulators

- Humanoids

Humanoids are robots designed to resemble human beings. Robots are increasingly being utilized in various fields such as industry, medical science, bionics, scientific research, and the military.

Some notable examples of robotic applications include:

- NASA's Mars Exploration Rover (MER) mission, in which two robotic rovers, Spirit and Opportunity, studied the planet Mars.

- Sophia, a humanoid robot that utilizes artificial intelligence, visual data processing, and facial recognition to mimic human gestures and expressions.

- Drones, which are unmanned aircraft that can be remotely controlled or fly autonomously using pre-programmed flight plans. Drones are used in various fields such as journalism, aerial photography, disaster management, healthcare, and wildlife monitoring, among others.

Big Data

With technology penetrating nearly every aspect of our lives, data is being generated at an unprecedented rate. There are now several billion Internet users, and a significant portion of web traffic comes from smartphones. By a widely cited estimate, approximately 2.5 quintillion (2.5 × 10^18) bytes of data are created daily, a figure that is rapidly increasing with the ongoing expansion of the Internet of Things (IoT).

This phenomenon leads to the creation of data sets that are not only vast in volume but also complex in nature, known as Big Data. Traditional data processing tools are inadequate for handling such data due to its sheer size and unstructured form. Big Data encompasses various types of information such as social media posts, instant messages, photographs, tweets, blog articles, news articles, opinion polls and their comments, and audio/video chats.

Moreover, Big Data presents numerous challenges including integration, storage, analysis, search, processing, transfer, querying, and visualization. Despite these challenges, Big Data holds significant potential for valuable insights and knowledge, prompting ongoing efforts to develop effective software and methodologies for its processing and analysis.

Characteristics of Big Data

Big Data is characterized by the following five features that set it apart from traditional data:

- Volume: The most significant feature of big data is its massive size. When a dataset is so large that traditional Database Management System (DBMS) tools struggle to process it, it qualifies as big data.

- Velocity: This refers to the speed at which data is generated and stored. Big data is produced and stored at a vastly higher rate compared to traditional data sets.

- Variety: Big data encompasses a wide range of data types, including structured, semi-structured, and unstructured data. Examples include text, images, videos, and web pages.

- Veracity: Big data can sometimes be inconsistent, biased, noisy, or contain abnormalities due to issues in data collection methods. Veracity pertains to the trustworthiness of the data, as processing incorrect data can lead to misleading results and interpretations.

- Value: Big data is not just a vast amount of data; it also contains hidden patterns and valuable insights that can significantly benefit businesses. However, before investing resources in processing big data, it is essential to assess its potential for value discovery to avoid futile efforts.

Data Analytics

Data analytics involves the examination of data sets to draw conclusions about the information they contain, using specialized systems and software. The technologies and techniques for data analytics are gaining popularity and are used in various industries to facilitate informed business decisions. In scientific research, data analytics helps validate or refute models, theories, and hypotheses.

Pandas, a library in the Python programming language, is a useful tool for simplifying data analysis processes.
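
A typical analytics step is to group records and summarise each group. The sketch below does this with Python's standard library only, so that it runs without installing anything; the sales figures and the helper name `average_by` are invented for illustration. In Pandas, the same grouping is usually written with `DataFrame.groupby`.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical sales records, invented for illustration.
raw = """city,month,sales
Delhi,Jan,120
Delhi,Feb,150
Mumbai,Jan,200
Mumbai,Feb,180
"""


def average_by(rows, key, value):
    """Group rows by `key` and return the mean of `value` for each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(float(row[value]))
    return {name: mean(values) for name, values in groups.items()}


rows = list(csv.DictReader(io.StringIO(raw)))
average_sales = average_by(rows, "city", "sales")
print(average_sales)
```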

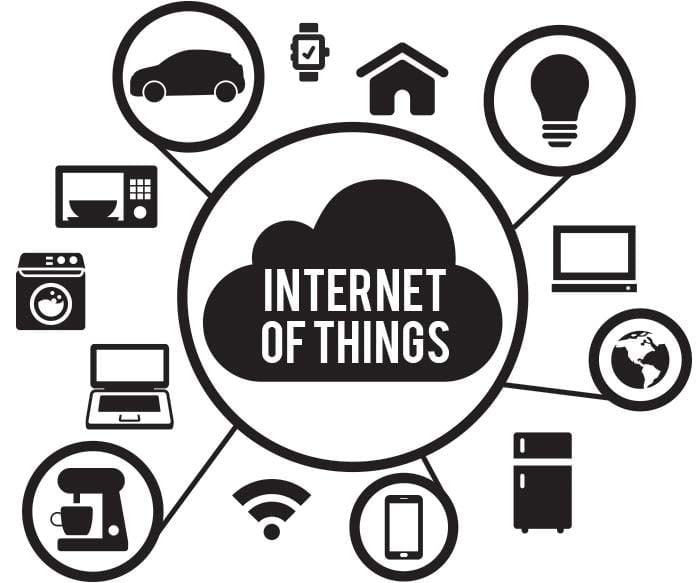

Internet of Things (IoT)

The Internet of Things (IoT) refers to a network of devices connected through hardware and software that allow them to communicate and exchange data with each other. In a typical household, many devices like microwaves, air conditioners, door locks, and CCTV cameras have advanced microcontrollers and software, but they usually operate separately and require human intervention to function. IoT aims to bring these devices together to create an intelligent network where they can work collaboratively and assist each other. For instance, if these devices are enabled to connect to the Internet, users can access and control them remotely using their smartphones.

Web of Things (WoT)

The Internet of Things lets us connect to and control devices over the Internet from a smartphone or computer, forming a personal network. Today, however, communicating with several devices typically means installing a separate app for each one. It would be far easier to manage them all from a single interface.

The web already serves as a platform through which people communicate with one another. The Web of Things (WoT) extends that idea to devices: it uses web services to connect anything in the physical world, in addition to the people already on the web. This integration is expected to lead to smart homes, smart offices, smart cities, and beyond.

Sensors

When you change the orientation of your mobile phone from vertical to horizontal (or vice versa), the display automatically adjusts to match the new orientation. This feature is made possible by two sensors: the accelerometer and the gyroscope.

- The accelerometer sensor detects the phone's orientation, while the gyroscope sensor tracks the rotation or twist of your hand, providing additional information to the accelerometer.
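
The orientation check can be pictured with a simplified sketch. It assumes the accelerometer reports gravity along the phone's short edge (x) and long edge (y) in m/s²; this axis convention and the function name `screen_orientation` are assumptions for illustration. Real phones also combine gyroscope data and smooth the readings before rotating the display.

```python
def screen_orientation(ax, ay):
    """Guess the screen orientation from gravity along the phone's x and y axes.

    ax, ay: acceleration in m/s^2 along the short (x) and long (y) edge of
    the phone. When the phone is held upright, gravity acts mostly along y.
    """
    return "portrait" if abs(ay) >= abs(ax) else "landscape"


print(screen_orientation(0.3, 9.7))  # phone held upright
print(screen_orientation(9.6, 0.5))  # phone turned on its side
```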

Sensors are commonly used for monitoring and observing in real-world applications, and the development of smart electronic sensors is significantly contributing to the advancement of the Internet of Things (IoT). This progress will lead to the creation of new sensor-based intelligent systems.

A smart sensor is a device that gathers input from the physical environment and utilizes its built-in computing resources to perform predefined functions upon detecting specific input. It processes the data before passing it on.
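
One way to picture "processing the data before passing it on" is a sensor that averages its recent readings and reports only when the average crosses a limit. The class below is a hypothetical sketch; the class name, the threshold, and the readings are all invented for illustration.

```python
class SmartTemperatureSensor:
    """Hypothetical smart sensor: smooths raw readings and reports only alerts."""

    def __init__(self, threshold_celsius, window=3):
        self.threshold = threshold_celsius
        self.window = window      # number of recent readings to average
        self.readings = []

    def read(self, raw_value):
        """Process one raw reading; return an alert message or None."""
        self.readings.append(raw_value)
        recent = self.readings[-self.window:]
        average = sum(recent) / len(recent)
        if average > self.threshold:
            return f"ALERT: average {average:.1f} C exceeds {self.threshold} C"
        return None


sensor = SmartTemperatureSensor(threshold_celsius=50)
messages = [sensor.read(value) for value in (30, 32, 60, 95, 97)]
print([m for m in messages if m is not None])
```

Averaging over a small window means a single unusual reading does not trigger an alert on its own, so less data needs to leave the sensor.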

Smart Cities

Smart cities are a response to the challenges posed by rapid urbanization, which increases the demand on various urban resources and infrastructure. As cities grow, they face difficulties in managing essential resources such as land, water, waste, air quality, health and sanitation, traffic congestion, public safety, and security. Additionally, the overall infrastructure of cities, including roads, railways, bridges, electricity, subways, and disaster management systems, is under strain.

To address these challenges and ensure that cities remain sustainable and livable, planners around the world are exploring smarter ways to manage urban resources and services.

A smart city leverages computer and communication technology, along with the Internet of Things (IoT), to efficiently manage and distribute resources. For example:

- Smart Buildings: These structures use sensors to detect earthquake tremors and alert nearby buildings to prepare for potential impacts.

- Smart Bridges: Wireless sensors monitor the condition of bridges by checking for loose bolts, damaged cables, or cracks. If any issues are detected, the system sends alerts to the relevant authorities via SMS.

- Smart Tunnels: Similar to smart bridges, smart tunnels utilize wireless sensors to identify leaks or congestion within the tunnel. This information is transmitted as wireless signals to a centralized computer for analysis.

In a smart city, various sectors such as transportation, power generation, water supply, waste management, law enforcement, information systems, education, healthcare, and community services work together seamlessly. This integrated approach optimizes the efficiency of city operations and services, ensuring that resources are used effectively and that residents have access to the services they need.

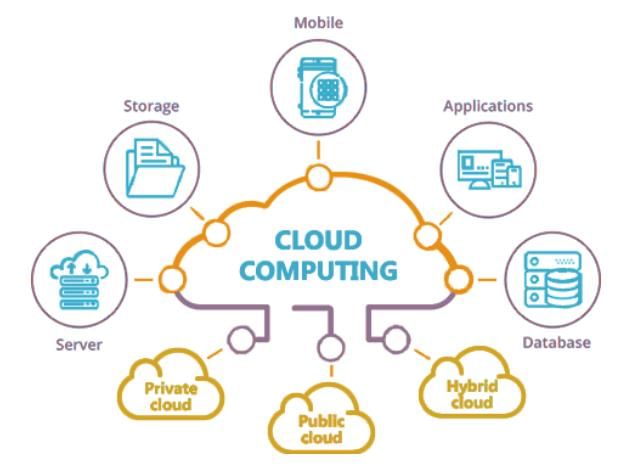

Cloud Computing

Cloud computing is a growing trend in information technology where services like software, hardware, databases, and storage are provided over the Internet, allowing users to access them from anywhere using any device. These services are offered by companies known as cloud service providers, usually on a pay-per-use basis, similar to how we pay for electricity.

With cloud computing, users can run large applications or process vast amounts of data without needing the necessary storage or processing power on their personal computers, as long as they have an Internet connection. This approach is cost-effective and provides on-demand resources, allowing users to access what they need at a reasonable price.
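
The pay-per-use idea comes down to simple arithmetic, sketched below. Both prices are hypothetical and chosen only to show the calculation; actual cloud pricing varies by provider and service.

```python
# All prices below are hypothetical, chosen only to illustrate the arithmetic.
HOURLY_RATE = 8.0        # cost of one cloud server-hour
SERVER_PRICE = 60000.0   # upfront cost of buying an equivalent server


def pay_per_use_cost(hours_used, hourly_rate=HOURLY_RATE):
    """Cost when billed only for the hours actually used."""
    return hours_used * hourly_rate


def break_even_hours(server_price=SERVER_PRICE, hourly_rate=HOURLY_RATE):
    """Hours of use after which buying the server would have been cheaper."""
    return server_price / hourly_rate


monthly_cost = pay_per_use_cost(hours_used=100)
print(monthly_cost, break_even_hours())
```

With these made-up numbers, a user who needs the server for only 100 hours a month pays a small fraction of the purchase price, which is why the model suits occasional or unpredictable workloads.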

We already use cloud services when we store our photos and files online for backup or host a website on the Internet.

Cloud Services

To better understand the cloud, it's helpful to think of everything as a service. A "service" refers to any facility provided by the cloud. There are three standard models to categorize different computing services delivered through the cloud:

(A) Infrastructure as a Service (IaaS)

- IaaS providers offer various types of computing infrastructure, including servers, virtual machines (VMs), storage and backup facilities, network components, operating systems, and other hardware or software. By using IaaS from the cloud, users can access hardware infrastructure located remotely to configure, deploy, and run software applications. This allows them to outsource hardware and software on demand and pay based on usage, saving costs on software, hardware, setup, maintenance, and security.

(B) Platform as a Service (PaaS)

- PaaS offers a cloud-based platform where users can install and run applications without the hassle of managing the underlying infrastructure. It provides an environment for developing, testing, and deploying software applications. For instance, if we create a web application using MySQL and Python, PaaS allows us to run it on a pre-configured server with these technologies already installed, such as an Apache server with MySQL and Python. This eliminates the need for manual installation and configuration.

- With PaaS, users have full control over their deployed applications and configurations while benefiting from reduced costs and simplified management of hardware and software.

(C) Software as a Service (SaaS)

- SaaS offers on-demand access to application software, typically through licensing or subscription. When we edit documents online in Google Docs or Microsoft Office 365, or store files in Dropbox, we are using SaaS from the cloud. In this model, users need not install or configure the software themselves; they only need access to it. As with PaaS, users can still adjust the configuration settings of the application they are using.

All these service models allow users to access infrastructure, platforms, or software on demand, usually charged based on usage. This eliminates the need for significant upfront investments, making it easier for new or evolving organizations. To leverage the benefits of cloud computing, the Government of India has initiated the "GI Cloud" project, known as 'MeghRaj' ( https://cloud.gov.in ).

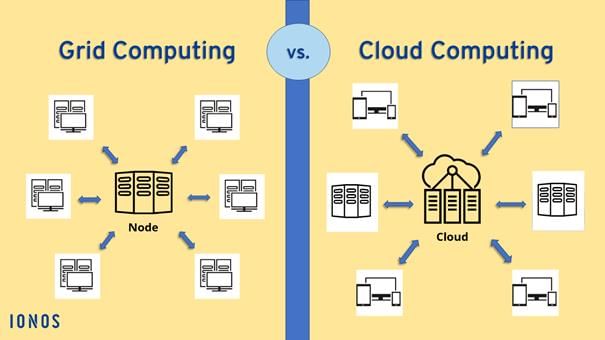

Grid Computing

Grid computing involves a network of computers that are spread out over different locations and have various types of hardware and software. The main idea is to combine these resources to work on a big task as if they were one powerful supercomputer. Each individual computer in the network is called a "node." These nodes come together temporarily to collaborate on a large project, pooling their processing power and storage capacity. This approach is different from cloud computing, which focuses on providing services. Grid computing is more specialized for specific applications and tasks that require significant computational resources.

Types of Grid Computing:

- Data Grid: This type of grid is used to manage large amounts of distributed data that need to be accessed by multiple users.

- CPU or Processor Grid: In this type, processing tasks are moved from one computer to another as needed. A large task can be divided into smaller subtasks and processed in parallel by different nodes.
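
Dividing a large task into subtasks and combining the partial results can be sketched on a single machine. In the example below, worker threads stand in for grid nodes; this is only an analogy, since a real grid spreads the work across separate computers using middleware. The function names are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split(numbers, parts):
    """Divide the list into at most `parts` roughly equal chunks (the subtasks)."""
    size = max(1, -(-len(numbers) // parts))  # ceiling division, at least 1
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def sum_of_squares(chunk):
    """The work done by one node on its share of the data."""
    return sum(n * n for n in chunk)


def grid_sum_of_squares(numbers, nodes=4):
    """Hand the chunks to worker threads and combine the partial results."""
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        partial_results = pool.map(sum_of_squares, split(numbers, nodes))
        return sum(partial_results)


numbers = list(range(1, 1001))
total = grid_sum_of_squares(numbers)
print(total)
```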

Key Differences: Grid Computing vs. IaaS Cloud Service

- Grid Computing: In grid computing, multiple nodes come together to solve a common problem.

- IaaS Cloud Service: Here, a service provider rents out the necessary infrastructure to users.

Setting Up a Grid:

- To establish a grid, numerous nodes need to be connected for both data and CPU resources. Middleware is required to implement this distributed processing architecture.

- Globus Toolkit: One example of middleware used for building grids is the Globus Toolkit. This open-source software includes tools for security, resource management, data management, communication, and fault detection, among other functions.

Blockchains

Blockchain technology represents a significant shift from traditional digital transaction methods. Let's explore how it works and its potential applications in various fields.

Traditional Digital Transactions

- In traditional systems, digital transactions are managed through a centralized database.

- Organizations like ticket booking websites and banks update this central database with each transaction.

- However, storing data in one central location poses risks of hacking and data loss.

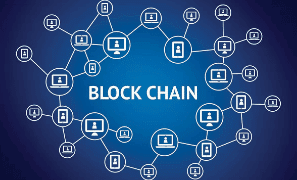

Blockchain Technology Overview

- Blockchain operates on the principle of a decentralized and shared database.

- Each computer (or node) in the network possesses a complete copy of the database.

- A block in the blockchain represents a secure chunk of data or a valid transaction.

- Each block contains a header with visible data, while the private data is accessible only to the block's owner.

- These blocks are linked together, forming a chain, hence the term "blockchain."
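
The notes above do not say how blocks are linked. A common approach, assumed in this sketch, is for each block to store a cryptographic hash of the block before it, so that changing an earlier block breaks the link. The field names and helper functions below are invented for illustration and leave out everything else a real blockchain needs.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 hash of a block's contents (everything except its own hash)."""
    payload = {k: block[k] for k in ("index", "data", "previous_hash")}
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def add_block(chain, data):
    """Append a block that stores the hash of the block before it."""
    previous_hash = chain[-1]["hash"] if chain else "0" * 64
    block = {"index": len(chain), "data": data, "previous_hash": previous_hash}
    block["hash"] = block_hash(block)
    chain.append(block)
    return block


def is_valid(chain):
    """Check every block's own hash and its link to the previous block."""
    for i, block in enumerate(chain):
        if block["hash"] != block_hash(block):
            return False
        if i > 0 and block["previous_hash"] != chain[i - 1]["hash"]:
            return False
    return True


chain = []
add_block(chain, "A pays B 10")
add_block(chain, "B pays C 4")
print(is_valid(chain))

chain[0]["data"] = "A pays B 1000"  # tamper with an earlier block
print(is_valid(chain))
```

The second check fails because the stored hash of the first block no longer matches its altered contents.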

Transaction Process in Blockchain

- A transaction request is initiated.

- The request is broadcast to all nodes in the network.

- If all nodes verify the request, the transaction is recorded in a new block, which is added to the existing chain.

- The transaction is considered complete.
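
The steps above can be sketched as a small simulation. It follows the simplest reading of the process, in which every node must verify a request before it is recorded; real blockchain networks use more elaborate consensus protocols, and grouping transactions into blocks is left out here. The `Node` class, the balances, and the `submit` function are hypothetical.

```python
class Node:
    """Hypothetical network node holding its own copy of the ledger."""

    def __init__(self, name, balances):
        self.name = name
        self.balances = dict(balances)
        self.ledger = []

    def verify(self, tx):
        """A transaction is valid if the sender can afford the amount."""
        available = self.balances.get(tx["sender"], 0)
        return tx["amount"] > 0 and available >= tx["amount"]

    def apply(self, tx):
        """Record the transaction and update this node's copy of the balances."""
        self.balances[tx["sender"]] -= tx["amount"]
        self.balances[tx["receiver"]] = (
            self.balances.get(tx["receiver"], 0) + tx["amount"]
        )
        self.ledger.append(tx)


def submit(tx, nodes):
    """Broadcast tx to every node; record it only if all nodes verify it."""
    if all(node.verify(tx) for node in nodes):
        for node in nodes:
            node.apply(tx)
        return True
    return False


start = {"A": 50, "B": 0}
nodes = [Node(f"node{i}", start) for i in range(3)]
accepted = submit({"sender": "A", "receiver": "B", "amount": 30}, nodes)
rejected = submit({"sender": "A", "receiver": "B", "amount": 40}, nodes)
print(accepted, rejected, len(nodes[0].ledger))
```

The second request is refused because, after the first transfer, no node's copy of the balances shows enough funds for the sender.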

Key Features of Blockchain

- Each participating computer maintains an updated and secure ledger.

- The ledger is "append-only": new blocks can be added, but existing ones cannot be altered or deleted, and a block is appended only after consensus among the nodes.

- This decentralized approach enhances safety and security, as no single member can alter the data.

Applications of Blockchain Technology

1. Digital Currency

- Blockchain is widely known for its role in supporting digital currencies like Bitcoin.

2. Healthcare

- Improved data sharing among healthcare providers can lead to accurate diagnoses, effective treatments, and cost-efficient care.

3. Land Registration

- Blockchain can help maintain land registration records, reducing disputes related to land ownership and encroachments.

4. Voting Systems

- A blockchain-based voting system can enhance transparency and authenticity by preventing vote alterations.

5. Diverse Sectors

- Blockchain technology has the potential to improve transparency, accountability, and efficiency across various sectors and governance systems.
