Cache Memory | Famous Books for UPSC Exam (Summary & Tests)

Table of contents

- Introduction

- Characteristics of Cache Memory

- Levels of Memory

- Cache Performance

- Cache Mapping

- Application of Cache Memory

- Advantages of Cache Memory

- Disadvantages of Cache Memory

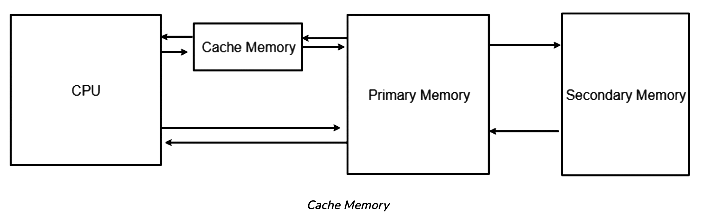

Introduction

- Cache memory is a specialized, very high-speed memory unit.

- It is smaller and faster than main memory, and it holds copies of data from frequently accessed main memory locations.

- A CPU typically contains several independent caches, which store instructions and data.

- The primary function of cache memory is to reduce the average time required to access data from main memory.

Characteristics of Cache Memory

- Cache memory functions as a rapid intermediary between the CPU and RAM.

- It retains frequently accessed data and instructions, ensuring their instant availability to the CPU.

- Although pricier than main or disk memory, cache memory remains more budget-friendly than CPU registers.

- Its purpose is to speed up memory access so that memory can keep pace with a high-speed CPU.

Levels of Memory

- Level 1 or Register: Registers are storage locations inside the CPU that hold the data the processor is working on at that moment. Common registers include the Accumulator, Program Counter, and Address Register.

- Level 2 or Cache Memory: The fastest memory after registers, it temporarily holds data for quicker retrieval.

- Level 3 or Main Memory: This is the memory the computer actively works from. It is smaller than secondary memory, and data does not persist once power is cut off.

- Level 4 or Secondary Memory: External memory with slower access compared to main memory. Data remains permanently stored here.

Cache Performance

- Processor's Interaction with Main Memory: Before accessing main memory, the processor checks for a matching entry in the cache.

- Cache Hit: When the processor locates the memory location in the cache, it signifies a Cache Hit, and data is retrieved from the cache.

- Cache Miss: If the memory location is not found in the cache, a Cache Miss occurs. In this case, the cache allocates a new entry and copies data from main memory, fulfilling the request from the cache contents.

- Performance Measurement: Cache memory performance is often evaluated using a metric called Hit ratio.

Hit Ratio (H) = hits / (hits + misses) = number of hits / total accesses

Miss Ratio = misses / (hits + misses) = number of misses / total accesses = 1 - Hit Ratio (H)
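The two ratios above can be computed directly from access counts. The following is a minimal sketch; the function name and the sample counts (90 hits, 10 misses) are illustrative assumptions, not values from the text.

```python
def hit_and_miss_ratio(hits: int, misses: int) -> tuple[float, float]:
    """Return (hit ratio, miss ratio) for the given access counts."""
    total = hits + misses  # total accesses = hits + misses
    if total == 0:
        raise ValueError("at least one access is required")
    hit_ratio = hits / total
    # The miss ratio is the complement of the hit ratio.
    return hit_ratio, 1.0 - hit_ratio


# Hypothetical counts: 90 hits and 10 misses out of 100 accesses.
h, m = hit_and_miss_ratio(hits=90, misses=10)
print(f"hit ratio = {h:.2f}, miss ratio = {m:.2f}")
```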

Enhanced Cache Performance Strategies:

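- Increase Cache Block Size: A larger cache block brings in more neighbouring data on each miss, which can lower the miss rate, although very large blocks make each miss more expensive.

- Higher Associativity: Allowing a block to occupy more than one possible line reduces the misses caused by blocks competing for the same line.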

- Minimize Miss Rate: Strategies should focus on reducing the cache miss rate.

- Decrease Miss Penalty: Efforts should be made to minimize the penalty incurred during cache misses.

- Reduce Time to Hit: Streamlining processes to decrease the time taken to access data in the cache can boost performance.
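The last three strategies can be tied together with the standard textbook formula for average memory access time, which is not stated in the text above: average access time = hit time + miss rate × miss penalty. The sketch below assumes that formula; the function name and all cycle counts are hypothetical.

```python
def average_access_time(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average access time = hit time + miss rate * miss penalty (textbook formula)."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Hypothetical figures, in clock cycles.
baseline = average_access_time(hit_time=1.0, miss_rate=0.25, miss_penalty=80.0)
fewer_misses = average_access_time(hit_time=1.0, miss_rate=0.125, miss_penalty=80.0)
cheaper_misses = average_access_time(hit_time=1.0, miss_rate=0.25, miss_penalty=40.0)
faster_hits = average_access_time(hit_time=0.5, miss_rate=0.25, miss_penalty=80.0)

for label, value in [
    ("baseline", baseline),
    ("lower miss rate", fewer_misses),
    ("lower miss penalty", cheaper_misses),
    ("lower hit time", faster_hits),
]:
    print(f"{label}: {value:.1f} cycles")
```

Each of the three variations changes one term of the formula, which is why they appear as separate strategies in the list.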

Cache Mapping

Cache Memory Mapping Types:

- Direct Mapping

- Associative Mapping

- Set-Associative Mapping

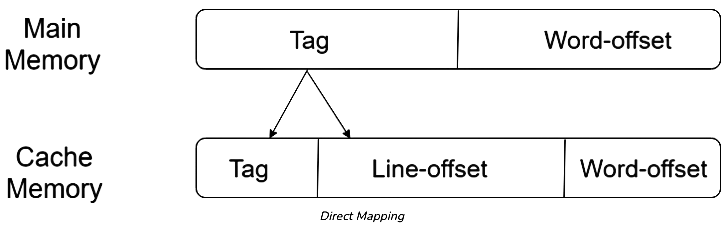

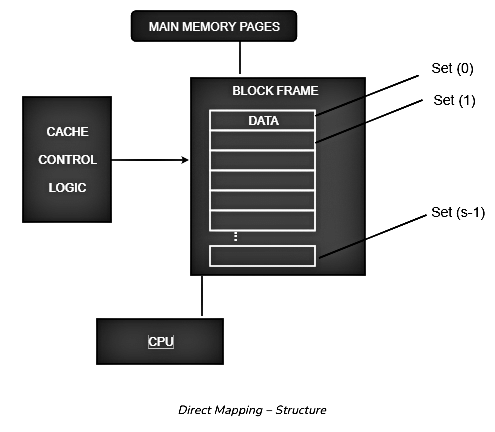

1. Direct Mapping

Direct mapping, the most basic technique, assigns each block of main memory to exactly one cache line. If that line already contains a memory block when a new block needs to be loaded, the existing block is replaced. The memory address is split into two components: an index field, which selects the cache line, and a tag field, which the cache stores alongside the data to identify which memory block currently occupies that line. The performance of direct mapping depends directly on the hit ratio.

i = j modulo m

where

i = cache line number

j = main memory block number

m = number of lines in the cache
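To see the rule i = j modulo m and the replacement behaviour in action, here is a minimal simulation sketch. It tracks only block numbers rather than data, and the function name and access trace are illustrative assumptions.

```python
def simulate_direct_mapped(block_trace, num_lines):
    """Count hits and misses for a trace of main memory block numbers."""
    lines = [None] * num_lines  # block number currently held by each cache line
    hits = misses = 0
    for j in block_trace:
        i = j % num_lines  # i = j modulo m
        if lines[i] == j:
            hits += 1
        else:
            misses += 1
            lines[i] = j  # the existing block in this line is replaced
    return hits, misses


# Blocks 0 and 4 both map to line 0 of a 4-line cache, so they evict each other.
trace = [0, 4, 0, 4, 1, 1]
print(simulate_direct_mapped(trace, num_lines=4))  # prints (1, 5)
```

Only the second access to block 1 hits; blocks 0 and 4 keep evicting each other from line 0 even though the other lines are empty.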

In cache access operations, each main memory address can be seen as comprising three components. The least significant w bits pinpoint a specific word or byte within a main memory block. Typically, addresses operate at the byte level in modern machines. The remaining s bits designate one of the 2^s blocks of main memory. These s bits are interpreted by the cache logic as a tag with s - r bits (the uppermost portion) and a line field with r bits. This line field distinguishes one of the m = 2^r cache lines. The line offset represents the index bits in the direct mapping approach.
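The field layout described above can be sketched with bit masks and shifts. The function name, the 12-bit example address, and the widths w = 2 and r = 4 are assumptions chosen for illustration.

```python
def split_address(address: int, w: int, r: int) -> tuple[int, int, int]:
    """Split an address into (tag, line, word) fields for direct mapping.

    w is the number of word-offset bits; r is the number of line (index) bits.
    """
    word = address & ((1 << w) - 1)         # least significant w bits
    line = (address >> w) & ((1 << r) - 1)  # next r bits select the cache line
    tag = address >> (w + r)                # remaining s - r uppermost bits
    return tag, line, word


# Hypothetical 12-bit address with w = 2 and r = 4, so s = 10 and the tag has 6 bits.
tag, line, word = split_address(0b101101_1011_01, w=2, r=4)
print(tag, line, word)  # prints 45 11 1
```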

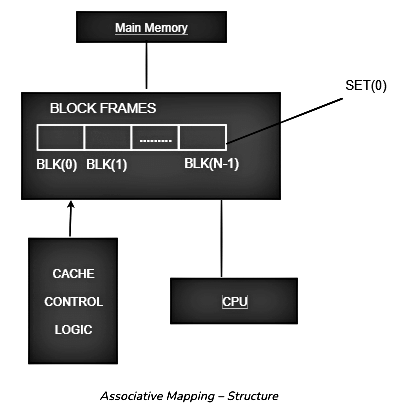

2. Associative Mapping

In this mapping scheme, associative memory stores both the content and the addresses of memory words. Any block can reside in any cache line. The word ID bits specify which word within the block is required, while the tag consists of all the remaining bits. It is regarded as the fastest and most flexible mapping method, because a block is never forced out by another block that maps to the same line. In associative mapping there are no index bits: the index field has zero width.
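A lookup under associative mapping can be sketched as follows. Real hardware compares all stored tags in parallel; this sketch uses a sequential loop instead. The function names, the representation of a line as a (tag, block) tuple, and the sample contents are all illustrative assumptions.

```python
def split_associative_address(address: int, w: int) -> tuple[int, int]:
    """Return (tag, word): the tag is every bit above the w word-offset bits."""
    return address >> w, address & ((1 << w) - 1)


def lookup(lines, address: int, w: int):
    """Return the requested word on a hit, or None on a miss.

    lines is a list whose entries are either None (empty line)
    or a (tag, block) tuple, where block is a list of 2**w words.
    """
    tag, word = split_associative_address(address, w)
    for entry in lines:  # any line may hold the block, so every tag is checked
        if entry is not None and entry[0] == tag:
            return entry[1][word]
    return None


# Hypothetical cache contents: one occupied line holding the block with tag 731.
lines = [None, (731, ["a", "b", "c", "d"]), None]
print(lookup(lines, 2925, w=2))  # tag 731, word 1: prints b
print(lookup(lines, 2929, w=2))  # tag 732 is not cached: prints None
```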

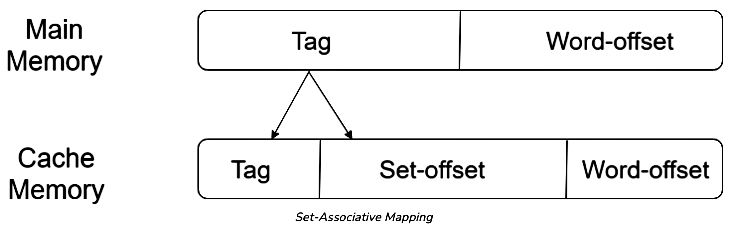

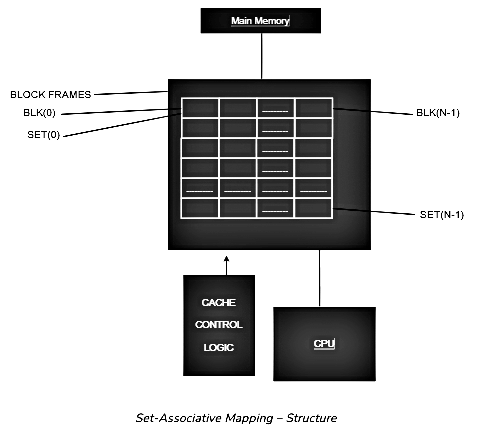

3. Set-Associative Mapping

This mapping approach improves on direct mapping by addressing its main limitation, the potential for thrashing when several frequently used blocks map to the same line. Instead of assigning exactly one line to each block, set-associative mapping groups lines into sets, and a memory block can be placed in any line within one specific set. As a result, each index address in the cache can hold two or more blocks of main memory that share that index. Set-associative mapping thus combines aspects of direct and associative mapping. The index bits are the set offset bits of the address. The cache comprises several sets, each containing multiple lines.

Relationships in the Set-Associative Mapping can be defined as:

m = v * k

i = j mod v

where

i = cache set number

j = main memory block number

v = number of sets

m = number of lines in the cache

k = number of lines in each set
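The relationships m = v * k and i = j mod v can be exercised with a small simulation sketch. The text does not say which block is evicted when a set is full, so this sketch assumes first-in, first-out replacement within each set; the function name and the trace are also illustrative.

```python
from collections import deque


def simulate_set_associative(block_trace, num_lines, lines_per_set):
    """Count hits and misses for a trace of main memory block numbers."""
    if num_lines % lines_per_set != 0:
        raise ValueError("num_lines must be a multiple of lines_per_set")
    num_sets = num_lines // lines_per_set  # v = m / k, from m = v * k
    # Assumption: FIFO replacement. A deque with maxlen drops its oldest entry when full.
    sets = [deque(maxlen=lines_per_set) for _ in range(num_sets)]
    hits = misses = 0
    for j in block_trace:
        target = sets[j % num_sets]  # i = j mod v
        if j in target:              # the block may sit in any line of its set
            hits += 1
        else:
            misses += 1
            target.append(j)
    return hits, misses


trace = [0, 4, 0, 4, 1, 1]
print(simulate_set_associative(trace, num_lines=4, lines_per_set=2))  # prints (3, 3)
print(simulate_set_associative(trace, num_lines=4, lines_per_set=1))  # prints (1, 5)
```

With k = 1 each set holds a single line, so the sketch behaves like direct mapping and blocks 0 and 4 keep evicting each other. With k = 2 both blocks fit in the same set, which removes the thrashing.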

Application of Cache Memory

Cache memory finds applications in various scenarios:

- Primary Cache: Positioned directly on the processor chip, primary cache boasts a small size and access times comparable to processor registers.

- Secondary Cache: Acting as an intermediary between primary cache and the rest of the memory hierarchy, the secondary cache, often referred to as Level 2 (L2) cache, is frequently integrated onto the processor chip.

- Spatial Locality of Reference: When a memory location is accessed, locations close to it are likely to be accessed soon afterwards. This is why, in the event of a page fault, the entire page containing the faulting word is loaded into main memory rather than the requested word alone: loading the whole page gives efficient access to subsequent words in the same locality.

- Temporal Locality of Reference: A recently accessed item is likely to be accessed again soon. The Least Recently Used (LRU) replacement algorithm relies on this principle by keeping recently used items and evicting the one that has gone unused the longest.
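The Least Recently Used (LRU) algorithm mentioned above can be sketched with Python's `collections.OrderedDict`. The class name, the capacity of two blocks, and the access trace are illustrative assumptions; the sketch tracks block numbers only, not their contents.

```python
from collections import OrderedDict


class LRUCache:
    """Tracks which blocks are cached, evicting the least recently used one."""

    def __init__(self, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.blocks = OrderedDict()  # ordered from least to most recently used

    def access(self, block) -> bool:
        """Return True on a hit, False on a miss."""
        if block in self.blocks:
            self.blocks.move_to_end(block)  # mark as most recently used
            return True
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        self.blocks[block] = None
        return False


# Block 1 is reused often, so it stays cached while blocks 2 and 3 are evicted.
cache = LRUCache(capacity=2)
results = [cache.access(b) for b in [1, 2, 1, 3, 1, 2]]
print(results)  # prints [False, False, True, False, True, False]
```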

Advantages of Cache Memory

- Cache memory outperforms both main memory and secondary memory in terms of speed.

- Programs stored in cache memory can be executed more quickly.

- Cache memory offers shorter data access times compared to main memory.

- By storing frequently accessed data and instructions, cache memory enhances CPU performance.

Disadvantages of Cache Memory

- Cache memory comes at a higher cost compared to both primary and secondary memory.

- Cache memory temporarily stores data.

- Data and instructions stored in cache memory are lost when the system is powered off.

- The elevated cost of cache memory contributes to the overall price of the computer system.
