All questions of Process Management for Computer Science Engineering (CSE) Exam

A certain computation generates two arrays a and b such that a[i] = f(i) for 0 ≤ i < n and b[i] = g(a[i]) for 0 ≤ i < n. Suppose this computation is decomposed into two concurrent processes X and Y such that X computes the array a and Y computes the array b. The processes employ two binary semaphores R and S, both initialized to zero. The array a is shared by the two processes. The structures of the processes are shown below.

Process X:

    private i;
    for (i = 0; i < n; i++) {
        a[i] = f(i);
        ExitX(R, S);
    }

Process Y:

    private i;
    for (i = 0; i < n; i++) {
        EntryY(R, S);
        b[i] = g(a[i]);
    }

Q. Which one of the following represents the CORRECT implementations of ExitX and EntryY?

- a) ExitX(R, S) { P(R); V(S); } and EntryY(R, S) { P(S); V(R); }

- b) ExitX(R, S) { V(R); V(S); } and EntryY(R, S) { P(R); P(S); }

- c) ExitX(R, S) { P(S); V(R); } and EntryY(R, S) { V(S); P(R); }

- d) ExitX(R, S) { V(R); P(S); } and EntryY(R, S) { V(S); P(R); }

Correct answer is option 'C'. Can you explain this answer?

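Bijoy Kapoor answered

The goal is that no deadlock occurs and that neither binary semaphore is ever signalled while its value is already 1.

Option A leads to deadlock: X starts with P(R) and Y starts with P(S), and both semaphores are initially 0.

Option B lets X run ahead without waiting, so V(R) and V(S) may each be executed up to n times before Y consumes them, which would push the semaphore values anywhere from 1 to n.

Option D may raise R or S to 2 in some interleavings, for example when X executes V(R) for the next iteration before Y has executed P(R) for the current one.

Option C avoids both problems: Y signals S and then waits on R, while X waits on S before signalling R, so each V is matched by a P before the same semaphore is signalled again.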
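The handshake in option C can be tried out with threads. The following is a minimal sketch, assuming Python's `threading.Semaphore` as a stand-in for the binary semaphores (it is a counting semaphore, but under option C its value never needs to exceed 1). The names `run_option_c`, `f`, and `g` are illustrative and not part of the question.

```python
import threading


def run_option_c(n, f, g):
    """Run processes X and Y with the ExitX/EntryY bodies from option C."""
    a = [None] * n
    b = [None] * n
    R = threading.Semaphore(0)  # both semaphores start at zero
    S = threading.Semaphore(0)

    def process_x():
        for i in range(n):
            a[i] = f(i)
            S.acquire()  # ExitX: P(S)
            R.release()  #        V(R)

    def process_y():
        for i in range(n):
            S.release()  # EntryY: V(S)
            R.acquire()  #         P(R)
            b[i] = g(a[i])

    tx = threading.Thread(target=process_x)
    ty = threading.Thread(target=process_y)
    tx.start()
    ty.start()
    tx.join()
    ty.join()
    return a, b


a, b = run_option_c(5, lambda i: i * i, lambda v: v + 1)
print(b)
```

Y never reads a[i] before X has written it, because P(R) in Y only returns after X has executed V(R) for the same iteration.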

The atomic fetch-and-set x, y instruction unconditionally sets the memory location x to 1 and fetches the old value of x into y without allowing any intervening access to the memory location x. Consider the following implementation of P and V functions on a binary semaphore.

    void P(binary_semaphore *s) {
        unsigned y;
        unsigned *x = &(s->value);
        do {
            fetch-and-set x, y;
        } while (y);
    }

    void V(binary_semaphore *s) {
        s->value = 0;
    }

Q. Which one of the following is true?

- a) The implementation may not work if context switching is disabled in P.

- b) Instead of using fetch-and-set, a pair of normal load/store can be used.

- c) The implementation of V is wrong.

- d) The code does not implement a binary semaphore.

Correct answer is option 'A'. Can you explain this answer?

Riverdale Learning Institute answered

Consider the operation P(). It stores the address of s->value in x, then atomically fetches the old value at x into y and sets that location to 1. The loop exits only when the old value was 0; otherwise the process keeps spinning until some other process executes V() and resets s->value to 0. If context switching is disabled in P, the spinning process is never preempted, so on a single processor no other process gets the chance to execute V() and the while loop runs forever.

Which of the following DMA transfer modes and interrupt handling mechanisms will enable the highest I/O bandwidth?

- a) Transparent DMA and Polling interrupts

- b) Cycle-stealing and Vectored interrupts

- c) Block transfer and Vectored interrupts

- d) Block transfer and Polling interrupts

Correct answer is option 'C'. Can you explain this answer?

Yash Patel answered

Transparent DMA with polling leaves the most bandwidth to the CPU, but the question asks about I/O bandwidth, not CPU bandwidth, so option (A) is wrong.

With cycle stealing, the device transfers data in one stolen cycle and then has to wait a few CPU cycles before it can send to memory again, so option (B) is wrong.

With polling, the CPU takes the initiative and the device is serviced only when the CPU checks it, so I/O bandwidth cannot be high and option (D) is wrong.

With block transfer, the device sends a whole block in a single burst, so the amount of data moved per unit time is high. This makes option (C) correct.

Which one of the following is FALSE?

- a) User level threads are not scheduled by the kernel.

- b) When a user level thread is blocked, all other threads of its process are blocked.

- c) Context switching between user level threads is faster than context switching between kernel level threads.

- d) Kernel level threads cannot share the code segment.

Correct answer is option 'D'. Can you explain this answer?

Rithika Tiwari answered |

A shared variable x, initialized to zero, is operated on by four concurrent processes W, X, Y, Z as follows. Each of the processes W and X reads x from memory, increments by one, stores it to memory, and then terminates. Each of the processes Y and Z reads x from memory, decrements by two, stores it to memory, and then terminates. Each process before reading x invokes the P operation (i.e., wait) on a counting semaphore S and invokes the V operation (i.e., signal) on the semaphore S after storing x to memory. Semaphore S is initialized to two. What is the maximum possible value of x after all processes complete execution?

- a) -2

- b) -1

- c) 1

- d) 2

Correct answer is option 'D'. Can you explain this answer?

Sandeep Sen answered

The processes can interleave in many ways; below is one interleaving in which x attains its maximum value.

Semaphore S is initialized to 2. Process W executes P(S) (S = 1), reads x = 0 and computes 1, but does not yet store the result to x.

Then process Y executes P(S) (S = 0), reads x = 0, stores x = -2, and signals the semaphore (S = 1).

Now process Z executes P(S) (S = 0), reads x = -2, stores x = -4, and signals the semaphore (S = 1).

Now process W stores its stale result, x = 1, and signals the semaphore (S = 2). Then process X executes and increments x to 2.

So the correct option is D.
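To check that no other interleaving does better, the schedules can be enumerated exhaustively. The sketch below is a small state-space search written for this question; the function name `max_final_x` and the phase encoding are assumptions made for illustration.

```python
def max_final_x(deltas=(1, 1, -2, -2), permits=2):
    """Explore every interleaving and return the largest final value of x.

    Each process goes through the phases
    0: before P(S), 1: about to read x, 2: about to store, 3: about to V(S), 4: done.
    """
    start = (0, permits, tuple((0, 0) for _ in deltas))
    seen, stack, best = set(), [start], None
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        x, free, procs = state
        if all(phase == 4 for phase, _ in procs):
            best = x if best is None else max(best, x)
            continue
        for i, (phase, local) in enumerate(procs):
            if phase == 0 and free > 0:      # P(S) succeeds
                nxt_x, nxt_free, nxt_proc = x, free - 1, (1, 0)
            elif phase == 1:                 # read x into a private copy
                nxt_x, nxt_free, nxt_proc = x, free, (2, x)
            elif phase == 2:                 # store the updated private copy
                nxt_x, nxt_free, nxt_proc = local + deltas[i], free, (3, 0)
            elif phase == 3:                 # V(S)
                nxt_x, nxt_free, nxt_proc = x, free + 1, (4, 0)
            else:
                continue                     # blocked on P(S) or already done
            stack.append((nxt_x, nxt_free, procs[:i] + (nxt_proc,) + procs[i + 1:]))
    return best


print(max_final_x())           # semaphore initialized to 2, as in the question
print(max_final_x(permits=1))  # with a mutex, no update can be lost
```

With two permits the search reports 2, matching option D; with a single permit every update is applied and the result is 1 + 1 - 2 - 2 = -2.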

A thread is usually defined as a "light weight process" because an operating system (OS) maintains smaller data structures for a thread than for a process. In relation to this, which of the following is TRUE?

- a) On per-thread basis, the OS maintains only CPU register state

- b) The OS does not maintain a separate stack for each thread

- c) On per-thread basis, the OS does not maintain virtual memory state

- d) On per-thread basis, the OS maintains only scheduling and accounting information

Correct answer is option 'C'. Can you explain this answer?

Naina Shah answered

Threads share the address space of their process. Virtual memory is a per-process concern, not a per-thread one. A thread is a basic unit of CPU utilization, consisting of a program counter, a stack, a set of registers, and a thread ID. A single-threaded process has one program counter and one sequence of instructions that can be carried out at any given time. A multi-threaded process has multiple threads, each with its own program counter, stack, and set of registers, while all of them share the code, data, and certain structures such as open files.

Option (A): the OS maintains more than the CPU register state for each thread; a separate stack and program counter are kept as well. So option (A) is not correct, as it says the OS maintains only CPU register state.

Option (B): according to option (B), the OS does not maintain a separate stack for each thread. But a separate stack is maintained for each thread, so this option is also incorrect.

Option (C): according to option (C), the OS does not maintain virtual memory state per thread. This is correct, because the address space belongs to the process and is shared by all of its threads.

Option (D): according to option (D), the OS maintains only scheduling and accounting information. This is not correct, because the CPU registers, stack, and program counter are also maintained for each thread.

A process executes the following code

    for (i = 0; i < n; i++) fork();

The total number of child processes created is

- a) n

- b) 2^n - 1

- c) 2^n

- d) 2^(n+1) - 1

Correct answer is option 'B'. Can you explain this answer?

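Anshu Mehta answered

Iteration i of the loop (for i = 0 to n-1) creates 2^i new processes, because every process that exists at that point calls fork() once. Summing over all iterations gives 2^0 + 2^1 + ... + 2^(n-1) = 2^n - 1, so there will be 2^n - 1 child processes.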
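The count can be checked without creating real processes. This is a minimal sketch that simulates the loop recursively instead of calling fork(); the function name `children_created` is hypothetical.

```python
def children_created(start, n):
    """Descendants created by a process that enters the loop at i = start."""
    total = 0
    for i in range(start, n):
        # fork() at iteration i creates one child, which resumes the loop at i + 1
        total += 1 + children_created(i + 1, n)
    return total


for n in range(6):
    print(n, children_created(0, n), 2 ** n - 1)
```

Each printed line shows n, the simulated count, and the closed form 2^n - 1 side by side.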

Consider a computer C1 that has n CPUs and k processes. Which of the following statements are false?

- a) The maximum number of processes in ready state is k.

- b) The maximum number of processes in block state is k.

- c) The maximum number of processes in running state is k.

- d) The maximum number of processes in running state is n.

Correct answer is option 'B,C'. Can you explain this answer?

Baishali Reddy answered

Understanding Process States in a Multi-CPU Environment

In a computer system with n CPUs and k processes, each process is in one of the states ready, blocked, or running. Let's analyze the statements to identify which are false.

Statement A: Maximum Processes in Ready State

- The ready state represents processes that are prepared to run but are not currently executing.

- All k processes can be waiting for a CPU at the same instant, so the maximum number of processes in the ready state is indeed k.

Statement B: Maximum Processes in Block State

- The blocked state comprises processes waiting for an event or resource (such as completion of an I/O operation).

- Blocking does not depend on the number of CPUs, so all k processes can be blocked at the same time. Under the usual process-state model the maximum is therefore k, which makes this statement true even though the answer key above lists it as false.

Statement C: Maximum Processes in Running State

- The running state indicates processes currently being executed by CPUs.

- In a system with n CPUs, at most n processes can be running simultaneously. Hence the maximum number of processes in the running state is n, not k, whenever k > n. This statement is false.

Statement D: Maximum Processes in Running State is n

- This statement accurately reflects the system's constraints, as n CPUs can execute at most n processes at any given time.

Conclusion

- C is false because it suggests that k processes can run simultaneously when the limit is the number of CPUs, n.

- B is marked false in the answer key, but the state model does not support that: nothing prevents all k processes from being blocked at once.

Understanding these distinctions is crucial in process management within operating systems.

Consider two processors P1 and P2 executing the same instruction set. Assume that under identical conditions, for the same input, a program running on P2 takes 25% less time but incurs 20% more CPI (clock cycles per instruction) as compared to the program running on P1. If the clock frequency of P1 is 1 GHz, then the clock frequency of P2 (in GHz) is _________.

- a) 1.6

- b) 3.2

- c) 1.2

- d) 0.8

Correct answer is option 'A'. Can you explain this answer?

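Megha Yadav answered

For P1 the clock period is 1 ns. Let the clock period of P2 be t.

Assume, for illustration, that the program executes 10 instructions with a CPI of 1 on P1, so it needs 10 cycles and takes 10 ns. On P2 it takes 25% less time, i.e. 7.5 ns, and with 20% more CPI it needs 10 × 1.2 = 12 cycles. This gives the equation 7.5 ns = 12 × t.

Solving gives t = 0.625 ns, and the inverse of t is 1.6 GHz.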
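The same result follows from the general relation execution time = instruction count × CPI ÷ clock frequency, without picking a particular instruction count. A small sketch using exact fractions to avoid floating-point rounding; the function and parameter names are illustrative.

```python
from fractions import Fraction


def p2_clock_ghz(f1_ghz=Fraction(1), time_ratio=Fraction(3, 4), cpi_ratio=Fraction(6, 5)):
    """Clock frequency of P2 for the same instruction count on both processors.

    T = N * CPI / f, so T2 / T1 = (CPI2 / CPI1) * (f1 / f2),
    which rearranges to f2 = f1 * cpi_ratio / time_ratio.
    """
    return f1_ghz * cpi_ratio / time_ratio


print(float(p2_clock_ghz()))  # 1.6
```

Here `time_ratio` is 3/4 (25% less time) and `cpi_ratio` is 6/5 (20% more CPI), giving 8/5 GHz.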

Time taken to switch between user and kernel modes is _______ the time taken to switch between two processes.

- a) More than

- b) Independent of

- c) Less than

- d) Equal to

Correct answer is option 'C'. Can you explain this answer?

Crack Gate answered

- Switching between kernel mode and user mode is a very fast operation; only a single mode bit has to be changed at the hardware level.

- Switching from one process to another is time-consuming: the system first has to enter kernel mode and then save the registers and other state of the outgoing process in its PCB.

Time taken to switch between user and kernel modes is less than the time taken to switch between two processes, so option C is the correct answer.

Three concurrent processes X, Y, and Z execute three different code segments that access and update certain shared variables. Process X executes the P operation (i.e., wait) on semaphores a, b and c; process Y executes the P operation on semaphores b, c and d; process Z executes the P operation on semaphores c, d, and a before entering the respective code segments. After completing the execution of its code segment, each process invokes the V operation (i.e., signal) on its three semaphores. All semaphores are binary semaphores initialized to one. Which one of the following represents a deadlock-free order of invoking the P operations by the processes?

- a) X: P(a)P(b)P(c) Y: P(b)P(c)P(d) Z: P(c)P(d)P(a)

- b) X: P(b)P(a)P(c) Y: P(b)P(c)P(d) Z: P(a)P(c)P(d)

- c) X: P(b)P(a)P(c) Y: P(c)P(b)P(d) Z: P(a)P(c)P(d)

- d) X: P(a)P(b)P(c) Y: P(c)P(b)P(d) Z: P(c)P(d)P(a)

Correct answer is option 'B'. Can you explain this answer?

Mira Rane answered

Deadlock and Concurrent Processes

In a concurrent system, multiple processes run simultaneously and access shared resources. Deadlock occurs when two or more processes are unable to proceed because each is waiting for another to release a resource. A standard way to avoid deadlock is to make every process acquire its resources in the same global order.

Given Scenario

In this scenario, we have three concurrent processes X, Y, and Z. Each process executes a code segment and accesses shared variables. The processes perform P (wait) and V (signal) operations on semaphores to control resource access. The initial values of all semaphores are 1.

Process X performs P operations on semaphores a, b, and c.

Process Y performs P operations on semaphores b, c, and d.

Process Z performs P operations on semaphores c, d, and a.

After completing their code segments, each process performs V operations on its three semaphores.

Deadlock-Free Order

To ensure a deadlock-free order, the P operations must be sequenced so that circular waiting cannot arise.

From the given options, option B represents a deadlock-free order:

X: P(b)P(a)P(c)

Y: P(b)P(c)P(d)

Z: P(a)P(c)P(d)

All three sequences follow the single global order b, a, c, d: whenever a process requests a semaphore, every semaphore it already holds comes earlier in that order. A chain of processes waiting on each other would have to move strictly forward in this order, so it can never close into a cycle.

Each of the other options allows a cycle. In option A, X can hold a and wait for b, Y can hold b and wait for c, and Z can hold c and d and wait for a. In options C and D, X acquires b before c while Y acquires c before b, so X can hold b and wait for c while Y holds c and waits for b.

Therefore, option B represents a deadlock-free order of invoking the P operations by the processes.
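The four options can also be checked mechanically. The sketch below searches every interleaving of the P operations for a state in which some process is unfinished and nobody can move. It assumes each process runs its code segment once; `can_deadlock` and `OPTIONS` are illustrative names.

```python
def can_deadlock(orders):
    """Return True if some interleaving of the P operations ends in deadlock.

    orders maps a process name to the order in which it calls P().
    A state records, per process, how many semaphores it holds (-1 = finished).
    """
    names = sorted(orders)
    seen, stack = set(), [tuple(0 for _ in names)]
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        held = {orders[n][k] for n, c in zip(names, state) for k in range(c)}
        moves = []
        for idx, (n, c) in enumerate(zip(names, state)):
            if c == -1:
                continue                     # already finished
            if c == len(orders[n]):          # holds everything: run, then V() on all
                moves.append(state[:idx] + (-1,) + state[idx + 1:])
            elif orders[n][c] not in held:   # next semaphore is free
                moves.append(state[:idx] + (c + 1,) + state[idx + 1:])
        if not moves and any(c != -1 for c in state):
            return True                      # unfinished processes, nobody can move
        stack.extend(moves)
    return False


OPTIONS = {
    "a": {"X": "abc", "Y": "bcd", "Z": "cda"},
    "b": {"X": "bac", "Y": "bcd", "Z": "acd"},
    "c": {"X": "bac", "Y": "cbd", "Z": "acd"},
    "d": {"X": "abc", "Y": "cbd", "Z": "cda"},
}
for label, orders in OPTIONS.items():
    print(label, "can deadlock" if can_deadlock(orders) else "deadlock-free")
```

Only option b is reported as deadlock-free, in line with the ordering argument above.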

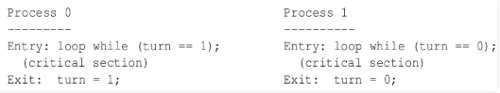

Consider the methods used by processes P1 and P2 for accessing their critical sections whenever needed, as given below. The initial values of shared boolean variables S1 and S2 are randomly assigned.

Method Used by P1

    while (S1 == S2) ;
    Critical Section
    S1 = S2;

Method Used by P2

    while (S1 != S2) ;
    Critical Section
    S2 = not (S1);

Q. Which one of the following statements describes the properties achieved?

- a) Mutual exclusion but not progress

- b) Progress but not mutual exclusion

- c) Neither mutual exclusion nor progress

- d) Both mutual exclusion and progress

Correct answer is option 'A'. Can you explain this answer?

Saptarshi Nair answered

Mutual Exclusion: A way of making sure that if one process is using shared modifiable data, the other processes are excluded from doing the same thing. While one process accesses the shared variable, all other processes desiring to do so at the same moment are kept waiting; when that process has finished, one of the waiting processes is allowed to proceed. In this fashion, each process accessing the shared data (variables) excludes all others from doing so simultaneously. This is called Mutual Exclusion.

Progress Requirement: If no process is executing in its critical section and there exist some processes that wish to enter their critical section, then the selection of the processes that will enter the critical section next cannot be postponed indefinitely.

Solution: The Mutual Exclusion requirement is satisfied by the above solution: P1 can enter its critical section only if S1 is not equal to S2, and P2 can enter its critical section only if S1 is equal to S2, so the two can never be inside together. The Progress Requirement, however, is not satisfied. Suppose S1 = 1 and S2 = 0, P1 is not interested in entering its critical section, but P2 wants to enter. P2 cannot enter, because S1 becomes equal to S2 only after P1 has gone through its critical section and executed S1 = S2. Progress is violated whenever a process that is not interested in entering the critical section prevents an interested process from entering.
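Both properties can be checked by exploring every reachable state of the two processes. The following is a minimal model-checking sketch that assumes each test and each assignment is atomic; the state encoding and the names `successors` and `check` are made up for this example.

```python
from itertools import product

REMAINDER, WAITING, CRITICAL = 0, 1, 2


def successors(state):
    """Yield every state reachable in one step of P1 or P2."""
    s1, s2, p1, p2 = state
    # P1: spins while S1 == S2, sets S1 = S2 on exit
    if p1 == REMAINDER:
        yield (s1, s2, WAITING, p2)
    elif p1 == WAITING and s1 != s2:
        yield (s1, s2, CRITICAL, p2)
    elif p1 == CRITICAL:
        yield (s2, s2, REMAINDER, p2)
    # P2: spins while S1 != S2, sets S2 = not S1 on exit
    if p2 == REMAINDER:
        yield (s1, s2, p1, WAITING)
    elif p2 == WAITING and s1 == s2:
        yield (s1, s2, p1, CRITICAL)
    elif p2 == CRITICAL:
        yield (s1, not s1, p1, REMAINDER)


def check():
    """Return (mutual_exclusion_holds, progress_holds) over all initial values."""
    stack = [(s1, s2, REMAINDER, REMAINDER)
             for s1, s2 in product((False, True), repeat=2)]
    seen = set()
    mutual_exclusion, progress = True, True
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        s1, s2, p1, p2 = state
        if p1 == CRITICAL and p2 == CRITICAL:
            mutual_exclusion = False
        # A waiting process is held up by one that sits in its remainder section.
        if p1 == WAITING and p2 == REMAINDER and s1 == s2:
            progress = False
        if p2 == WAITING and p1 == REMAINDER and s1 != s2:
            progress = False
        stack.extend(successors(state))
    return mutual_exclusion, progress


print(check())
```

The search never finds both processes in the critical section, but it does reach states where a waiting process depends on a process that is not competing, which is the progress violation described above.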

Fetch_And_Add(X,i) is an atomic Read-Modify-Write instruction that reads the value of memory location X, increments it by the value i, and returns the old value of X. It is used in the pseudocode shown below to implement a busy-wait lock. L is an unsigned integer shared variable initialized to 0. The value of 0 corresponds to lock being available, while any non-zero value corresponds to the lock being not available.

    AcquireLock(L) {
        while (Fetch_And_Add(L, 1))
            L = 1;
    }

    ReleaseLock(L) {
        L = 0;
    }

This implementation

- a) fails as L can overflow

- b) fails as L can take on a non-zero value when the lock is actually available

- c) works correctly but may starve some processes

- d) works correctly without starvation

Correct answer is option 'B'. Can you explain this answer?

Mahesh Pillai answered

Take a closer look at the while loop below.

    while (Fetch_And_Add(L, 1))
        L = 1;  // A waiting process can be here just after
                // the lock is released, and can make L = 1.

Consider a situation where a process has just released the lock and made L = 0. Let there be one more process waiting for the lock, that is, executing the AcquireLock() function. Just after L was made 0, let the waiting process execute the line L = 1. Now the lock is available but L = 1. Since L is non-zero, neither the waiting process nor any process arriving later can come out of the while loop. This problem can be resolved by changing AcquireLock() to the following.

    AcquireLock(L) {
        while (Fetch_And_Add(L, 1))
            ;  // do nothing
    }

Note that in this variant every failed attempt still increments L, so L keeps growing while the lock is held and could in principle overflow.
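The problematic interleaving can be replayed step by step. The sketch below runs the steps sequentially in one thread to mimic two processes A and B; `SharedWord`, `fetch_and_add`, and `lost_release_trace` are illustrative names, and the atomicity of the real instruction is only modelled, not enforced.

```python
class SharedWord:
    """Stands in for the shared unsigned integer L."""

    def __init__(self):
        self.value = 0


def fetch_and_add(word, i):
    """Model of Fetch_And_Add: add i to the word and return the old value."""
    old = word.value
    word.value = old + i
    return old


def lost_release_trace():
    """Replay the interleaving in which a release is overwritten."""
    L = SharedWord()
    trace = []
    assert fetch_and_add(L, 1) == 0   # A: loop condition is false, A holds the lock
    trace.append(("A acquires", L.value))
    assert fetch_and_add(L, 1) == 1   # B: non-zero, B is about to run L = 1
    trace.append(("B enters loop body", L.value))
    L.value = 0                       # A: ReleaseLock
    trace.append(("A releases", L.value))
    L.value = 1                       # B: the pending L = 1 overwrites the release
    trace.append(("B executes L = 1", L.value))
    trace.append(("next Fetch_And_Add returns", fetch_and_add(L, 1)))
    return trace


for step in lost_release_trace():
    print(step)
```

After the last step nobody holds the lock, yet Fetch_And_Add keeps returning a non-zero value, which is exactly the failure described in option (b).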

A _________ process is moved to the ready state when its time allocation expires.

- a) Blocked

- b) New

- c) Running

- d) Suspended

Correct answer is option 'C'. Can you explain this answer?

Hrishikesh Unni answered |

Understanding Process States

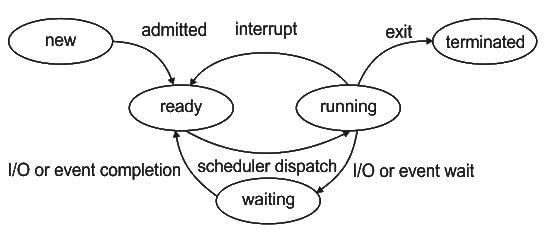

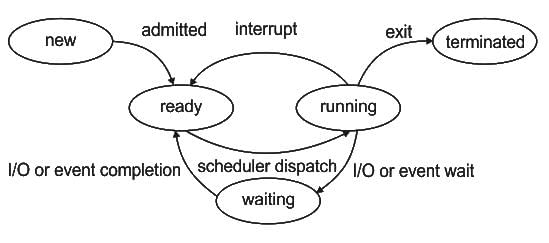

In operating systems, processes transition through various states during their execution. The main states include new, ready, running, blocked, and suspended.

Key States of a Process

- New: The process is created and is in the admission phase waiting to be moved to the ready state.

- Ready: The process is prepared to run but is waiting for CPU time.

- Running: The process is currently executing on the CPU.

- Blocked: The process cannot continue because it is waiting for some event (like I/O operations).

- Suspended: The process is temporarily halted and can be moved back to ready or terminated later.

Time Allocation Expiry

When a process is in the running state, it has a specific time slice or quantum allocated by the CPU scheduler. Once this time allocation expires, the process cannot continue executing.

Transition to Ready State

- Upon expiration of the time slice, the running process is preempted.

- The operating system then moves it back to the ready state, allowing other processes to utilize the CPU.

- This ensures fair scheduling, preventing any single process from monopolizing CPU time.

Conclusion

Thus, the correct answer to the question is option 'C' (Running). A running process transitions to the ready state when its time allocation expires, allowing for efficient multitasking and resource management in the operating system.
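The transitions described in this answer can be written as a small state machine. This is only a sketch: the enum names (`PS_*`, `EV_*`) and the function `next_state` are made up for illustration, and the suspended state is left out to keep it short.

```c
#include <assert.h>

typedef enum { PS_NEW, PS_READY, PS_RUNNING, PS_BLOCKED, PS_TERMINATED } proc_state;
typedef enum { EV_ADMIT, EV_DISPATCH, EV_QUANTUM_EXPIRED,
               EV_WAIT, EV_EVENT_DONE, EV_EXIT } proc_event;

/* Returns the state after the event, or the same state when the event
   does not apply to the current state. */
proc_state next_state(proc_state s, proc_event e) {
    assert(s >= PS_NEW && s <= PS_TERMINATED);
    switch (s) {
    case PS_NEW:
        return e == EV_ADMIT ? PS_READY : s;
    case PS_READY:
        return e == EV_DISPATCH ? PS_RUNNING : s;
    case PS_RUNNING:
        if (e == EV_QUANTUM_EXPIRED) return PS_READY;   /* preempted */
        if (e == EV_WAIT)            return PS_BLOCKED; /* e.g. waits for I/O */
        if (e == EV_EXIT)            return PS_TERMINATED;
        return s;
    case PS_BLOCKED:
        return e == EV_EVENT_DONE ? PS_READY : s;
    default:
        return s;                                       /* terminated */
    }
}
```

In this model only a running process reacts to `EV_QUANTUM_EXPIRED`, and it goes to ready, which matches option 'C'.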


The enter_CS() and leave_CS() functions to implement critical section of a process are realized using test-and-set instruction as follows:

    void enter_CS(X)
    {
        while test-and-set(X) ;
    }

    void leave_CS(X)
    {
        X = 0;
    }

Q. In the above solution, X is a memory location associated with the CS and is initialized to 0. Now consider the following statements:

I. The above solution to CS problem is deadlock-free.

II. The solution is starvation free.

III. The processes enter CS in FIFO order.

IV. More than one process can enter CS at the same time.

Which of the above statements is TRUE?

- a) I only

- b) I and II

- c) II and III

- d) IV only

Correct answer is option 'A'.

Bhargavi Kulkarni answered

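The above solution is a simple test-and-set solution that makes sure that deadlock doesn't occur, but it doesn't use any queue to avoid starvation or to have FIFO order. Because test-and-set is atomic, only one process at a time can find X = 0 and enter, so statement IV is false as well.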
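For comparison, the same lock can be sketched with the C11 `atomic_flag` type, whose `atomic_flag_test_and_set` behaves like the test-and-set instruction in the question. The helper `try_enter_CS` and the walk-through function `single_thread_demo` are additions for illustration and are not part of the exam code.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

static atomic_flag X = ATOMIC_FLAG_INIT;    /* clear corresponds to X = 0 */

/* One test-and-set attempt: true if the caller obtained the lock. */
bool try_enter_CS(void) {
    return !atomic_flag_test_and_set(&X);
}

/* Spins until the flag was clear at the moment of the test-and-set. */
void enter_CS(void) {
    while (atomic_flag_test_and_set(&X)) {
        /* busy wait: no queue, so no FIFO order and no starvation bound */
    }
}

void leave_CS(void) {
    atomic_flag_clear(&X);
}

/* Single-threaded walk-through; real contention needs several threads. */
void single_thread_demo(void) {
    enter_CS();                  /* X was 0, so this returns at once */
    assert(!try_enter_CS());     /* X is 1: a second caller would spin */
    leave_CS();                  /* X is 0 again */
    assert(try_enter_CS());      /* the lock can be taken again */
    leave_CS();
}
```

Nothing in this code records the order in which waiters arrived, which is why statements II and III do not hold.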

Consider two processes P1 and P2 accessing the shared variables X and Y protected by two binary semaphores SX and SY respectively, both initialized to 1. P and V denote the usual semaphore operators, where P decrements the semaphore value, and V increments the semaphore value. The pseudo-code of P1 and P2 is as follows:

P1:

    While true do {
        L1 : ................
        L2 : ................
        X = X + 1;
        Y = Y - 1;
        V(SX);
        V(SY);
    }

P2:

    While true do {
        L3 : ................
        L4 : ................
        Y = Y + 1;
        X = Y - 1;
        V(SY);
        V(SX);
    }

Q. In order to avoid deadlock, the correct operators at L1, L2, L3 and L4 are respectively

- a) P(SY), P(SX); P(SX), P(SY)

- b) P(SX), P(SY); P(SY), P(SX)

- c) P(SX), P(SX); P(SY), P(SY)

- d) P(SX), P(SY); P(SX), P(SY)

Correct answer is option 'D'.

Garima Dasgupta answered

Option A: At line L1, P1 executes P(SY), and at line L3, P2 executes P(SX). P1 then wants SX, which is held by P2, while P2 wants SY, which is held by P1. So a circular wait exists, which means deadlock.

Option B: At line L1, P1 executes P(SX), and at line L3, P2 executes P(SY). P1 then wants SY, which is held by P2, while P2 wants SX, which is held by P1. So a circular wait exists, which means deadlock.

Option C: At lines L1 and L2, P1 executes P(SX) twice, and at lines L3 and L4, P2 executes P(SY) twice. The second P blocks each process on a semaphore it already holds, so SX and SY can never be released by P1 and P2.

Option D: Both processes acquire SX first and SY second. Since the order is the same, no circular wait can form, so deadlock is avoided.
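The four options can also be checked mechanically by exploring every interleaving of the two loops. The following is a minimal sketch under stated assumptions: each P or V is treated as one atomic step, the updates to X and Y are ignored, and the names `plan`, `state` and `can_deadlock` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

enum { SX = 0, SY = 1 };

/* One loop iteration: P(acq[0]); P(acq[1]); ...; V(rel[0]); V(rel[1]); */
typedef struct { int acq[2]; int rel[2]; } plan;

/* pc[i] is the next step (0..3) of process i; sem[] holds the semaphore values. */
typedef struct { int pc[2]; int sem[2]; } state;

/* Advances process i by one step; returns false if it would block. */
static bool step(const plan *p, state *s, int i) {
    int pc = s->pc[i];
    if (pc < 2) {                          /* P operation */
        int k = p[i].acq[pc];
        if (s->sem[k] == 0) return false;
        s->sem[k] = 0;
    } else {                               /* V operation never blocks */
        s->sem[p[i].rel[pc - 2]] = 1;
    }
    s->pc[i] = (pc + 1) % 4;
    return true;
}

/* Depth-first search; true if a state with no runnable process is reachable. */
static bool explore(const plan *p, state s, bool *seen) {
    int id = ((s.pc[0] * 4 + s.pc[1]) * 2 + s.sem[0]) * 2 + s.sem[1];
    assert(id >= 0 && id < 64);
    if (seen[id]) return false;
    seen[id] = true;

    bool moved = false, found = false;
    for (int i = 0; i < 2; i++) {
        state t = s;
        if (step(p, &t, i)) {
            moved = true;
            if (explore(p, t, seen)) found = true;
        }
    }
    return found || !moved;                /* nobody can move: deadlock */
}

bool can_deadlock(plan p1, plan p2) {
    plan p[2] = { p1, p2 };
    bool seen[64] = { false };
    state start = { {0, 0}, {1, 1} };      /* both semaphores start at 1 */
    return explore(p, start, seen);
}
```

With the release orders taken from the question (P1 releases SX then SY, P2 releases SY then SX), `can_deadlock` reports a reachable deadlock for options A, B and C and none for option D.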

The P and V operations on counting semaphores, where s is a counting semaphore, are defined as follows:

    P(s) : s = s - 1;
           if (s < 0) then wait;

    V(s) : s = s + 1;
           if (s <= 0) then wakeup a process waiting on s;

Assume that Pb and Vb, the wait and signal operations on binary semaphores, are provided. Two binary semaphores Xb and Yb are used to implement the semaphore operations P(s) and V(s) as follows:

    P(s) : Pb(Xb);
           s = s - 1;
           if (s < 0) {
               Vb(Xb) ;
               Pb(Yb) ;
           }
           else Vb(Xb);

    V(s) : Pb(Xb) ;
           s = s + 1;
           if (s <= 0) Vb(Yb) ;
           Vb(Xb) ;

The initial values of Xb and Yb are respectively

- a) 0 and 0

- b) 0 and 1

- c) 1 and 0

- d) 1 and 1

Correct answer is option 'C'.

Priyanka Chopra answered

Suppose Xb = 0. Then the very first Pb(Xb), whether in P(s) or in V(s), blocks the calling process, and since every Vb(Xb) comes only after a successful Pb(Xb), no process can ever proceed. So Xb must be 1.

Suppose s = 2 (meaning 2 processes may access the shared resource) and Xb = 1. The first P(s) executes Pb(Xb), which makes Xb zero. s becomes 1, and then Vb(Xb) is executed, which brings Xb back to one. The same steps are repeated for the second process, leaving Xb as one and s as zero.

Now suppose one more process comes. Pb(Xb) makes Xb zero and s becomes -1, so this process takes the (s < 0) branch and calls Vb(Xb) and Pb(Yb). Vb(Xb) brings Xb back to one, and Pb(Yb) tries to decrement Yb.

Case 1: If Yb is 0, Pb(Yb) blocks the process. In total 2 processes access the shared resource, as the counting semaphore allows, and s = -1 means 1 process is waiting, which is exactly the process that just got blocked. So this is consistent.

Case 2: If Yb is 1, Pb(Yb) succeeds and makes Yb 0. In total 3 processes access the shared resource although the counting semaphore allows at most 2, and s = -1 says 1 process is waiting although no process is waiting. So this is wrong.

Hence the initial values are Xb = 1 and Yb = 0.

The semaphore variables full, empty and mutex are initialized to 0, n and 1, respectively. Process P1 repeatedly adds one item at a time to a buffer of size n, and process P2 repeatedly removes one item at a time from the same buffer using the programs given below. In the programs, K, L, M and N are unspecified statements.

P1:

    while (1) { K; P(mutex); Add an item to the buffer; V(mutex); L; }

P2:

    while (1) { M; P(mutex); Remove an item from the buffer; V(mutex); N; }

The statements K, L, M and N are respectively

- a) P(full), V(empty), P(full), V(empty)

- b) P(full), V(empty), P(empty), V(full)

- c) P(empty), V(full), P(empty), V(full)

- d) P(empty), V(full), P(full), V(empty)

Correct answer is option 'D'.

Nilesh Saha answered

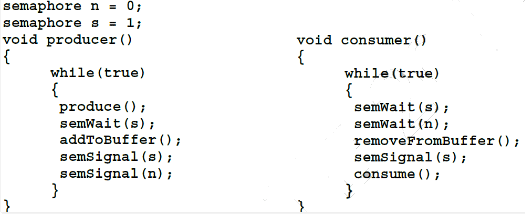

Process P1 is the producer and process P2 is the consumer.

Semaphore ‘full’ is initialized to '0'. This means there is no item in the buffer. Semaphore ‘empty’ is initialized to 'n'. This means there is space for n items in the buffer.

In process P1, wait on semaphore 'empty' signifies that if there is no space in buffer then P1 can not produce more items. Signal on semaphore 'full' is to signify that one item has been added to the buffer.

In process P2, wait on semaphore 'full' signifies that if the buffer is empty then the consumer cannot consume any item. Signal on semaphore 'empty' frees one space in the buffer after consumption of an item.

Thus, option (D) is correct.
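The placement of K, L, M and N can be tried out in a small single-threaded model. This is a sketch under stated assumptions, not a real concurrent implementation: semaphores are plain integers, `try_P` is a hypothetical non-blocking stand-in that returns false where a real P would block, and `CAPACITY` plays the role of n.

```c
#include <assert.h>
#include <stdbool.h>

#define CAPACITY 2                      /* the buffer size n, chosen arbitrarily */

static int full = 0, empty = CAPACITY, mutex = 1;
static int buffer[CAPACITY], in = 0, out = 0;

/* Non-blocking P: returns false where a real P would block. */
static bool try_P(int *s) {
    if (*s == 0) return false;
    (*s)--;
    return true;
}

static void V(int *s) { (*s)++; }

/* P1 (producer): returns false if it would have to wait for free space. */
bool produce(int item) {
    if (!try_P(&empty)) return false;   /* K: P(empty) */
    bool got = try_P(&mutex);           /* P(mutex) */
    assert(got);                        /* always free in this one-thread model */
    buffer[in] = item;
    in = (in + 1) % CAPACITY;
    V(&mutex);
    V(&full);                           /* L: V(full) */
    return true;
}

/* P2 (consumer): returns false if it would have to wait for an item. */
bool consume(int *item) {
    if (!try_P(&full)) return false;    /* M: P(full) */
    bool got = try_P(&mutex);           /* P(mutex) */
    assert(got);
    *item = buffer[out];
    out = (out + 1) % CAPACITY;
    V(&mutex);
    V(&empty);                          /* N: V(empty) */
    return true;
}
```

In this model a `consume` on an empty buffer and a `produce` on a full buffer both report that they would block, which is the behavior the choice in option (D) is meant to give.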


The atomic fetch-and-set x, y instruction unconditionally sets the memory location x to 1 and fetches the old value of x in y without allowing any intervening access to the memory location x. Consider the following implementation of P and V functions on a binary semaphore S.

    void P (binary_semaphore *s)
    {
        unsigned y;
        unsigned *x = &(s->value);
        do
        {
            fetch-and-set x, y;
        }
        while (y);
    }

    void V (binary_semaphore *s)
    {
        s->value = 0;
    }

Q. Which one of the following is true?

- a) The implementation may not work if context switching is disabled in P

- b) Instead of using fetch-and-set, a pair of normal load/store can be used

- c) The implementation of V is wrong

- d) The code does not implement a binary semaphore

Correct answer is option 'A'.

Yash Patel answered

Let us talk about the operation P(). It stores the address of s->value in x, then atomically fetches the old value at that location into y and sets the location to 1. The do-while loop of a process will continue forever unless some other process executes V() and sets the value back to 0. If context switching is disabled in P, the loop will run forever, as no other process will be able to execute V().

A process waiting to be assigned to a processor is considered to be in ___ state.

- a) waiting

- b) ready

- c) terminated

- d) running

Correct answer is option 'B'.

Gate Gurus answered

Whenever a process executes, it goes through several phases or states. These states have their functions.

New – The process is being created

Running – Instructions are being executed

Waiting – The process is waiting for some event to occur, such as I/O completion

Ready – The process is waiting to be assigned to a processor

Terminated – The process has finished execution.

Consider the following statements about user level threads and kernel level threads. Which one of the following statements is FALSE?

- a) Context switch time is longer for kernel level threads than for user level threads.

- b) User level threads do not need any hardware support.

- c) Related kernel level threads can be scheduled on different processors in a multi-processor system.

- d) Blocking one kernel level thread blocks all related threads.

Correct answer is option 'D'.

Aniket Kulkarni answered

Kernel level threads are managed by the OS, therefore, thread operations are implemented in the kernel code. Kernel level threads can also utilize multiprocessor systems by splitting threads on different processors. If one thread blocks it does not cause the entire process to block. Kernel level threads have disadvantages as well. They are slower than user level threads due to the management overhead. Kernel level context switch involves more steps than just saving some registers. Finally, they are not portable because the implementation is operating system dependent.

Option (A): Context switch time is longer for kernel level threads than for user level threads. True. User level threads are managed by a user-level library, while kernel level threads are managed by the OS. Kernel level thread management involves overheads that are not present in user level thread management, so context switch time is longer for kernel level threads.

Option (B): User level threads do not need any hardware support. True. User level threads are managed and implemented by libraries in user space, so they do not need any hardware support.

Option (C): Related kernel level threads can be scheduled on different processors in a multi-processor system. True.

Option (D): Blocking one kernel level thread blocks all related threads. False. Since kernel level threads are managed by the operating system, if one thread blocks, it does not cause all related threads or the entire process to block.


The following two functions P1 and P2 that share a variable B with an initial value of 2 execute concurrently.

    P1()
    {
        C = B - 1;
        B = 2*C;
    }

    P2()
    {
        D = 2 * B;
        B = D - 1;
    }

The number of distinct values that B can possibly take after the execution is

- a) 3

- b) 2

- c) 5

- d) 4

Correct answer is option 'A'.

Tushar Unni answered

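Treating each statement as atomic, the concurrent processes can interleave in the following ways:

- P1 runs completely before P2: C = 1, B = 2, D = 4, B = 3.

- P2 runs completely before P1: D = 4, B = 3, C = 2, B = 4.

- Both processes read B = 2 before either writes it, so C = 1 and D = 4. The last write wins: B = 2 if P1 writes last, B = 3 if P2 writes last.

There are 3 different possible values of B: 2, 3 and 4.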
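The count can be confirmed by brute force over every interleaving of the four statements. This is a minimal sketch that assumes each statement executes atomically; the function names and the bound `MAX_VALUE` are chosen for illustration.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_VALUE 64    /* final values outside [0, MAX_VALUE) are ignored */

/* i and j count how many statements P1 and P2 have already executed. */
static void run(int i, int j, int B, int C, int D, bool seen[MAX_VALUE]) {
    if (i == 2 && j == 2) {
        if (B >= 0 && B < MAX_VALUE) seen[B] = true;
        return;
    }
    if (i == 0)      run(1, j, B, B - 1, D, seen);    /* P1: C = B - 1 */
    else if (i == 1) run(2, j, 2 * C, C, D, seen);    /* P1: B = 2 * C */
    if (j == 0)      run(i, 1, B, C, 2 * B, seen);    /* P2: D = 2 * B */
    else if (j == 1) run(i, 2, D - 1, C, D, seen);    /* P2: B = D - 1 */
}

/* True if some interleaving ends with B == v. */
bool final_value_reachable(int initial_B, int v) {
    assert(v >= 0 && v < MAX_VALUE);
    bool seen[MAX_VALUE] = { false };
    run(0, 0, initial_B, 0, 0, seen);
    return seen[v];
}

/* Number of distinct final values of B over all interleavings. */
int count_final_values(int initial_B) {
    bool seen[MAX_VALUE] = { false };
    run(0, 0, initial_B, 0, 0, seen);
    int n = 0;
    for (int v = 0; v < MAX_VALUE; v++) n += seen[v];
    return n;
}
```

`count_final_values(2)` evaluates to 3, and `final_value_reachable` is true exactly for 2, 3 and 4.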

With respect to operating systems, which of the following is NOT a valid process state?

- a) Ready

- b) Waiting

- c) Running

- d) Starving

Correct answer is option 'D'.

Crack Gate answered

Process States:

Each process goes through different states in its life cycle,

- New (Create) – In this step, the process is about to be created but not yet created, it is the program that is present in secondary memory.

- Ready – New -> Ready to run. After the creation of a process, the process enters the ready state. i.e. the process is loaded into the main memory

- Run – The process is chosen by the CPU scheduler for execution and the instructions within the process are executed by any one of the available CPU cores.

- Blocked or wait – Whenever the process requests access to I/O or needs input from the user or needs access to a critical region it enters the blocked or wait state.

- Terminated or completed – Process is killed as well as PCB is deleted.

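Starving is not one of these states. Starvation describes a process that keeps waiting indefinitely, not a state in the process life cycle. Hence the correct answer is Starving.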

Consider the following code fragment:

    if (fork() == 0)
    { a = a + 5; printf("%d,%d", a, &a); }
    else { a = a - 5; printf("%d, %d", a, &a); }

Let u, v be the values printed by the parent process, and x, y be the values printed by the child process. Which one of the following is TRUE?

- a) u = x + 10 and v = y

- b) u = x + 10 and v != y

- c) u + 10 = x and v = y

- d) u + 10 = x and v != y

Correct answer is option 'C'.

Anirban Khanna answered

When a fork() system call is issued, a copy of all the pages corresponding to the parent process is created, loaded into a separate memory location by the OS for the child process. But this is not needed in certain cases. When the child is needed just to execute a command for the parent process, there is no need for copying the parent process’ pages, since exec replaces the address space of the process which invoked it with the command to be executed. In such cases, a technique called copy-on-write (COW) is used. With this technique, when a fork occurs, the parent process’s pages are not copied for the child process. Instead, the pages are shared between the child and the parent process. Whenever a process (parent or child) modifies a page, a separate copy of that particular page alone is made for that process (parent or child) which performed the modification. This process will then use the newly copied page rather than the shared one in all future references.

fork() returns 0 in child process and process ID of child process in parent process.

In the child (x): a = a + 5

In the parent (u): a = a - 5

The child process executes the if part and the parent process executes the else part. Assume that the initial value of a is 6. Then the value of a printed by the child process is 11, and the value of a printed by the parent process is 1. Therefore u + 10 = x.

Now the second part. The fork operation creates a separate address space for the child, but the child process has an exact copy of all the memory segments of the parent process. Hence the virtual addresses and the mapping (initially) are the same for both the parent process and the child process.

PS: The virtual address is the same, but it exists in each process's own virtual address space, and printing &a prints the virtual address. Hence the answer is v = y.
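Both claims can be observed on a POSIX system. The sketch below is an illustration, not the exam code: it sends the child's result to the parent through a pipe instead of printing it, so that the two can be compared in one place, and the names `report` and `fork_demo` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

typedef struct { int value; uintptr_t addr; } report;

/* Forks once. Fills *parent and *child with the value and the virtual
   address of a as seen in each process. Returns 0 on success, -1 on error. */
int fork_demo(int a, report *parent, report *child) {
    assert(parent != NULL && child != NULL);

    int fd[2];
    if (pipe(fd) == -1) return -1;

    pid_t pid = fork();
    if (pid == -1) { close(fd[0]); close(fd[1]); return -1; }

    if (pid == 0) {                        /* child: fork() returned 0 */
        close(fd[0]);
        a = a + 5;
        report r = { a, (uintptr_t)&a };
        ssize_t n = write(fd[1], &r, sizeof r);
        _exit(n == (ssize_t)sizeof r ? 0 : 1);
    }

    close(fd[1]);                          /* parent: fork() returned the child's pid */
    a = a - 5;
    parent->value = a;
    parent->addr = (uintptr_t)&a;

    ssize_t n = read(fd[0], child, sizeof *child);
    close(fd[0]);

    int status;
    if (waitpid(pid, &status, 0) == -1) return -1;
    return n == (ssize_t)sizeof *child ? 0 : -1;
}
```

Calling `fork_demo(6, &p, &c)` should leave `p.value` at 1 and `c.value` at 11, with `p.addr` equal to `c.addr`, since the child starts as a copy of the parent's address space.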

Two processes, P1 and P2, need to access a critical section of code. Consider the following synchronization construct used by the processes:

    /* P1 */
    while (true) {
        wants1 = true;
        while (wants2 == true);
        /* Critical Section */
        wants1 = false;
    }
    /* Remainder section */

    /* P2 */
    while (true) {
        wants2 = true;
        while (wants1 == true);
        /* Critical Section */
        wants2 = false;
    }
    /* Remainder section */

Here, wants1 and wants2 are shared variables, which are initialized to false. Which one of the following statements is TRUE about the above construct?

- a) It does not ensure mutual exclusion.

- b) It does not ensure bounded waiting.

- c) It requires that processes enter the critical section in strict alternation.

- d) It does not prevent deadlocks, but ensures mutual exclusion.

Correct answer is option 'D'.

Nidhi Tiwari answered

Bounded waiting: There exists a bound, or limit, on the number of times other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

Mutual exclusion: It prevents simultaneous access to a shared resource. This concept is used in concurrent programming with a critical section, a piece of code in which processes or threads access a shared resource.

Solution: Two processes, P1 and P2, need to access a critical section of code. Here, wants1 and wants2 are shared variables, which are initialized to false. If both processes set their flags before either one tests the other's flag, wants1 and wants2 are both true, and P1 and P2 each spin in the inner while loop waiting for the other to finish. Neither flag is ever cleared, so the loops run indefinitely, which leads to deadlock.

Now assume P1 is in its critical section. Then wants1 is true, so P2 cannot get past its inner while loop, and vice versa. This satisfies the property of mutual exclusion.

The bounded waiting condition is also satisfied here, as there is a bound on the number of times the other process can enter the critical section after a process has requested access to it.
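Both properties can be checked by exploring every reachable state of the two loops. This is a minimal sketch that assumes each assignment and each test of a flag is one atomic step; the names `verdict`, `state` and `check_flags_protocol` are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>

typedef struct { bool mutual_exclusion_broken; bool can_get_stuck; } verdict;

/* pc values: 0 = about to set its own flag, 1 = spinning on the other flag,
   2 = inside the critical section (the next step clears its own flag). */
typedef struct { int pc[2]; bool wants[2]; } state;

/* Advances process i by one step; returns false while it must keep spinning. */
static bool step(state *s, int i) {
    int other = 1 - i;
    switch (s->pc[i]) {
    case 0:
        s->wants[i] = true;
        s->pc[i] = 1;
        return true;
    case 1:
        if (s->wants[other]) return false;
        s->pc[i] = 2;
        return true;
    default:
        s->wants[i] = false;
        s->pc[i] = 0;
        return true;
    }
}

static void explore(state s, bool *seen, verdict *v) {
    int id = ((s.pc[0] * 3 + s.pc[1]) * 2 + s.wants[0]) * 2 + s.wants[1];
    assert(id >= 0 && id < 36);
    if (seen[id]) return;
    seen[id] = true;

    if (s.pc[0] == 2 && s.pc[1] == 2) v->mutual_exclusion_broken = true;

    bool moved = false;
    for (int i = 0; i < 2; i++) {
        state t = s;
        if (step(&t, i)) { moved = true; explore(t, seen, v); }
    }
    if (!moved) v->can_get_stuck = true;   /* both spin forever */
}

verdict check_flags_protocol(void) {
    bool seen[36] = { false };
    verdict v = { false, false };
    state start = { {0, 0}, {false, false} };
    explore(start, seen, &v);
    return v;
}
```

The search never finds both processes in the critical section, but it does reach the state where both flags are true and neither process can move, in line with option 'D'.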

Barrier is a synchronization construct where a set of processes synchronizes globally i.e. each process in the set arrives at the barrier and waits for all others to arrive and then all processes leave the barrier. Let the number of processes in the set be three and S be a binary semaphore with the usual P and V functions. Consider the following C implementation of a barrier with line numbers shown on left.

    void barrier (void) {
    1:  P(S);
    2:  process_arrived++;
    3:  V(S);
    4:  while (process_arrived !=3);
    5:  P(S);
    6:  process_left++;
    7:  if (process_left==3) {
    8:      process_arrived = 0;
    9:      process_left = 0;
    10: }
    11: V(S);
    }

Q. The variables process_arrived and process_left are shared among all processes and are initialized to zero. In a concurrent program all the three processes call the barrier function when they need to synchronize globally. The above implementation of barrier is incorrect. Which one of the following is true?

- a) The barrier implementation is wrong due to the use of binary semaphore S

- b) The barrier implementation may lead to a deadlock if two barrier invocations are used in immediate succession.

- c) Lines 6 to 10 need not be inside a critical section

- d) The barrier implementation is correct if there are only two processes instead of three.

Correct answer is option 'B'.

Sanchita Chauhan answered

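Suppose all three processes have arrived, so process_arrived is 3. One process can pass line 4, finish lines 5 to 11 and call barrier() again before the other two have left. It then increments process_arrived to 4. The other two processes, still at line 4, now find process_arrived != 3 and cannot leave, so process_left never reaches 3 and the counters are never reset. Thus process_arrived can become greater than 3 and can never become 3 again, hence deadlock.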
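The problematic schedule can be replayed on a single thread. This sketch is an illustration only: the semaphore S is omitted because the steps run one at a time, the barrier is split into hypothetical helpers `arrive`, `may_pass` and `leave`, and the processes are called A, B and C.

```c
#include <assert.h>
#include <stdbool.h>

static int process_arrived = 0, process_left = 0;

static void arrive(void)   { process_arrived++; }             /* lines 1 to 3 */
static bool may_pass(void) { return process_arrived == 3; }   /* line 4 */
static void leave(void) {                                     /* lines 5 to 11 */
    process_left++;
    if (process_left == 3) {
        process_arrived = 0;
        process_left = 0;
    }
}

/* Replays: A, B and C arrive; A leaves and immediately calls barrier() again
   while B and C have not yet re-tested the condition on line 4. */
bool second_call_wedges_barrier(void) {
    process_arrived = 0;
    process_left = 0;

    arrive(); arrive(); arrive();   /* A, B and C reach line 4 */
    assert(may_pass());             /* process_arrived == 3 */

    leave();                        /* A runs lines 5 to 11: process_left == 1 */
    arrive();                       /* A re-enters: process_arrived == 4 */

    /* B and C (first call) and A (second call) all wait at line 4. Nobody
       can reach line 6, so the reset on lines 8 and 9 never happens. */
    return !may_pass() && process_left < 3;
}
```

`second_call_wedges_barrier()` returns true: with process_arrived stuck at 4, no process can get past line 4 again.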

Barrier is a synchronization construct where a set of processes synchronizes globally i.e. each process in the set arrives at the barrier and waits for all others to arrive and then all processes leave the barrier. Let the number of processes in the set be three and S be a binary semaphore with the usual P and V functions. Consider the following C implementation of a barrier with line numbers shown on left.

    void barrier (void) {
    1:  P(S);
    2:  process_arrived++;
    3:  V(S);
    4:  while (process_arrived !=3);
    5:  P(S);
    6:  process_left++;
    7:  if (process_left==3) {
    8:      process_arrived = 0;
    9:      process_left = 0;
    10: }
    11: V(S);
    }

Q. The variables process_arrived and process_left are shared among all processes and are initialized to zero. In a concurrent program all the three processes call the barrier function when they need to synchronize globally. Which one of the following rectifies the problem in the implementation?

- a) Lines 6 to 10 are simply replaced by process_arrived--

- b) At the beginning of the barrier the first process to enter the barrier waits until process_arrived becomes zero before proceeding to execute P(S).

- c) Context switch is disabled at the beginning of the barrier and re-enabled at the end.

- d) The variable process_left is made private instead of shared

Correct answer is option 'B'.

Hrishikesh Saini answered

Line 2 must not be executed by a process that enters the barrier a second time until the other two processes have completed line 7 of the previous invocation. This prevents process_arrived from becoming greater than 3.

The last process to leave resets both process_arrived and process_left to zero, so once process_arrived is zero the previous invocation is completely finished and the deadlock can no longer occur.

Thus, at the beginning of the barrier the first process to enter the barrier waits until process_arrived becomes zero before proceeding to execute P(S).

Thus, option (B) is correct.
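As a rough check of this reasoning, the sketch below explores every interleaving of three processes that each call the barrier twice, with and without the wait from option (B). It rests on several assumptions that are not in the question: each critical section is modelled as one atomic step, the model itself decides which process is "first to enter" a new round (real code would need its own way to know this), and the names `successors` and `can_get_stuck` are hypothetical.

```python
N, ROUNDS = 3, 2  # three processes, each calling barrier() twice


def successors(state, fixed):
    """Yield every state reachable by letting one process take one step."""
    arrived, left, phases, rounds = state
    for i in range(N):
        if rounds[i] == ROUNDS:
            continue                      # process i has finished all its calls
        a, l, ph, rd = arrived, left, list(phases), list(rounds)
        if ph[i] == "arrive":             # lines 1-3
            if fixed:
                # Assumption: i is "first to enter" if no peer has arrived in its round.
                peer_in = any(j != i and rounds[j] == rounds[i] and phases[j] != "arrive"
                              for j in range(N))
                if arrived != 0 and not peer_in:
                    continue              # option (B): wait until process_arrived == 0
            a += 1
            ph[i] = "spin"
        elif ph[i] == "spin":             # line 4
            if arrived != N:
                continue                  # still busy-waiting
            ph[i] = "leave"
        else:                             # lines 5-11
            l += 1
            if l == N:
                a, l = 0, 0
            ph[i] = "arrive"
            rd[i] += 1
        yield (a, l, tuple(ph), tuple(rd))


def can_get_stuck(fixed):
    """True if some interleaving reaches a state where nobody can ever move again."""
    start = (0, 0, ("arrive",) * N, (0,) * N)
    seen, stack = set(), [start]
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        nxt = list(successors(state, fixed))
        if not nxt and any(r < ROUNDS for r in state[3]):
            return True
        stack.extend(nxt)
    return False


print(can_get_stuck(fixed=False), can_get_stuck(fixed=True))
```

Under these assumptions the original barrier can get stuck, while the variant with the extra wait cannot.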

Suppose we want to synchronize two concurrent processes P and Q using binary semaphores S and T. The code for the processes P and Q is shown below.

Process P:
while (1) {
W:
print '0';
print '0';
X:
}

Process Q:
while (1) {
Y:
print '1';
print '1';
Z:
}

Q. Synchronization statements can be inserted only at points W, X, Y and Z. Which of the following will ensure that the output string never contains a substring of the form 01^n0 or 10^n1 where n is odd?

- a) P(S) at W, V(S) at X, P(T) at Y, V(T) at Z, S and T initially 1

- b) P(S) at W, V(T) at X, P(T) at Y, V(S) at Z, S and T initially 1

- c) P(S) at W, V(S) at X, P(S) at Y, V(S) at Z, S initially 1

- d) V(S) at W, V(T) at X, P(S) at Y, P(T) at Z, S and T initially 1

Correct answer is option 'C'. Can you explain this answer?

Rajveer Chatterjee answered

P(S) means wait on semaphore 'S' and V(S) means signal on semaphore 'S'. These functions are defined as:

Wait(S) {
while (S <= 0) ;   // busy-wait until S is positive
S-- ;
}

Signal(S) {
S++ ;
}

Option (C) uses a single semaphore S, initially 1, with P(S) at W and Y and V(S) at X and Z, so both loop bodies are critical sections guarded by the same semaphore.

Since S = 1, whichever process calls Wait(S) first decrements S to 0 and enters its critical section. The other process is stuck in the while loop of Wait(S) until the first one has printed both of its characters and made S = 1 again by calling V(S).

So each process always prints its pair ('00' or '11') without interruption, and the output is a sequence of '00' and '11' blocks in some order. Every run of 1s between two 0s, and every run of 0s between two 1s, therefore has even length, and a substring 01^n0 or 10^n1 with n odd can never appear.

In the other options processes 'P' and 'Q' do not exclude each other, so an interleaving such as '010' or '101' is possible. Thus, option (C) is correct.
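The four options of this question can also be compared by brute force. The sketch below is only a model, with assumptions stated here and in the comments: every synchronization statement and every print is treated as one atomic step, V on a binary semaphore simply sets it to 1, and only the first six output characters are examined. The names `OPTIONS`, `outputs` and `violates` are made up for illustration.

```python
import re

# For each option: the statement placed at W, X, Y, Z and the initial semaphore values.
OPTIONS = {
    "a": ({"W": "P S", "X": "V S", "Y": "P T", "Z": "V T"}, {"S": 1, "T": 1}),
    "b": ({"W": "P S", "X": "V T", "Y": "P T", "Z": "V S"}, {"S": 1, "T": 1}),
    "c": ({"W": "P S", "X": "V S", "Y": "P S", "Z": "V S"}, {"S": 1}),
    "d": ({"W": "V S", "X": "V T", "Y": "P S", "Z": "P T"}, {"S": 1, "T": 1}),
}

# 0, an odd number of 1s, 0   or   1, an odd number of 0s, 1
BAD = re.compile(r"01(11)*0|10(00)*1")


def outputs(sync, init, length=6):
    """All output strings of the given length reachable under some interleaving."""
    bodies = ([sync["W"], "0", "0", sync["X"]],   # process P
              [sync["Y"], "1", "1", sync["Z"]])   # process Q
    found, seen = set(), set()
    stack = [(tuple(sorted(init.items())), (0, 0), "")]
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        sems, pcs, out = state
        if len(out) == length:
            found.add(out)
            continue
        for i in (0, 1):
            step = bodies[i][pcs[i]]
            vals, new_out = dict(sems), out
            if step in ("0", "1"):
                new_out += step               # a print statement
            else:
                op, name = step.split()
                if op == "P":
                    if vals[name] == 0:
                        continue              # blocked in Wait
                    vals[name] = 0
                else:
                    vals[name] = 1            # binary semaphore: V sets it to 1
            new_pcs = tuple((pc + 1) % 4 if j == i else pc for j, pc in enumerate(pcs))
            stack.append((tuple(sorted(vals.items())), new_pcs, new_out))
    return found


def violates(option):
    sync, init = OPTIONS[option]
    return any(BAD.search(o) for o in outputs(sync, init))


print([name for name in OPTIONS if not violates(name)])
```

Within this model only option (c) avoids the forbidden substrings, which agrees with the stated answer.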

Suppose we want to synchronize two concurrent processes P and Q using binary semaphores S and T. The code for the processes P and Q is shown below.

Process P:
while (1) {
W:
print '0';
print '0';
X:
}

Process Q:
while (1) {
Y:
print '1';
print '1';
Z:
}

Q. Synchronization statements can be inserted only at points W, X, Y and Z. Which of the following will always lead to an output starting with '001100110011'?

- a) P(S) at W, V(S) at X, P(T) at Y, V(T) at Z, S and T initially 1

- b) P(S) at W, V(T) at X, P(T) at Y, V(S) at Z, S initially 1, and T initially 0

- c) P(S) at W, V(T) at X, P(T) at Y, V(S) at Z, S and T initially 1

- d) P(S) at W, V(S) at X, P(T) at Y, V(T) at Z, S initially 1, and T initially 0

Correct answer is option 'B'. Can you explain this answer?

Milan Mukherjee answered

P(S) means wait on semaphore 'S' and V(S) means signal on semaphore 'S'.

Wait(S) {
while (S <= 0) ;
S-- ;
}

Signal(S) {
S++ ;
}

Initially, we assume S = 1 and T = 0, which enforces mutual exclusion and strict alternation between processes P and Q. Since S = 1, only process P can proceed, and Wait(S) decrements S to 0. At the same instant, T = 0, so process Q is stuck in the while loop of Wait(T) until process P prints 00 and increments T by calling V(T). While control is in process Q, S = 0, so process P is stuck in the while loop of Wait(S) until process Q prints 11 and sets S = 1 by calling V(S). This cycle repeats to give the output 00 11 00 11 … .

Thus, B is the correct choice.
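The alternation in option (B) can be tried out with real threads. This is a minimal sketch under a few assumptions: Python's `threading.Semaphore` is a counting semaphore, used here only because the values never exceed 1 in this scheme; the infinite `while (1)` loops are cut to three rounds so the program terminates; and the names `run`, `proc_p` and `proc_q` are made up.

```python
import threading


def run(rounds=3):
    S = threading.Semaphore(1)   # S initially 1: P may start
    T = threading.Semaphore(0)   # T initially 0: Q must wait for P
    out = []

    def proc_p():
        for _ in range(rounds):
            S.acquire()          # P(S) at W
            out.append("0")
            out.append("0")
            T.release()          # V(T) at X

    def proc_q():
        for _ in range(rounds):
            T.acquire()          # P(T) at Y
            out.append("1")
            out.append("1")
            S.release()          # V(S) at Z

    threads = [threading.Thread(target=proc_p), threading.Thread(target=proc_q)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return "".join(out)


print(run())  # 001100110011
```

Because each process can only proceed after the other has signalled, the result is the same on every run, whatever the thread scheduling.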

A shared variable x, initialized to zero, is operated on by four concurrent processes W, X, Y, Z as follows. Each of the processes W and X reads x from memory, increments by one, stores it to memory, and then terminates. Each of the processes Y and Z reads x from memory, decrements by two, stores it to memory, and then terminates. Each process before reading x invokes the P operation (i.e., wait) on a counting semaphore S and invokes the V operation (i.e., signal) on the semaphore S after storing x to memory. Semaphore S is initialized to two. What is the maximum possible value of x after all processes complete execution?

- a) -2

- b) -1

- c) 1

- d) 2

Correct answer is option 'D'. Can you explain this answer?
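One way to see where the value 2 comes from is to enumerate every interleaving. The sketch below assumes that each process performs four atomic steps (P(S), read x, store the new value, V(S)); the names `DELTAS` and `final_values` are hypothetical.

```python
DELTAS = (1, 1, -2, -2)  # W, X add one; Y, Z subtract two


def final_values(sem_init=2):
    """Return the set of values x can have after all four processes finish."""
    results, seen = set(), set()
    # state: (x, S, program counter per process, value read per process)
    stack = [(0, sem_init, (0,) * 4, (None,) * 4)]
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        x, s, pcs, regs = state
        if all(pc == 4 for pc in pcs):
            results.add(x)
            continue
        for i, pc in enumerate(pcs):
            if pc == 4:
                continue                  # process i has terminated
            nx, ns, nregs = x, s, list(regs)
            if pc == 0:                   # P(S)
                if s == 0:
                    continue              # blocked on the semaphore
                ns = s - 1
            elif pc == 1:                 # read x from memory
                nregs[i] = x
            elif pc == 2:                 # store the updated value
                nx = regs[i] + DELTAS[i]
            else:                         # V(S)
                ns = s + 1
            npcs = pcs[:i] + (pc + 1,) + pcs[i + 1:]
            stack.append((nx, ns, npcs, tuple(nregs)))
    return results


values = final_values(2)
print(max(values), min(values))  # 2 -4
print(final_values(1))           # {-2}
```

With S initialized to 2, two processes can be between their read and their store at the same time, so a late store by W can overwrite both decrements before X adds one more. With S initialized to 1 the accesses are fully serialized and the only possible result is -2.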

Pallabi Sharma answered