All Exams >

Computer Science Engineering (CSE) >

Operating System >

All Questions

All questions of CPU Scheduling for Computer Science Engineering (CSE) Exam

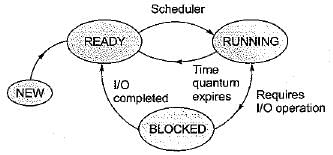

In a time-sharing operating system, when the time slot given to a process is completed, the process goes from the RUNNING state to the- a)BLOCKED state

- b)READY state

- c)SUSPENDED state

- d)TERMINATED state

Correct answer is option 'B'. Can you explain this answer?

In a time-sharing operating system, when the time slot given to a process is completed, the process goes from the RUNNING state to the

a)

BLOCKED state

b)

READY state

c)

SUSPENDED state

d)

TERMINATED state

|

|

Yash Patel answered |

In time-sharing operating system (example in Round-Robin), whenever the time slot given to a process expires, it goes back to READY state and if it requests for same I/O operation, then it goes to BLOCKED state.

Consider three CPU-intensive processes, which require 10, 20 and 30 time units and arrive at times 0, 2 and 6, respectively. How many context switches are needed if the operating system implements a shortest remaining time first scheduling algorithm? Do not count the context switches at time zero and at the end.- a)1

- b)2

- c)3

- d)4

Correct answer is option 'B'. Can you explain this answer?

Consider three CPU-intensive processes, which require 10, 20 and 30 time units and arrive at times 0, 2 and 6, respectively. How many context switches are needed if the operating system implements a shortest remaining time first scheduling algorithm? Do not count the context switches at time zero and at the end.

a)

1

b)

2

c)

3

d)

4

|

Rahul Chatterjee answered |

Explanation:

Shortest remaining time, also known as shortest remaining time first (SRTF), is a scheduling method that is a pre-emptive version of shortest job next scheduling. In this scheduling algorithm, the process with the smallest amount of time remaining until completion is selected to execute. Since the currently executing process is the one with the shortest amount of time remaining by definition, and since that time should only reduce as execution progresses, processes will always run until they complete or a new process is added that requires a smaller amount of time.

Solution:

Let three process be P0, P1 and P2 with arrival times 0, 2 and 6 respectively and CPU burst times 10, 20 and 30 respectively. At time 0, P0 is the only available process so it runs. At time 2, P1 arrives, but P0 has the shortest remaining time, so it continues. At time 6, P2 also arrives, but P0 still has the shortest remaining time, so it continues. At time 10, P1 is scheduled as it is the shortest remaining time process. At time 30, P2 is scheduled. Only two context switches are needed. P0 to P1 and P1 to P2.

A system has 6 identical resources and N processes competing for them. Each process can request atmost 2 resources. Which one of the following values of N could lead to a deadlock?- a)1

- b)2

- c)3

- d)4

Correct answer is option 'D'. Can you explain this answer?

A system has 6 identical resources and N processes competing for them. Each process can request atmost 2 resources. Which one of the following values of N could lead to a deadlock?

a)

1

b)

2

c)

3

d)

4

|

|

Ravi Singh answered |

It seems to be wrong question in GATE exam. Below seems to be the worst case situation. In below situation P1 and P2 can continue as they have 2 resources each

P1 --> 2

P2 --> 2

P3 --> 1

P4 --> 1

P1 --> 2

P2 --> 2

P3 --> 1

P4 --> 1

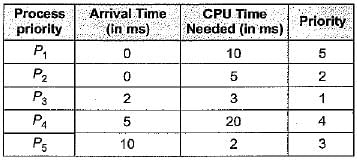

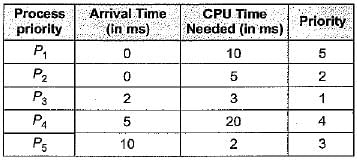

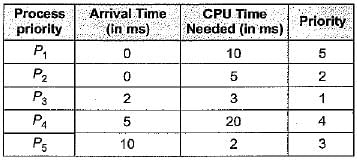

Consider a set of 5 processes whose arrival time, CPU time needed and the priority are given below:

Note: Smaller the number, higher the priority.If the CPU scheduling policy is FCFS, the average waiting time will be - a)12.8 ms

- b)8 ms

- c)16 ms

- d)None of the above

Correct answer is option 'A'. Can you explain this answer?

Consider a set of 5 processes whose arrival time, CPU time needed and the priority are given below:

Note: Smaller the number, higher the priority.

Note: Smaller the number, higher the priority.

If the CPU scheduling policy is FCFS, the average waiting time will be

a)

12.8 ms

b)

8 ms

c)

16 ms

d)

None of the above

|

Crack Gate answered |

According to FCFS process solve are p1 p2 p3 p4 p5 so

For p1 waiting time = 0 process time = 10 then

For p2 waiting time = (process time of p1-arrival time of p2) = 10 - 0 = 10 then

For p3 waiting time = (pr. time of (p1+p2) - arrival time of p3) = (10 + 5) - 2 = 13 and

Same for p4 waiting time = 18 - 5 = 13

Same for p5 waiting time = 38 - 10 = 28

So total average waiting time = (0 + 10 + 13 + 13 + 28) / 5

= 12.8

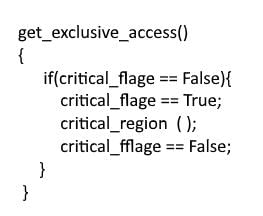

Processes P1 and P2 use critical_flag in the following routine to achieve mutual exclusion. Assume that critical_flag is initialized to FALSE in the main program.

get_exclusive_access ( ) { if (critical _flag == FALSE) { critical_flag = TRUE ; critical_region () ; critical_flag = FALSE; } }Consider the following statements.

i. It is possible for both P1 and P2 to access critical_region concurrently.

ii. This may lead to a deadlock.

Which of the following holds?- a)(i) is false and (ii) is true

- b)Both (i) and (ii) are false

- c)(i) is true and (ii) is false

- d)Both (i) and (ii) are true

Correct answer is option 'C'. Can you explain this answer?

Processes P1 and P2 use critical_flag in the following routine to achieve mutual exclusion. Assume that critical_flag is initialized to FALSE in the main program.

get_exclusive_access ( ) { if (critical _flag == FALSE) { critical_flag = TRUE ; critical_region () ; critical_flag = FALSE; } }

get_exclusive_access ( ) { if (critical _flag == FALSE) { critical_flag = TRUE ; critical_region () ; critical_flag = FALSE; } }

Consider the following statements.

i. It is possible for both P1 and P2 to access critical_region concurrently.

ii. This may lead to a deadlock.

Which of the following holds?

i. It is possible for both P1 and P2 to access critical_region concurrently.

ii. This may lead to a deadlock.

Which of the following holds?

a)

(i) is false and (ii) is true

b)

Both (i) and (ii) are false

c)

(i) is true and (ii) is false

d)

Both (i) and (ii) are true

|

|

Sanya Agarwal answered |

Say P1 starts first and executes statement 1, after that system context switches to P2 (before executing statement 2), and it enters inside if statement, since the flag is still false. So now both processes are in critical section!! so (i) is true.. (ii) is false By no way it happens that flag is true and no process' are inside the if clause, if someone enters the critical section, it will definitely make flag = false. So no deadlock.

Which of the following scheduling algorithms is non-preemptive?- a)Round Robin

- b)First-In First-Out

- c)Multilevel Queue Scheduling

- d)Multilevel Queue Scheduling with Feedback

Correct answer is option 'B'. Can you explain this answer?

Which of the following scheduling algorithms is non-preemptive?

a)

Round Robin

b)

First-In First-Out

c)

Multilevel Queue Scheduling

d)

Multilevel Queue Scheduling with Feedback

|

|

Ravi Singh answered |

Round Robin - Preemption takes place when the time quantum expires First In First Out - No Preemption, the process once started completes before the other process takes over Multi Level Queue Scheduling - Preemption takes place when a process of higher priority arrives Multi Level Queue Scheduling with Feedback - Preemption takes a place when process of higher priority arrives or when the quantum of high priority queue expires and we need to move the process to low priority queue So, B is the correct choice.

A scheduling algorithm assigns priority proportional to the waiting time of a process. Every process starts with priority zero (the lowest priority). The scheduler re-evaluates the process priorities every T time units and decides the next process to schedule. Which one of the following is TRUE if the processes have no I/O operations and all arrive at time zero?- a)This algorithm is equivalent to the first-come-first-serve algorithm

- b)This algorithm is equivalent to the round-robin algorithm.

- c)This algorithm is equivalent to the shortest-job-first algorithm..

- d)This algorithm is equivalent to the shortest-remaining-time-first algorithm

Correct answer is option 'B'. Can you explain this answer?

A scheduling algorithm assigns priority proportional to the waiting time of a process. Every process starts with priority zero (the lowest priority). The scheduler re-evaluates the process priorities every T time units and decides the next process to schedule. Which one of the following is TRUE if the processes have no I/O operations and all arrive at time zero?

a)

This algorithm is equivalent to the first-come-first-serve algorithm

b)

This algorithm is equivalent to the round-robin algorithm.

c)

This algorithm is equivalent to the shortest-job-first algorithm..

d)

This algorithm is equivalent to the shortest-remaining-time-first algorithm

|

|

Tanishq Joshi answered |

The scheduling algorithm works as round robin with quantum time equals to T. After a process's turn comes and it has executed for T units, its waiting time becomes least and its turn comes again after every other process has got the token for T units.

An operating system uses Shortest Remaining Time first (SRT) process scheduling algorithm. Consider the arrival times and execution times for the following processes:Process Execution time Arrival time

P1 20 0

P2 25 15

P3 10 30

P4 15 45Q. What is the total waiting time for process P2?- a)5

- b)15

- c)40

- d)55

Correct answer is option 'B'. Can you explain this answer?

An operating system uses Shortest Remaining Time first (SRT) process scheduling algorithm. Consider the arrival times and execution times for the following processes:

Process Execution time Arrival time

P1 20 0

P2 25 15

P3 10 30

P4 15 45

P1 20 0

P2 25 15

P3 10 30

P4 15 45

Q. What is the total waiting time for process P2?

a)

5

b)

15

c)

40

d)

55

|

|

Nidhi Desai answered |

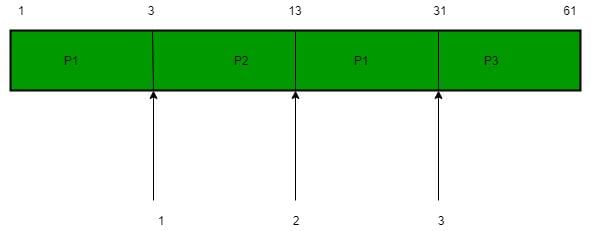

Shortest remaining time, also known as shortest remaining time first (SRTF), is a scheduling method that is a pre-emptive version of shortest job next scheduling. In this scheduling algorithm, the process with the smallest amount of time remaining until completion is selected to execute. Since the currently executing process is the one with the shortest amount of time remaining by definition, and since that time should only reduce as execution progresses, processes will always run until they complete or a new process is added that requires a smaller amount of time. The Gantt chart of execution of processes:

At time 0, P1 is the only process, P1 runs for 15 time units. At time 15, P2 arrives, but P1 has the shortest remaining time. So P1 continues for 5 more time units. At time 20, P2 is the only process. So it runs for 10 time units. at time 30, P3 is the shortest remaining time process. So it runs for 10 time units. at time 40, P2 runs as it is the only process. P2 runs for 5 time units. At time 45, P3 arrives, but P2 has the shortest remaining time. So P2 continues for 10 more time units. P2 completes its execution at time 55.

As we know, turn around time is total time between submission of the process and its completion. Waiting time is the time The amount of time that is taken by a process in ready queue and waiting time is the difference between Turn around time and burst time. Total turnaround time for P2 = Completion time - Arrival time = 55 - 15 = 40 Total Waiting Time for P2= turn around time - Burst time = 40 – 25 = 15

In Round Robin CPU scheduling, as the time quantum is increased, the average turn around time- a)Increases

- b)Decreases

- c)Remains constant

- d)Varies irregularly

Correct answer is option 'D'. Can you explain this answer?

In Round Robin CPU scheduling, as the time quantum is increased, the average turn around time

a)

Increases

b)

Decreases

c)

Remains constant

d)

Varies irregularly

|

|

Tanishq Chakraborty answered |

Explanation:

In Round Robin CPU scheduling, the time quantum is the maximum amount of time a process can run before it is preempted and moved to the back of the ready queue. When the time quantum is increased, it affects the scheduling of the processes and can have an impact on the average turnaround time.

Definition of Average Turnaround Time:

The average turnaround time is the total amount of time it takes for a process to complete, including both the waiting time and the execution time.

Effect of Increasing Time Quantum on Average Turnaround Time:

When the time quantum is increased, the following effects can be observed:

1. Decreased Context Switching: A larger time quantum means that each process is allowed to run for a longer period before being preempted. This reduces the frequency of context switching, which is the overhead involved in switching between processes. As a result, the average turnaround time may decrease.

2. Increased Waiting Time: With a larger time quantum, each process is allowed to run for a longer period. This can lead to an increase in the waiting time for processes that are waiting in the ready queue. As a result, the average turnaround time may increase.

3. Variation in Burst Times: Processes typically have varying burst times, which is the amount of time they require to complete their execution. When the time quantum is increased, processes with shorter burst times may be able to complete their execution within the time quantum, while processes with longer burst times may be preempted multiple times. This can lead to irregular variations in the average turnaround time.

Conclusion:

In conclusion, as the time quantum is increased in Round Robin CPU scheduling, the average turnaround time can vary irregularly. The effects of increasing the time quantum include decreased context switching, increased waiting time, and variations in burst times, which can impact the overall average turnaround time of the processes.

In Round Robin CPU scheduling, the time quantum is the maximum amount of time a process can run before it is preempted and moved to the back of the ready queue. When the time quantum is increased, it affects the scheduling of the processes and can have an impact on the average turnaround time.

Definition of Average Turnaround Time:

The average turnaround time is the total amount of time it takes for a process to complete, including both the waiting time and the execution time.

Effect of Increasing Time Quantum on Average Turnaround Time:

When the time quantum is increased, the following effects can be observed:

1. Decreased Context Switching: A larger time quantum means that each process is allowed to run for a longer period before being preempted. This reduces the frequency of context switching, which is the overhead involved in switching between processes. As a result, the average turnaround time may decrease.

2. Increased Waiting Time: With a larger time quantum, each process is allowed to run for a longer period. This can lead to an increase in the waiting time for processes that are waiting in the ready queue. As a result, the average turnaround time may increase.

3. Variation in Burst Times: Processes typically have varying burst times, which is the amount of time they require to complete their execution. When the time quantum is increased, processes with shorter burst times may be able to complete their execution within the time quantum, while processes with longer burst times may be preempted multiple times. This can lead to irregular variations in the average turnaround time.

Conclusion:

In conclusion, as the time quantum is increased in Round Robin CPU scheduling, the average turnaround time can vary irregularly. The effects of increasing the time quantum include decreased context switching, increased waiting time, and variations in burst times, which can impact the overall average turnaround time of the processes.

Concurrent processes are processes that- a)Do not overlap in time

- b)Overlap in time

- c)Are executed by a processor at the same time

- d)None of the above

Correct answer is option 'B'. Can you explain this answer?

Concurrent processes are processes that

a)

Do not overlap in time

b)

Overlap in time

c)

Are executed by a processor at the same time

d)

None of the above

|

|

Roshni Kumar answered |

Concurrent processes are processes that share the CPU and memory. They do overlap in time while execution. At a time CPU entertain only one process but it can switch to other without completing it as a whole.

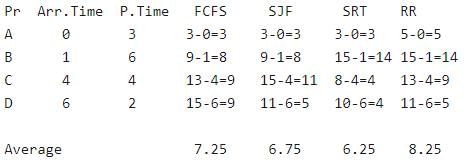

For the processes listed in the following table, which of the following scheduling schemes will give the lowest average turnaround time?Process Arrival Time Processing Time

A 0 3

B 1 6

C 4 4

D 6 2- a)First Come First Serve

- b)Non-preemptive Shortest Job First

- c)Shortest Remaining Time

- d)Round Robin with Quantum value two

Correct answer is option 'C'. Can you explain this answer?

For the processes listed in the following table, which of the following scheduling schemes will give the lowest average turnaround time?

Process Arrival Time Processing Time

A 0 3

B 1 6

C 4 4

D 6 2

A 0 3

B 1 6

C 4 4

D 6 2

a)

First Come First Serve

b)

Non-preemptive Shortest Job First

c)

Shortest Remaining Time

d)

Round Robin with Quantum value two

|

|

Sanya Agarwal answered |

Turnaround time is the total time taken between the submission of a program/process/thread/task (Linux) for execution and the return of the complete output to the customer/user. Turnaround Time = Completion Time - Arrival Time. FCFS = First Come First Serve (A, B, C, D) SJF = Non-preemptive Shortest Job First (A, B, D, C) SRT = Shortest Remaining Time (A(3), B(1), C(4), D(2), B(5)) RR = Round Robin with Quantum value 2 (A(2), B(2), A(1),C(2),B(2),D(2),C(2),B(2)

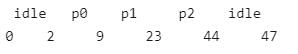

Consider three processes, all arriving at time zero, with total execution time of 10, 20 and 30 units, respectively. Each process spends the first 20% of execution time doing I/O, the next 70% of time doing computation, and the last 10% of time doing I/O again. The operating system uses a shortest remaining compute time first scheduling algorithm and schedules a new process either when the running process gets blocked on I/O or when the running process finishes its compute burst. Assume that all I/O operations can be overlapped as much as possible. For what percentage of time does the CPU remain idle?- a)0%

- b)10.6%

- c)30.0%

- d)89.4%

Correct answer is option 'B'. Can you explain this answer?

Consider three processes, all arriving at time zero, with total execution time of 10, 20 and 30 units, respectively. Each process spends the first 20% of execution time doing I/O, the next 70% of time doing computation, and the last 10% of time doing I/O again. The operating system uses a shortest remaining compute time first scheduling algorithm and schedules a new process either when the running process gets blocked on I/O or when the running process finishes its compute burst. Assume that all I/O operations can be overlapped as much as possible. For what percentage of time does the CPU remain idle?

a)

0%

b)

10.6%

c)

30.0%

d)

89.4%

|

|

Ravi Singh answered |

Let three processes be p0, p1 and p2. Their execution time is 10, 20 and 30 respectively. p0 spends first 2 time units in I/O, 7 units of CPU time and finally 1 unit in I/O. p1 spends first 4 units in I/O, 14 units of CPU time and finally 2 units in I/O. p2 spends first 6 units in I/O, 21 units of CPU time and finally 3 units in I/O.

Total time spent = 47 Idle time = 2 + 3 = 5 Percentage of idle time = (5/47)*100 = 10.6 %

Which of the following statements are true?I. Shortest remaining time first scheduling may cause starvation

II. Preemptive scheduling may cause starvation

III. Round robin is better than FCFS in terms of response time- a)I only

- b)I and III only

- c)II and III only

- d)I, II and III

Correct answer is option 'D'. Can you explain this answer?

Which of the following statements are true?

I. Shortest remaining time first scheduling may cause starvation

II. Preemptive scheduling may cause starvation

III. Round robin is better than FCFS in terms of response time

II. Preemptive scheduling may cause starvation

III. Round robin is better than FCFS in terms of response time

a)

I only

b)

I and III only

c)

II and III only

d)

I, II and III

|

|

Shubham Ghoshal answered |

I) Shortest remaining time first scheduling is a pre-emptive version of shortest job scheduling. In SRTF, job with the shortest CPU burst will be scheduled first. Because of this process, It may cause starvation as shorter processes may keep coming and a long CPU burst process never gets CPU.

II) Pre-emptive just means a process before completing its execution is stopped and other process can start execution. The stopped process can later come back and continue from where it was stopped. In pre-emptive scheduling, suppose process P1 is executing in CPU and after some time process P2 with high priority then P1 will arrive in ready queue then p1 is pre-empted and p2 will brought into CPU for execution. In this way if process which is arriving in ready queue is of higher priority then p1, then p1 is always pre-empted and it may possible that it suffer from starvation.

III) round robin will give better response time then FCFS ,in FCFS when process is executing ,it executed up to its complete burst time, but in round robin it will execute up to time quantum. So Round Robin Scheduling improves response time as all processes get CPU after a specified time. So, I,II,III are true which is option (D). Option (D) is correct answer.

II) Pre-emptive just means a process before completing its execution is stopped and other process can start execution. The stopped process can later come back and continue from where it was stopped. In pre-emptive scheduling, suppose process P1 is executing in CPU and after some time process P2 with high priority then P1 will arrive in ready queue then p1 is pre-empted and p2 will brought into CPU for execution. In this way if process which is arriving in ready queue is of higher priority then p1, then p1 is always pre-empted and it may possible that it suffer from starvation.

III) round robin will give better response time then FCFS ,in FCFS when process is executing ,it executed up to its complete burst time, but in round robin it will execute up to time quantum. So Round Robin Scheduling improves response time as all processes get CPU after a specified time. So, I,II,III are true which is option (D). Option (D) is correct answer.

Consider a uniprocessor system executing three tasks T1, T2 and T3, each of which is composed of an infinite sequence of jobs (or instances) which arrive periodically at intervals of 3, 7 and 20 milliseconds, respectively. The priority of each task is the inverse of its period and the available tasks are scheduled in order of priority, with the highest priority task scheduled first. Each instance of T1, T2 and T3 requires an execution time of 1, 2 and 4 milliseconds, respectively. Given that all tasks initially arrive at the beginning of the 1st milliseconds and task preemptions are allowed, the first instance of T3 completes its execution at the end of ______________ milliseconds.- a)5

- b)10

- c)12

- d)15

Correct answer is option 'C'. Can you explain this answer?

Consider a uniprocessor system executing three tasks T1, T2 and T3, each of which is composed of an infinite sequence of jobs (or instances) which arrive periodically at intervals of 3, 7 and 20 milliseconds, respectively. The priority of each task is the inverse of its period and the available tasks are scheduled in order of priority, with the highest priority task scheduled first. Each instance of T1, T2 and T3 requires an execution time of 1, 2 and 4 milliseconds, respectively. Given that all tasks initially arrive at the beginning of the 1st milliseconds and task preemptions are allowed, the first instance of T3 completes its execution at the end of ______________ milliseconds.

a)

5

b)

10

c)

12

d)

15

|

|

Yash Patel answered |

Periods of T1, T2 and T3 are 3ms, 7ms and 20ms. Since priority is inverse of period, T1 is the highest priority task, then T2 and finally T3 Every instance of T1 requires 1ms, that of T2 requires 2ms and that of T3 requires 4ms Initially all T1, T2 and T3 are ready to get processor, T1 is preferred Second instances of T1, T2, and T3 shall arrive at 3, 7, and 20 respectively. Third instance of T1, T2 and T3 shall arrive at 6, 14, and 40 respectively.

Time-Interval Tasks

0-1 T1

1-2 T2

2-3 T2

3-4 T1 [Second Instance of T1 arrives]

4-5 T3

5-6 T3

6-7 T1 [Third Instance of T1 arrives]

Time-Interval Tasks

0-1 T1

1-2 T2

2-3 T2

3-4 T1 [Second Instance of T1 arrives]

4-5 T3

5-6 T3

6-7 T1 [Third Instance of T1 arrives]

[Therefore T3 is preempted]

7-8 T2 [Second instance of T2 arrives]

8-9 T2

9-10 T1 [Fourth Instance of T1 arrives]

7-8 T2 [Second instance of T2 arrives]

8-9 T2

9-10 T1 [Fourth Instance of T1 arrives]

10-11 T3

11-12 T3 [First Instance of T3 completed]

11-12 T3 [First Instance of T3 completed]

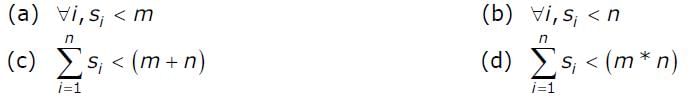

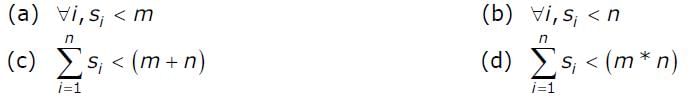

Suppose n processes, P1, …. Pn share m identical resource units, which can be reserved and released one at a time. The maximum resource requirement of process Pi is Si, where Si > 0. Which one of the following is a sufficient condition for ensuring that deadlock does not occur?

- a)A

- b)B

- c)C

- d)D

Correct answer is option 'C'. Can you explain this answer?

Suppose n processes, P1, …. Pn share m identical resource units, which can be reserved and released one at a time. The maximum resource requirement of process Pi is Si, where Si > 0. Which one of the following is a sufficient condition for ensuring that deadlock does not occur?

a)

A

b)

B

c)

C

d)

D

|

Cstoppers Instructors answered |

In the extreme condition, all processes acquire Si-1 resources and need 1 more resource. So following condition must be true to make sure that deadlock never occurs.

< m The above expression can be written as following.

< (m + n)

Which of the following algorithms favour CPU bound processes?

1. Round-robin

2. Rirst-come-first-served

3. Multilevel feedback queues- a)1 only

- b)2 only

- c)1 and 2 only

- d)1 and 3 only

Correct answer is option 'B'. Can you explain this answer?

Which of the following algorithms favour CPU bound processes?

1. Round-robin

2. Rirst-come-first-served

3. Multilevel feedback queues

1. Round-robin

2. Rirst-come-first-served

3. Multilevel feedback queues

a)

1 only

b)

2 only

c)

1 and 2 only

d)

1 and 3 only

|

|

Saanvi Bajaj answered |

Only FCFS and non-preempting algorithms favour CPU bound processes.

An operating system uses shortest remaining time first scheduling algorithm for pre-emptive scheduling of processes. Consider the following set of processes with their arrival times and CPU burst times (in milliseconds):Process Arrival Time Burst Time

P1 0 12

P2 2 4

P3 3 6

P4 8 5The average waiting time (in milliseconds) of the processes is _________.- a)4.5

- b)5.0

- c)5.5

- d)6.5

Correct answer is option 'C'. Can you explain this answer?

An operating system uses shortest remaining time first scheduling algorithm for pre-emptive scheduling of processes. Consider the following set of processes with their arrival times and CPU burst times (in milliseconds):

Process Arrival Time Burst Time

P1 0 12

P2 2 4

P3 3 6

P4 8 5

P1 0 12

P2 2 4

P3 3 6

P4 8 5

The average waiting time (in milliseconds) of the processes is _________.

a)

4.5

b)

5.0

c)

5.5

d)

6.5

|

|

Ravi Singh answered |

Process Arrival Time Burst Time

P1 0 12

P2 2 4

P3 3 6

P4 8 5

P1 0 12

P2 2 4

P3 3 6

P4 8 5

Burst Time - The total time needed by a process from the CPU for its complete execution. Waiting Time - How much time processes spend in the ready queue waiting their turn to get on the CPU Now, The Gantt chart for the above processes is :

P1 - 0 to 2 milliseconds

P2 - 2 to 6 milliseconds

P3 - 6 to 12 milliseconds

P4 - 12 to 17 milliseconds

P1 - 17 to 27 milliseconds

Process p1 arrived at time 0, hence cpu started executing it. After 2 units of time P2 arrives and burst time of P2 was 4 units, and the remaining time of the process p1 was 10 units,hence cpu started executing P2, putting P1 in waiting state(Pre-emptive and Shortest remaining time first scheduling). Due to P1's highest remaining time it was executed by the cpu in the end.

Now calculating the waiting time of each process:

P1 -> 17 -2 = 15

P2 -> 0

P3 -> 6 - 3 = 3

P4 -> 12 - 8 = 4

P1 -> 17 -2 = 15

P2 -> 0

P3 -> 6 - 3 = 3

P4 -> 12 - 8 = 4

Hence total waiting time of all the processes is

= 15+0+3+4=22

Total no of processes = 4

Average waiting time = 22 / 4 = 5.5

Hence C is the answer.

= 15+0+3+4=22

Total no of processes = 4

Average waiting time = 22 / 4 = 5.5

Hence C is the answer.

Which of the following is not true with respect to deadlock prevention and deadlock avoidance schemes?- a)In deadlock prevention, the request for resources is always granted if resulting state is safe

- b)In deadlock avoidance, the request for resources is always granted, if the resulting state is safe

- c)Deadlock avoidance requires knowledge of resource requirements a priori

- d)Deadlock prevention is more restrictive than deadlock avoidance

Correct answer is option 'A'. Can you explain this answer?

Which of the following is not true with respect to deadlock prevention and deadlock avoidance schemes?

a)

In deadlock prevention, the request for resources is always granted if resulting state is safe

b)

In deadlock avoidance, the request for resources is always granted, if the resulting state is safe

c)

Deadlock avoidance requires knowledge of resource requirements a priori

d)

Deadlock prevention is more restrictive than deadlock avoidance

|

Cstoppers Instructors answered |

Deadlock Prevention: Deadlocks can be prevented by preventing at least one of the four required

conditions:

1. Mutual Exclusion – not required for sharable resources; must hold for non-sharable resources.

2. Hold and Wait – must guarantee that whenever a process requests a resource, it does not hold any other resources. Require process to request and be allocated all its sources before it begins execution, or allow process to request resources only when the process has none. Low resource utilization; starvation possible. Restrain the ways request can be made.

3. No Pre-emption – If a process that is holding some resources requests another resource that cannot be immediately allocated to it, and then all resources currently being held are released. Pre-empted resources are added to the list of resources for which the process is waiting. Process will be restarted only when it can regain its old resources, as well as the new ones that it is requesting.

4. Circular Wait – impose a total ordering of all resource types, and require that each process requests resources in an increasing order of enumeration.

Which of the following is FALSE about SJF (Shortest Job First Scheduling)?

S1: It causes minimum average waiting time

S2: It can cause starvation- a)Only S1

- b)Only S2

- c)Both S1 and S2

- d)Neither S1 nor S2

Correct answer is option 'D'. Can you explain this answer?

Which of the following is FALSE about SJF (Shortest Job First Scheduling)?

S1: It causes minimum average waiting time

S2: It can cause starvation

S1: It causes minimum average waiting time

S2: It can cause starvation

a)

Only S1

b)

Only S2

c)

Both S1 and S2

d)

Neither S1 nor S2

|

Riverdale Learning Institute answered |

- Both SJF and Shortest Remaining time first algorithms may cause starvation. Consider a situation when long process is there in ready queue and shorter processes keep coming.

- SJF is optimal in terms of average waiting time for a given set of processes, but problems with SJF is how to know/predict time of next job.

Consider a system with 4 types of resources R1 (3 units), R2 (2 units), R3 (3 units), R4 (2 units). A non-preemptive resource allocation policy is used. At any given instance, a request is not entertained if it cannot be completely satisfied. Three processes P1, P2, P3 request the sources as follows if executed independently.Process P1:

t=0: requests 2 units of R2

t=1: requests 1 unit of R3

t=3: requests 2 units of R1

t=5: releases 1 unit of R2

and 1 unit of R1.

t=7: releases 1 unit of R3

t=8: requests 2 units of R4

t=10: FinishesProcess P2:

t=0: requests 2 units of R3

t=2: requests 1 unit of R4

t=4: requests 1 unit of R1

t=6: releases 1 unit of R3

t=8: FinishesProcess P3:

t=0: requests 1 unit of R4

t=2: requests 2 units of R1

t=5: releases 2 units of R1

t=7: requests 1 unit of R2

t=8: requests 1 unit of R3

t=9: FinishesWhich one of the following statements is TRUE if all three processes run concurrently starting at time t=0? - a)All processes will finish without any deadlock

- b)Only P1 and P2 will be in deadlock.

- c)Only P1 and P3 will be in a deadlock.

- d)All three processes will be in deadlock

Correct answer is option 'A'. Can you explain this answer?

Consider a system with 4 types of resources R1 (3 units), R2 (2 units), R3 (3 units), R4 (2 units). A non-preemptive resource allocation policy is used. At any given instance, a request is not entertained if it cannot be completely satisfied. Three processes P1, P2, P3 request the sources as follows if executed independently.

Process P1:

t=0: requests 2 units of R2

t=1: requests 1 unit of R3

t=3: requests 2 units of R1

t=5: releases 1 unit of R2

and 1 unit of R1.

t=7: releases 1 unit of R3

t=8: requests 2 units of R4

t=10: Finishes

t=0: requests 2 units of R2

t=1: requests 1 unit of R3

t=3: requests 2 units of R1

t=5: releases 1 unit of R2

and 1 unit of R1.

t=7: releases 1 unit of R3

t=8: requests 2 units of R4

t=10: Finishes

Process P2:

t=0: requests 2 units of R3

t=2: requests 1 unit of R4

t=4: requests 1 unit of R1

t=6: releases 1 unit of R3

t=8: Finishes

t=0: requests 2 units of R3

t=2: requests 1 unit of R4

t=4: requests 1 unit of R1

t=6: releases 1 unit of R3

t=8: Finishes

Process P3:

t=0: requests 1 unit of R4

t=2: requests 2 units of R1

t=5: releases 2 units of R1

t=7: requests 1 unit of R2

t=8: requests 1 unit of R3

t=9: Finishes

t=0: requests 1 unit of R4

t=2: requests 2 units of R1

t=5: releases 2 units of R1

t=7: requests 1 unit of R2

t=8: requests 1 unit of R3

t=9: Finishes

Which one of the following statements is TRUE if all three processes run concurrently starting at time t=0?

a)

All processes will finish without any deadlock

b)

Only P1 and P2 will be in deadlock.

c)

Only P1 and P3 will be in a deadlock.

d)

All three processes will be in deadlock

|

|

Ravi Singh answered |

We can apply the following Deadlock Detection algorithm and see that there is no process waiting indefinitely for a resource.

The maximum number of processes that can be in Ready state for a computer system with n CPUs is- a)n

- b)n2

- c)2n

- d)Independent of n

Correct answer is option 'D'. Can you explain this answer?

The maximum number of processes that can be in Ready state for a computer system with n CPUs is

a)

n

b)

n2

c)

2n

d)

Independent of n

|

|

Sanya Agarwal answered |

The size of ready queue doesn't depend on number of processes. A single processor system may have a large number of processes waiting in ready queue.

Consider three CPU intensive processes P1, P2, P3 which require 20, 10 and 30 units of time, arrive at times 1, 3 and 7 respectively. Suppose operating system is implementing Shortest Remaining Time first (preemptive scheduling) algorithm, then _____ context switches are required (suppose context switch at the beginning of Ready queue and at the end of Ready queue are not counted).- a)3

- b)2

- c)4

- d)5

Correct answer is option 'A'. Can you explain this answer?

Consider three CPU intensive processes P1, P2, P3 which require 20, 10 and 30 units of time, arrive at times 1, 3 and 7 respectively. Suppose operating system is implementing Shortest Remaining Time first (preemptive scheduling) algorithm, then _____ context switches are required (suppose context switch at the beginning of Ready queue and at the end of Ready queue are not counted).

a)

3

b)

2

c)

4

d)

5

|

|

Sanya Agarwal answered |

There are 3 context switch. So. option (A) is correct.

Consider three processes (process id 0, 1, 2 respectively) with compute time bursts 2, 4 and 8 time units. All processes arrive at time zero. Consider the longest remaining time first (LRTF) scheduling algorithm. In LRTF ties are broken by giving priority to the process with the lowest process id. The average turn around time is:- a)13 units

- b)14 units

- c)15 units

- d)16 units

Correct answer is option 'A'. Can you explain this answer?

Consider three processes (process id 0, 1, 2 respectively) with compute time bursts 2, 4 and 8 time units. All processes arrive at time zero. Consider the longest remaining time first (LRTF) scheduling algorithm. In LRTF ties are broken by giving priority to the process with the lowest process id. The average turn around time is:

a)

13 units

b)

14 units

c)

15 units

d)

16 units

|

|

Ravi Singh answered |

Let the processes be p0, p1 and p2. These processes will be executed in following order.

Turn around time of a process is total time between submission of the process and its completion. Turn around time of p0 = 12 (12-0) Turn around time of p1 = 13 (13-0) Turn around time of p2 = 14 (14-0) Average turn around time is (12+13+14)/3 = 13.

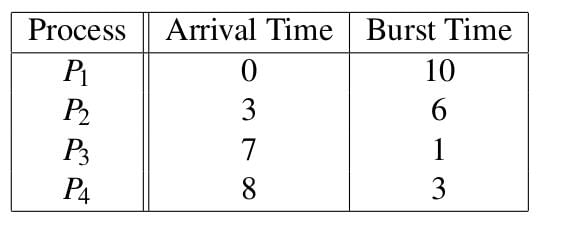

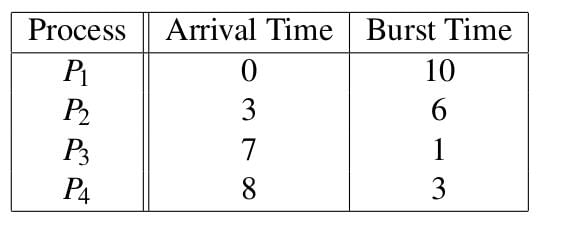

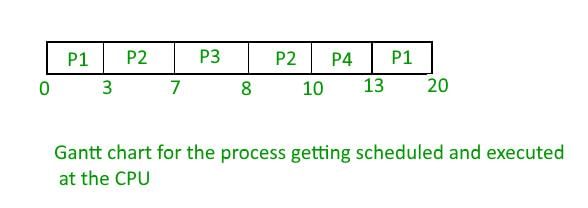

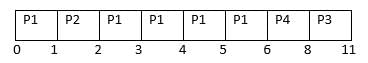

Consider the following processes, with the arrival time and the length of the CPU burst given in milliseconds. The scheduling algorithm used is preemptive shortest remaining-time first. The average turn around time of these processes is ___________ milliseconds. Note : This question was asked as Numerical Answer Type.

The average turn around time of these processes is ___________ milliseconds. Note : This question was asked as Numerical Answer Type.- a)8.25

- b)10.25

- c)6.35

- d)4.25

Correct answer is option 'A'. Can you explain this answer?

Consider the following processes, with the arrival time and the length of the CPU burst given in milliseconds. The scheduling algorithm used is preemptive shortest remaining-time first.

The average turn around time of these processes is ___________ milliseconds. Note : This question was asked as Numerical Answer Type.

a)

8.25

b)

10.25

c)

6.35

d)

4.25

|

|

Ravi Singh answered |

PreEmptive Shortest Remaining time first scheduling, i.e. that processes will be scheduled on the CPU which will be having least remaining burst time( required time at the CPU). The processes are scheduled and executed as given in the below Gantt chart.

Turn Around Time(TAT) = Completion Time(CT) - Arrival Time(AT) TAT for P1 = 20 - 0 = 20 TAT for P2 = 10 - 3 = 7 TAT for P3 = 8- 7 = 1 TAT for P4 = 13 - 8 = 5 Hence, Average TAT = Total TAT of all the processes / no of processes = ( 20 + 7 + 1 + 5 ) / 4 = 33 / 4 = 8.25 Thus, A is the correct choice.

In a multi-user operating system, 20 requests are made to use a particular resource per hour, on an average. The probability that no requests are made in 45 minutes is- a)e-15

- b)e-5

- c)1 - e-5

- d)1 - e-10

Correct answer is option 'A'. Can you explain this answer?

In a multi-user operating system, 20 requests are made to use a particular resource per hour, on an average. The probability that no requests are made in 45 minutes is

a)

e-15

b)

e-5

c)

1 - e-5

d)

1 - e-10

|

|

Arpita Gupta answered |

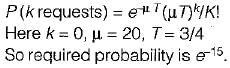

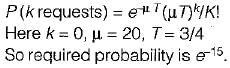

The arrival pattern is a Poission distribution.

Suppose that a process is in ‘BLOCKED’ state waiting for some I/O service. When the service is completed, it goes to the- a)RUNNING state

- b)READY state

- c)SUSPENDED state

- d)TERMINATED state

Correct answer is option 'B'. Can you explain this answer?

Suppose that a process is in ‘BLOCKED’ state waiting for some I/O service. When the service is completed, it goes to the

a)

RUNNING state

b)

READY state

c)

SUSPENDED state

d)

TERMINATED state

|

|

Shivam Dasgupta answered |

When process in blocked state waiting for some I/O services, whenever the services is completed it goes in the ready queue of the ready state.

In which of the following scheduling policies doe context switching never take place?

1. Round-robin

2. Shortest job first(non pre-emptive)

3. Pre-emptive

4. First-cum-first-served- a)4 only

- b)1 and 3

- c)2 and 3

- d)2 and 4

Correct answer is option 'D'. Can you explain this answer?

In which of the following scheduling policies doe context switching never take place?

1. Round-robin

2. Shortest job first(non pre-emptive)

3. Pre-emptive

4. First-cum-first-served

1. Round-robin

2. Shortest job first(non pre-emptive)

3. Pre-emptive

4. First-cum-first-served

a)

4 only

b)

1 and 3

c)

2 and 3

d)

2 and 4

|

|

Mahi Datta answered |

Explanation:

In the given options, the scheduling policies are as follows:

1. Round-robin: In round-robin scheduling, each process is given a fixed time quantum to execute. When a time quantum expires, the currently executing process is preempted and moved to the end of the ready queue. This policy involves context switching as the processor switches between processes at regular intervals.

2. Shortest job first (non pre-emptive): In shortest job first scheduling, the process with the shortest burst time is executed first. Once a process starts execution, it is not preempted until it completes. Since there is no preemption, context switching does not occur in this policy.

3. Preemptive: Preemptive scheduling policies allow processes to be interrupted and moved out of the running state before they complete. This is usually based on priority or time quantum. Context switching is an essential part of preemptive scheduling as it involves moving the running process out and bringing another process in.

4. First-come-first-served: In first-come-first-served scheduling, the processes are executed in the order they arrive in the ready queue. Once a process starts execution, it is not preempted until it completes. Similar to shortest job first, there is no preemption in this policy, and hence, context switching does not occur.

Conclusion:

Considering the above explanations, the scheduling policies where context switching never takes place are:

- Shortest job first (non pre-emptive) - Option 2

- First-come-first-served - Option 4

Therefore, the correct answer is option 'D' - 2 and 4.

In the given options, the scheduling policies are as follows:

1. Round-robin: In round-robin scheduling, each process is given a fixed time quantum to execute. When a time quantum expires, the currently executing process is preempted and moved to the end of the ready queue. This policy involves context switching as the processor switches between processes at regular intervals.

2. Shortest job first (non pre-emptive): In shortest job first scheduling, the process with the shortest burst time is executed first. Once a process starts execution, it is not preempted until it completes. Since there is no preemption, context switching does not occur in this policy.

3. Preemptive: Preemptive scheduling policies allow processes to be interrupted and moved out of the running state before they complete. This is usually based on priority or time quantum. Context switching is an essential part of preemptive scheduling as it involves moving the running process out and bringing another process in.

4. First-come-first-served: In first-come-first-served scheduling, the processes are executed in the order they arrive in the ready queue. Once a process starts execution, it is not preempted until it completes. Similar to shortest job first, there is no preemption in this policy, and hence, context switching does not occur.

Conclusion:

Considering the above explanations, the scheduling policies where context switching never takes place are:

- Shortest job first (non pre-emptive) - Option 2

- First-come-first-served - Option 4

Therefore, the correct answer is option 'D' - 2 and 4.

Which of the following are real-time systems?

1. An on-line railway reservation system ,

2. A process control system

3. Aircraft control system

4. Payroll processing system- a)1 and 2

- b)3 and 4

- c)2 and 3

- d)1,2, 3 and 4

Correct answer is option 'C'. Can you explain this answer?

Which of the following are real-time systems?

1. An on-line railway reservation system ,

2. A process control system

3. Aircraft control system

4. Payroll processing system

1. An on-line railway reservation system ,

2. A process control system

3. Aircraft control system

4. Payroll processing system

a)

1 and 2

b)

3 and 4

c)

2 and 3

d)

1,2, 3 and 4

|

|

Nabanita Basak answered |

Real-time system are very fast and quick respondents systems. Response time of such systems is very low, to the tune of 10 ms or 100 ms or even less.

- Such systems are used where real-life scenario are being implemented like missile system, aircraft system extra.

- In process control system, the time quantum in reality is very less (to give a feel of multiprocessing so real time system is needed.

Which of the following is an example of a SPOOLED device?- a)The terminal used to enter the input data for a program being executed.

- b)The secondary memory device in a virtual memory system.

- c)A line printer used to print the output of a no. of Jobs.

- d)One tries to divide a number by 0.

Correct answer is option 'C'. Can you explain this answer?

Which of the following is an example of a SPOOLED device?

a)

The terminal used to enter the input data for a program being executed.

b)

The secondary memory device in a virtual memory system.

c)

A line printer used to print the output of a no. of Jobs.

d)

One tries to divide a number by 0.

|

|

Malavika Banerjee answered |

Spool means simultaneous peri-pheral operations on line, a printer is a spooling device.

Consider the following statements with respect to user-level threads and Kernel-supported threads

(i) Context switching is faster with Kernel- supported threads.

(ii) For user-level threads, a system call can block the entire process.

(iii) Kernel-supported threads can be scheduled independently.

(iv) User-level threads are transparent to the Kernel.

Which of the above statements are true?- a)(ii), (iii), (iv) only

- b)(ii) and (iii) only

- c)(i) and (iii) only

- d)(i) and (ii) only

Correct answer is option 'B'. Can you explain this answer?

Consider the following statements with respect to user-level threads and Kernel-supported threads

(i) Context switching is faster with Kernel- supported threads.

(ii) For user-level threads, a system call can block the entire process.

(iii) Kernel-supported threads can be scheduled independently.

(iv) User-level threads are transparent to the Kernel.

Which of the above statements are true?

(i) Context switching is faster with Kernel- supported threads.

(ii) For user-level threads, a system call can block the entire process.

(iii) Kernel-supported threads can be scheduled independently.

(iv) User-level threads are transparent to the Kernel.

Which of the above statements are true?

a)

(ii), (iii), (iv) only

b)

(ii) and (iii) only

c)

(i) and (iii) only

d)

(i) and (ii) only

|

|

Suyash Chauhan answered |

Kernel level threads can be scheduled independently. For user level threads a system call can block the entire process and are not transparent to Kernel.

A critical section is a program segment- a)which should run in a certain specified amount of time

- b)which avoids deadlocks

- c)where shared resources are accessed

- d)which must be enclosed by a pair of semaphore operations, P and V

Correct answer is option 'C'. Can you explain this answer?

A critical section is a program segment

a)

which should run in a certain specified amount of time

b)

which avoids deadlocks

c)

where shared resources are accessed

d)

which must be enclosed by a pair of semaphore operations, P and V

|

|

Anshu Mehta answered |

A critical section is a specific section of a program where shared resources are accessed and modified by multiple threads or processes. It is important to ensure that only one thread or process can access the critical section at a time to avoid data inconsistencies or conflicts.

Shared Resources:

In a multi-threaded or multi-process environment, multiple threads or processes may need to access certain resources, such as variables, files, or devices. These resources are typically shared among the threads or processes. However, when multiple threads or processes try to access and modify the shared resources simultaneously, it can lead to data inconsistencies or conflicts.

Need for Synchronization:

To avoid such issues, synchronization mechanisms are used to control the access to shared resources. A critical section is a part of the program where shared resources are accessed, and it needs to be synchronized to ensure that only one thread or process can access it at a time.

Avoiding Data Inconsistencies:

By ensuring that only one thread or process can access the critical section at a time, we can prevent data inconsistencies. For example, if two threads try to increment a shared variable simultaneously, they may both read the variable's value, increment it, and write it back, resulting in a lost update. By synchronizing the critical section, we can ensure that only one thread increments the variable at a time, avoiding data inconsistencies.

Using Semaphores:

One way to implement synchronization in a critical section is by using semaphores. Semaphores are variables that can be used for signaling and controlling access to shared resources. In many cases, a pair of semaphore operations, usually referred to as P and V, are used to control access to the critical section.

The P operation, also known as wait or acquire, is used to acquire the semaphore and enter the critical section. If the semaphore value is greater than zero, it is decremented, and the thread or process can proceed to access the critical section. If the semaphore value is zero, the thread or process is blocked until the semaphore becomes available.

The V operation, also known as signal or release, is used to release the semaphore and exit the critical section. It increments the semaphore value, allowing another thread or process to acquire it and enter the critical section.

Conclusion:

In summary, a critical section is a program segment where shared resources are accessed and modified. It is important to synchronize the access to the critical section to avoid data inconsistencies or conflicts. Semaphore operations, such as P and V, can be used to control access to the critical section and ensure that only one thread or process can access it at a time.

Shared Resources:

In a multi-threaded or multi-process environment, multiple threads or processes may need to access certain resources, such as variables, files, or devices. These resources are typically shared among the threads or processes. However, when multiple threads or processes try to access and modify the shared resources simultaneously, it can lead to data inconsistencies or conflicts.

Need for Synchronization:

To avoid such issues, synchronization mechanisms are used to control the access to shared resources. A critical section is a part of the program where shared resources are accessed, and it needs to be synchronized to ensure that only one thread or process can access it at a time.

Avoiding Data Inconsistencies:

By ensuring that only one thread or process can access the critical section at a time, we can prevent data inconsistencies. For example, if two threads try to increment a shared variable simultaneously, they may both read the variable's value, increment it, and write it back, resulting in a lost update. By synchronizing the critical section, we can ensure that only one thread increments the variable at a time, avoiding data inconsistencies.

Using Semaphores:

One way to implement synchronization in a critical section is by using semaphores. Semaphores are variables that can be used for signaling and controlling access to shared resources. In many cases, a pair of semaphore operations, usually referred to as P and V, are used to control access to the critical section.

The P operation, also known as wait or acquire, is used to acquire the semaphore and enter the critical section. If the semaphore value is greater than zero, it is decremented, and the thread or process can proceed to access the critical section. If the semaphore value is zero, the thread or process is blocked until the semaphore becomes available.

The V operation, also known as signal or release, is used to release the semaphore and exit the critical section. It increments the semaphore value, allowing another thread or process to acquire it and enter the critical section.

Conclusion:

In summary, a critical section is a program segment where shared resources are accessed and modified. It is important to synchronize the access to the critical section to avoid data inconsistencies or conflicts. Semaphore operations, such as P and V, can be used to control access to the critical section and ensure that only one thread or process can access it at a time.

Which of the following scheduling algorithms is non-preemptive?- a)Round Robin

- b)First-In First-Out

- c)Multilevel Queue Scheduling

- d)Multilevel Queue Scheduling with Feedback

Correct answer is option 'B'. Can you explain this answer?

Which of the following scheduling algorithms is non-preemptive?

a)

Round Robin

b)

First-In First-Out

c)

Multilevel Queue Scheduling

d)

Multilevel Queue Scheduling with Feedback

|

|

Preethi Iyer answered |

Round Robin - Preemption takes place when the time quantum expires First In First Out - No Preemption, the process once started completes before the other process takes over Multi Level Queue Scheduling - Preemption takes place when a process of higher priority arrives Multi Level Queue Scheduling with Feedback - Preemption takes a place when process of higher priority arrives or when the quantum of high priority queue expires and we need to move the process to low priority queue So, B is the correct choice. Please comment below if you find anything wrong in the above post.

Consider a uniprocessor system executing three tasks T1, T2 and T3, each of which is composed of an infinite sequence of jobs (or instances) which arrive periodically at intervals of 3, 7 and 20 milliseconds, respectively. The priority of each task is the inverse of its period and the available tasks are scheduled in order of priority, with the highest priority task scheduled first. Each instance of T1, T2 and T3 requires an execution time of 1, 2 and 4 milliseconds, respectively. Given that all tasks initially arrive at the beginning of the 1st milliseconds and task preemptions are allowed, the first instance of T3 completes its execution at the end of ______________ milliseconds.- a)5

- b)10

- c)12

- d)15

Correct answer is option 'C'. Can you explain this answer?

Consider a uniprocessor system executing three tasks T1, T2 and T3, each of which is composed of an infinite sequence of jobs (or instances) which arrive periodically at intervals of 3, 7 and 20 milliseconds, respectively. The priority of each task is the inverse of its period and the available tasks are scheduled in order of priority, with the highest priority task scheduled first. Each instance of T1, T2 and T3 requires an execution time of 1, 2 and 4 milliseconds, respectively. Given that all tasks initially arrive at the beginning of the 1st milliseconds and task preemptions are allowed, the first instance of T3 completes its execution at the end of ______________ milliseconds.

a)

5

b)

10

c)

12

d)

15

|

|

Prasad Unni answered |

Periods of T1, T2 and T3 are 3ms, 7ms and 20ms

Since priority is inverse of period, T1 is the highest priority task, then T2 and finally T3

Every instance of T1 requires 1ms, that of T2 requires 2ms and that of T3 requires 4ms

Initially all T1, T2 and T3 are ready to get processor, T1 is preferred

Second instances of T1, T2, and T3 shall arrive at 3, 7, and 20 respectively.

Third instance of T1, T2 and T3 shall arrive at 6, 14, and 49 respectively.

Since priority is inverse of period, T1 is the highest priority task, then T2 and finally T3

Every instance of T1 requires 1ms, that of T2 requires 2ms and that of T3 requires 4ms

Initially all T1, T2 and T3 are ready to get processor, T1 is preferred

Second instances of T1, T2, and T3 shall arrive at 3, 7, and 20 respectively.

Third instance of T1, T2 and T3 shall arrive at 6, 14, and 49 respectively.

A uni-processor computer system only has two processes, both of which alternate 10ms CPU bursts with 90ms I/O bursts. Both the processes were created at nearly the same time. The I/O of both processes can proceed in parallel. Which of the following scheduling strategies will result in the least CPU utilization (over a long period of time) for this system ?- a)First come first served scheduling

- b)Shortest remaining time first scheduling

- c)Static priority scheduling with different priorities for the two processes

- d)Round robin scheduling with a time quantum of 5 ms

Correct answer is option 'D'. Can you explain this answer?

A uni-processor computer system only has two processes, both of which alternate 10ms CPU bursts with 90ms I/O bursts. Both the processes were created at nearly the same time. The I/O of both processes can proceed in parallel. Which of the following scheduling strategies will result in the least CPU utilization (over a long period of time) for this system ?

a)

First come first served scheduling

b)

Shortest remaining time first scheduling

c)

Static priority scheduling with different priorities for the two processes

d)

Round robin scheduling with a time quantum of 5 ms

|

|

Yash Patel answered |

When Round Robin scheduling is used We are given that the time slice is 5ms. Consider process P and Q. Say P utilizes 5ms of CPU and then Q utilizes 5ms of CPU. Hence after 15ms P starts with I/O And after 20ms Q also starts with I/O. Since I/O can be done in parallel, P finishes I\O at 105th ms (15 + 90) and Q finishes its I\O at 110th ms (20 + 90). Therefore we can see that CPU remains idle from 20th to 105th ms. That is when Round Robin scheduling is used, Idle time of CPU = 85ms CPU Utilization = 20/105 = 19.05% When First Come First Served scheduling scheduling or Shortest Remaining Time First is used Say P utilizes 10ms of CPU and then starts its I/O. At 11th ms Q starts processing. Q utilizes 10ms of CPU. P completes its I/O at 100ms (10 + 90) Q completes its I/O at 110ms (20 + 90) At 101th ms P again utilizes CPU. Hence, Idle time of CPU = 80ms CPU Utilization = 20/100 = 20% Since only two processes are involved and I\O time is much more than CPU time, "Static priority scheduling with different priorities" for the two processes reduces to FCFS or Shortest remaining time first. Therefore, Round robin will result in least CPU utilization.

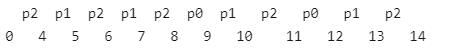

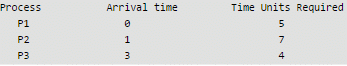

Consider the 3 processes, P1, P2 and P3 shown in the table.

The completion order of the 3 processes under the policies FCFS and RR2 (round robin scheduling with CPU quantum of 2 time units) are- a)FCFS: P1, P2, P3

RR2: P1, P2, P3 - b)FCFS: P1, P3, P2

RR2: P1, P3, P2 - c)FCFS: P1, P2, P3

RR2: P1, P3, P2 - d)FCFS: P1, P3, P2

RR2: P1, P2, P3

Correct answer is option 'C'. Can you explain this answer?

Consider the 3 processes, P1, P2 and P3 shown in the table.

The completion order of the 3 processes under the policies FCFS and RR2 (round robin scheduling with CPU quantum of 2 time units) are

a)

FCFS: P1, P2, P3

RR2: P1, P2, P3

RR2: P1, P2, P3

b)

FCFS: P1, P3, P2

RR2: P1, P3, P2

RR2: P1, P3, P2

c)

FCFS: P1, P2, P3

RR2: P1, P3, P2

RR2: P1, P3, P2

d)

FCFS: P1, P3, P2

RR2: P1, P2, P3

RR2: P1, P2, P3

|

Crack Gate answered |

FCFS Scheduling:

In FCFS, processes are executed in the order of their arrival times.

- P1 arrives at time 0 and requires 5 time units. It runs from time 0 to 5.

- P2 arrives at time 1 but must wait until P1 finishes. It runs from time 5 to 12 (5 + 7 = 12).

- P3 arrives at time 3 but must wait until P2 finishes. It runs from time 12 to 16 (12 + 4 = 16).

Completion order for FCFS: P1, P2, P3.

RR2 Scheduling:

In Round Robin with a quantum of 2, each process gets 2 time units before moving to the next process in the queue.

- Time 0: P1 arrives and starts execution.

- P1 runs for 2 units (time 0-2). Remaining time for P1: 5 - 2 = 3.

-

- Time 1: P2 arrives but P1 is already running.

- Time 2: P1 is preempted. Queue: P2, P1.

- P2 runs for 2 units (time 2-4). Remaining time for P2: 7 - 2 = 5.

-

- Time 3: P3 arrives during P2's execution.

- Time 4: P2 is preempted. Queue: P1, P3, P2.

- P1 runs for 2 units (time 4-6). Remaining time for P1: 3 - 2 = 1.

-

- Time 6: P1 is preempted. Queue: P3, P2, P1.

- P3 runs for 2 units (time 6-8). Remaining time for P3: 4 - 2 = 2.

-

- Time 8: P3 is preempted. Queue: P2, P1, P3.

- P2 runs for 2 units (time 8-10). Remaining time for P2: 5 - 2 = 3.

-

- Time 10: P2 is preempted. Queue: P1, P3, P2.

- P1 runs for 1 unit (time 10-11). P1 completes.

-

- Time 11: P1 is done. Queue: P3, P2.

- P3 runs for 2 units (time 11-13). P3 completes.

-

- Time 13: P3 is done. Queue: P2.

- P2 runs for 3 units (time 13-16). P2 completes.

-

Completion order for RR2: P1, P3, P2.

Final Answer:

The correct completion orders are:

- FCFS: P1, P2, P3

- RR2: P1, P3, P2

Option c)

An operating system uses shortest remaining time first scheduling algorithm for pre-emptive scheduling of processes. Consider the following set of processes with their arrival times and CPU burst times (in milliseconds):Process Arrival Time Burst Time

P1 0 12

P2 2 4

P3 3 6

P4 8 5 Q. The average waiting time (in milliseconds) of the processes is _________.- a)4.5

- b)5.0

- c)5.5

- d)6.5

Correct answer is option 'C'. Can you explain this answer?

An operating system uses shortest remaining time first scheduling algorithm for pre-emptive scheduling of processes. Consider the following set of processes with their arrival times and CPU burst times (in milliseconds):

Process Arrival Time Burst Time

P1 0 12

P2 2 4

P3 3 6

P4 8 5

P1 0 12

P2 2 4

P3 3 6

P4 8 5

Q. The average waiting time (in milliseconds) of the processes is _________.

a)

4.5

b)

5.0

c)

5.5

d)

6.5

|

|

Harshitha Banerjee answered |

Process Arrival Time Burst Time

P1 0 12

P2 2 4

P3 3 6

P4 8 5

P1 0 12

P2 2 4

P3 3 6

P4 8 5

Burst Time - The total time needed by a process from the CPU for its complete execution. Waiting Time - How much time processes spend in the ready queue waiting their turn to get on the CPU Now, The Gantt chart for the above processes is :

P1 - 0 to 2 milliseconds

P2 - 2 to 6 milliseconds

P3 - 6 to 12 milliseconds

P4 - 12 to 17 milliseconds

P1 - 17 to 27 milliseconds

P2 - 2 to 6 milliseconds

P3 - 6 to 12 milliseconds

P4 - 12 to 17 milliseconds

P1 - 17 to 27 milliseconds

Process p1 arrived at time 0, hence cpu started executing it. After 2 units of time P2 arrives and burst time of P2 was 4 units, and the remaining time of the process p1 was 10 units,hence cpu started executing P2, putting P1 in waiting state(Pre-emptive and Shortest remaining time first scheduling). Due to P1's highest remaining time it was executed by the cpu in the end.

Now calculating the waiting time of each process:

P1 -> 17 -2 = 15

P2 -> 0

P3 -> 6 - 3 = 3

P4 -> 12 - 8 = 4

P1 -> 17 -2 = 15

P2 -> 0

P3 -> 6 - 3 = 3

P4 -> 12 - 8 = 4

Hence total waiting time of all the processes is = 15+0+3+4=22

Total no of processes = 4

Average waiting time = 22 / 4 = 5.5

Hence C is the answer.

Total no of processes = 4

Average waiting time = 22 / 4 = 5.5

Hence C is the answer.

In a multiprogramming environment- a)The processor executes more than one process at a time

- b)The programs are developed by more than one person

- c)More than one process resides in the memory

- d)A single user can execute many programs at the same time

Correct answer is option 'C'. Can you explain this answer?

In a multiprogramming environment

a)

The processor executes more than one process at a time

b)

The programs are developed by more than one person

c)

More than one process resides in the memory

d)

A single user can execute many programs at the same time

|

|

Naina Sharma answered |

Multiprogramming environment means processor is executing multiple processes simultaneously by continuously switching between one-another. Therefore, multiple processes should reside in memory. However, processor can't executes more than one process at a time.

Consider the following CPU processes with arrival times (in milliseconds) and length of CPU bursts (in milliseconds) except for process P4 as given below:

Process Arrival Time Burst Time

P1 0 5

P2 1 1

P3 3 3

P4 4 x

If the average waiting time across all processes is 2 milliseconds and pre-emptive shortest remaining time first scheduling algorithm is used to schedule the processes, then find the value of x ?- a)1

- b)2

- c)4

- d)None of these

Correct answer is option 'B'. Can you explain this answer?

Consider the following CPU processes with arrival times (in milliseconds) and length of CPU bursts (in milliseconds) except for process P4 as given below:

Process Arrival Time Burst Time

P1 0 5

P2 1 1

P3 3 3

P4 4 x

If the average waiting time across all processes is 2 milliseconds and pre-emptive shortest remaining time first scheduling algorithm is used to schedule the processes, then find the value of x ?

Process Arrival Time Burst Time

P1 0 5

P2 1 1

P3 3 3

P4 4 x

If the average waiting time across all processes is 2 milliseconds and pre-emptive shortest remaining time first scheduling algorithm is used to schedule the processes, then find the value of x ?

a)

1

b)

2

c)

4

d)

None of these

|

|

Sanya Agarwal answered |

If we take value of x is 2, then we have gantt chart as

So, completion time of P1, P2, P3, and P4 are 6, 2, 11, and 8 respectively. Turn around time of P1, P2, P3, and P4 are 6, 1, 8, and 4 respectively. Waiting time of P1, P2, P3, and P4 are 1, 0, 5, and 2 resectively. Therefore, average waiting time = (1+0+5+2) / 4 = 8/2 = 2 Option (B) is correct.

Which of the following scheduling algorithms may cause starvation ? a. First-come-first-served b. Round Robin c. Priority d. Shortest process next e. Shortest remaining time first- a)a, c and e

- b)c, d and e

- c)b, d and e

- d)b, c and d

Correct answer is option 'B'. Can you explain this answer?

Which of the following scheduling algorithms may cause starvation ? a. First-come-first-served b. Round Robin c. Priority d. Shortest process next e. Shortest remaining time first

a)

a, c and e

b)

c, d and e

c)

b, d and e

d)

b, c and d

|

|

Sanya Agarwal answered |

- In First Come First Serve(FCFS) if a process with a very large Burst Time comes before other processes, the other process will have to wait for a long time but it is clear that other process will definitely get their chance to execute, so it will not suffer from starvation.

- In Round Robin there is a fixed time quant and every process will get their chance to be executed, so no starvation is here.

- In Priority based scheduling if higher priority process keep on coming then low priority process will suffer from starvation.

- In Shortest Job First(SJF) if process with short process time keep on coming continuously then process with higher burst time will do wait and suffer from starvation.

- In Shortest remaining time first(SRTF) process with shortest burst time will execute first because of this process with high burst time may suffer from starvation.

So, option (C) is correct.

Which of the following process scheduling algorithm may lead to starvation- a)FIFO

- b)Round Robin

- c)Shortest Job Next

- d)None of the above

Correct answer is option 'C'. Can you explain this answer?

Which of the following process scheduling algorithm may lead to starvation

a)

FIFO

b)

Round Robin

c)

Shortest Job Next

d)

None of the above

|

|

Sanya Agarwal answered |

Shortest job next may lead to process starvation for processes which will require a long time to complete if short processes are continually added.

Consider three CPU-intensive processes, which require 10, 20 and 30 time units and arrive at times 0, 2 and 6, respectively. How many context switches are needed if the operating system implements a shortest remaining time first scheduling algorithm? Do not count the context switches at time zero and at the end.- a)1

- b)2

- c)3

- d)4

Correct answer is option 'B'. Can you explain this answer?

Consider three CPU-intensive processes, which require 10, 20 and 30 time units and arrive at times 0, 2 and 6, respectively. How many context switches are needed if the operating system implements a shortest remaining time first scheduling algorithm? Do not count the context switches at time zero and at the end.

a)

1

b)

2

c)

3

d)

4

|

|

Sanya Agarwal answered |

Shortest remaining time, also known as shortest remaining time first (SRTF), is a scheduling method that is a pre-emptive version of shortest job next scheduling. In this scheduling algorithm, the process with the smallest amount of time remaining until completion is selected to execute. Since the currently executing process is the one with the shortest amount of time remaining by definition, and since that time should only reduce as execution progresses, processes will always run until they complete or a new process is added that requires a smaller amount of time. Solution: Let three process be P0, P1 and P2 with arrival times 0, 2 and 6 respectively and CPU burst times 10, 20 and 30 respectively. At time 0, P0 is the only available process so it runs. At time 2, P1 arrives, but P0 has the shortest remaining time, so it continues. At time 6, P2 also arrives, but P0 still has the shortest remaining time, so it continues. At time 10, P1 is scheduled as it is the shortest remaining time process. At time 30, P2 is scheduled. Only two context switches are needed. P0 to P1 and P1 to P2.

Which of the following statements is not true for Multi Level Feedback Queue processor scheduling algorithm?- a)Queues have different priorities

- b)Each queue may have different scheduling algorithm

- c)Processes are permanently assigned to a queue

- d)This algorithm can be configured to match a specific system under design

Correct answer is option 'C'. Can you explain this answer?

Which of the following statements is not true for Multi Level Feedback Queue processor scheduling algorithm?

a)

Queues have different priorities

b)

Each queue may have different scheduling algorithm

c)

Processes are permanently assigned to a queue

d)

This algorithm can be configured to match a specific system under design

|

|

Sanya Agarwal answered |

For Multi Level Feedback Queue processor scheduling algorithm:

- Queues have different priorities

- Each queue may have different scheduling algorithm

- Processes are not permanently assigned to a queue.

- This algorithm can be configured to match a specific system under design

When an interrupt occurs, an operating system- a)ignores the interrupt

- b)always changes state of interrupted process to 'blocked' and schedules another process

- c)always resumes execution of interrupted process after processing the interrupt

- d)may change the state of interrupted process to 'blocked'and schedule another process

Correct answer is option 'D'. Can you explain this answer?

When an interrupt occurs, an operating system

a)

ignores the interrupt

b)

always changes state of interrupted process to 'blocked' and schedules another process

c)

always resumes execution of interrupted process after processing the interrupt

d)

may change the state of interrupted process to 'blocked'and schedule another process

|

|

Naina Sharma answered |

Explanation:

When an interrupt occurs, the operating system needs to handle it appropriately to ensure the smooth functioning of the system. The correct answer is option 'D' because the operating system may change the state of the interrupted process to blocked and schedule another process. Let's understand this in detail:

Interrupts:

Interrupts are signals sent to the processor from either hardware devices or software exceptions. They are used to indicate events that require immediate attention from the operating system. When an interrupt occurs, the processor temporarily stops executing the current process and transfers control to a specific interrupt handler routine.

Handling Interrupts:

When an interrupt occurs, the operating system follows a specific sequence of steps to handle it:

1. Saving the state: The operating system saves the current state of the interrupted process, including the program counter, registers, and other relevant information. This allows the system to resume the interrupted process later.