All questions of Virtual Memory for Computer Science Engineering (CSE) Exam

Which of the following is a service not supported by the operating system?

- a)Protection

- b)Accounting

- c)Compilation

- d)I/O operation

Correct answer is option 'C'. Can you explain this answer?

Sanchita Chauhan answered:

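Compilation is performed by a compiler, which is a language-specific program, not by the operating system. Protection, accounting, and I/O operations are services that the operating system itself provides.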

Consider a virtual memory of 256 terabytes. The page size is 4K. This logical space is mapped into a physical memory of 256 megabytes. How many bits are there in the virtual address?

- a)28

- b)48

- c)38

- d)58

Correct answer is option 'B'. Can you explain this answer?

Yash Patel answered:

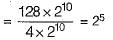

Virtual address space = 256 terabytes

= 2^8 x 2^40 bytes [1 terabyte = 2^40 bytes]

= 2^48 bytes

∴ Number of bits needed to address the virtual memory = 48 bits
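The same arithmetic can be checked in a few lines. This is a minimal sketch, assuming "4K" means 4 KB (4096 bytes) and binary units throughout; the helper name `address_bits` is hypothetical and not part of the original answer.

```python
def address_bits(space_bytes: int) -> int:
    """Bits needed to give each byte of a space its own address."""
    return (space_bytes - 1).bit_length()

# Binary units (assumption: 1 KB = 2^10 bytes, and so on).
KB, MB, TB = 2**10, 2**20, 2**40

virtual_bits = address_bits(256 * TB)    # virtual address width
physical_bits = address_bits(256 * MB)   # physical address width
offset_bits = address_bits(4 * KB)       # offset within a page

print(virtual_bits, physical_bits, offset_bits)   # 48 28 12
print(virtual_bits - offset_bits)                 # 36 bits of virtual page number
```

Only the first value is needed for this question; the others show how the remaining figures in the problem statement split the address.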

Consider a fully associative cache with 8 cache blocks (numbered 0-7) and the following sequence of memory block requests: 4, 3, 25, 8, 19, 6, 25, 8, 16, 35, 45, 22, 8, 3, 16, 25, 7. If LRU replacement policy is used, which cache block will have memory block 7?

- a)4

- b)5

- c)6

- d)7

Correct answer is option 'B'. Can you explain this answer?

Pranab Banerjee answered:

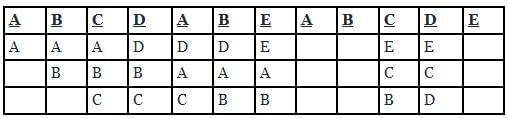

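Number of cache blocks = 8 (numbered 0-7). The request sequence is 4, 3, 25, 8, 19, 6, 25, 8, 16, 35, 45, 22, 8, 3, 16, 25, 7. The contents of cache blocks 0 to 7 evolve as follows:

- 4 3 25 8 19 6 16 35 // the first 8 distinct blocks fill the cache; the repeated 25 and 8 are hits

- 45 3 25 8 19 6 16 35 // 45 replaces 4, the least recently used block

- 45 22 25 8 19 6 16 35 // 22 replaces 3

- 45 22 25 8 19 6 16 35 // 8 is a hit

- 45 22 25 8 3 6 16 35 // 3 replaces 19; 16 and 25 are then hits

- 45 22 25 8 3 7 16 35 // 7 replaces 6, which sits in cache block 5

Therefore, the answer is B.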
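The trace above can be reproduced with a small simulation. This is a sketch under one assumption that the trace also makes: empty cache blocks are filled in increasing block-number order. The function name is hypothetical.

```python
def lru_fully_associative(requests, num_blocks):
    """Return the final cache contents, indexed by cache block number."""
    cache = [None] * num_blocks   # cache[i] = memory block held in cache block i
    last_used = {}                # memory block -> time of its most recent reference
    for t, block in enumerate(requests):
        if block not in cache:
            if None in cache:
                # Assumption: fill the lowest-numbered empty cache block first.
                slot = cache.index(None)
            else:
                # Evict the resident block whose last reference is oldest.
                victim = min(cache, key=lambda b: last_used[b])
                slot = cache.index(victim)
            cache[slot] = block
        last_used[block] = t      # hits and misses both refresh recency
    return cache

requests = [4, 3, 25, 8, 19, 6, 25, 8, 16, 35, 45, 22, 8, 3, 16, 25, 7]
final = lru_fully_associative(requests, 8)
print(final)            # [45, 22, 25, 8, 3, 7, 16, 35]
print(final.index(7))   # 5
```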

A system uses FIFO policy for page replacement. It has 4 page frames with no pages loaded to begin with. The system first accesses 100 distinct pages in some order and then accesses the same 100 pages but now in the reverse order. How many page faults will occur?

- a)196

- b)192

- c)197

- d)195

Correct answer is option 'A'. Can you explain this answer?

Keerthana Sarkar answered:

First-in, First-out (FIFO) Page Replacement Policy:

FIFO is a page replacement policy in which the page that is loaded first into the memory is the first one to be replaced when a page fault occurs. In this policy, a queue is used to keep track of the loaded pages, and the page at the front of the queue is the one to be replaced.

Given Information:

- Number of page frames available = 4

- The system first accesses 100 distinct pages in some order.

- The system then accesses the same 100 pages, but in reverse order.

Analysis:

1. Initially, all the page frames are empty (no pages loaded).

2. During the first pass, every one of the 100 accesses causes a page fault, because the pages are all distinct and none has been loaded before.

3. The first 4 faults fill the empty frames. Each later fault evicts the page at the front of the queue (the oldest loaded page).

4. After the first pass, the 4 frames hold the last 4 pages accessed.

5. During the reverse pass, a page fault occurs only when the accessed page is not already present in memory.

Calculating the Page Faults:

1. First pass: 100 distinct pages cause 100 page faults.

2. Reverse pass: the first 4 accesses are to the 4 pages still resident, so they are hits. A hit does not change the FIFO queue.

3. The remaining 96 accesses are to pages that were evicted during the first pass, so each causes a page fault. Pages loaded during the reverse pass are never accessed again, so no further hits occur.

4. Therefore, the total number of page faults is 100 (first pass) + 96 (reverse pass) = 196.

The correct answer is option 'A' (196).
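The count can be confirmed by simulating FIFO directly. This is a minimal sketch; the page numbers 1 to 100 are an arbitrary labeling chosen for illustration, since the question only requires the pages to be distinct.

```python
from collections import deque

def fifo_page_faults(references, num_frames):
    """Count page faults under FIFO replacement, starting with empty frames."""
    queue = deque()      # front of the queue = oldest loaded page
    resident = set()     # pages currently in memory, for fast lookup
    faults = 0
    for page in references:
        if page in resident:
            continue     # hit: FIFO order is not affected by hits
        faults += 1
        if len(queue) == num_frames:
            resident.discard(queue.popleft())   # evict the oldest page
        queue.append(page)
        resident.add(page)
    return faults

first_pass = list(range(1, 101))            # hypothetical page labels
references = first_pass + first_pass[::-1]  # same pages, reverse order
print(fifo_page_faults(references, 4))      # 196
```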

Removing suspended processes from memory to disk and subsequently returning them is called

- a)Swapping

- b)Segmentation

- c)I/O operation

- d)Replacement

Correct answer is option 'A'. Can you explain this answer?

Riverdale Learning Institute answered:

Swapping

Swapping is a memory management scheme in which a process is temporarily moved out of main memory to secondary storage (disk), making that memory available to other processes. At some later time, the system swaps the process back from secondary storage into main memory.

A process refers to 5 pages, A, B, C, D, E in the order:

A, B, C, D, A, B, E, A, B, C, D, E

If the page replacement algorithm is FIFO, the number of page transfers with an empty internal store of 3 frames is:

- a)8

- b)10

- c)9

- d)7

Correct answer is option 'C'. Can you explain this answer?

Luminary Institute answered:

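Tracing FIFO with 3 frames: references 1 to 7 (A, B, C, D, A, B, E) and references 10 and 11 (C, D) each cause a page transfer, while references 8, 9, and 12 (A, B, E) are hits. Pages are therefore transferred into frames 9 times.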

The crew performed experiments such as pollinating plants and making faster computer chips. The price tag for this was

- a)51 million dollars

- b)52 million dollars

- c)54 million dollars

- d)56 million dollars

Correct answer is option 'D'. Can you explain this answer?

Gayatri Chavan answered:

Kalpana Chawla’s first mission was on the space shuttle Columbia. It lasted 15 days, 16 hours and 34 minutes. During this time, she went around the earth 252 times, traveling 1.45 million km. The crew performed experiments such as pollinating plants to observe food growth in space. It also ran tests for making stronger metals and faster computer chips. It was all done for a price tag of 56 million dollars.

_____ contains the swap space.

- a)RAM

- b)Disk

- c)ROM

- d)On-chip cache

Correct answer is option 'B'. Can you explain this answer?

Nitin Datta answered:

Understanding Swap Space

Swap space is a crucial component in computer systems that helps manage memory resources effectively. It is an area on the disk that the operating system uses as an extension of RAM (Random Access Memory). Here’s a detailed breakdown of why the correct answer is option 'B' (Disk):

What is Swap Space?

- Swap space is a designated area on a storage device (usually a hard disk or SSD) that allows the operating system to store data that cannot be held in RAM.

- When RAM is full, inactive pages of memory are moved to this disk space, freeing up RAM for active processes.

Role of Disk in Swap Space

- Storage Capacity: Disks provide a larger storage capacity compared to RAM, making them ideal for swap space.

- Persistence: Disks are non-volatile, but this is not why they hold swap space. Swapped-out pages are only meaningful while the owning processes are running, and they are not reused after a normal restart.

Comparison with Other Options

- RAM: RAM holds the active data of running processes. Swap space exists to relieve pressure on RAM, so conventional swap space is not placed in RAM itself.

- ROM: Read-Only Memory is primarily for permanent data storage. It cannot be written during normal operation, so it cannot function as swap space.

- On-chip Cache: This provides fast access to frequently used data, but it is not used for swap purposes.

Conclusion

In summary, swap space resides on the disk, providing essential support when RAM is insufficient. This mechanism allows systems to operate efficiently by utilizing available disk space as a temporary memory extension. Thus, the correct answer to the question is option 'B' (Disk).

Recall that Belady’s anomaly is that the page-fault rate may increase as the number of allocated frames increases. Now, consider the following statements:

S1: Random page replacement algorithm (where a page chosen at random is replaced) suffers from Belady’s anomaly

S2: LRU page replacement algorithm suffers from Belady’s anomaly

Which of the following is CORRECT?

- a)S1 is true, S2 is true

- b)S1 is true, S2 is false

- c)S1 is false, S2 is true

- d)S1 is false, S2 is false

Correct answer is option 'B'. Can you explain this answer?

Sudhir Patel answered:

S1: Random page replacement algorithm (where a page chosen at random is replaced) suffers from Belady’s anomaly.

True. Because the victim is chosen at random, a random page replacement algorithm can make the same choices as any other replacement algorithm (FIFO, LRU, MRU, etc.). When its choices coincide with those of FIFO, Belady’s anomaly can occur.

For example, consider FIFO with the reference string 3 2 1 0 3 2 4 3 2 1 0 4. With 3 frames we get 9 page faults, but with 4 frames we get 10 page faults.

So the number of page faults increases when the number of frames increases, which is Belady’s anomaly.
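The FIFO figures quoted for this reference string can be checked with a short simulation. This is an illustrative sketch with a hypothetical helper name; it demonstrates the anomaly for FIFO only, which is the case a random policy may happen to imitate.

```python
from collections import deque

def fifo_faults(references, num_frames):
    """Count FIFO page faults, starting with empty frames."""
    queue = deque()               # oldest loaded page at the left
    faults = 0
    for page in references:
        if page not in queue:
            faults += 1
            if len(queue) == num_frames:
                queue.popleft()   # evict the page that was loaded first
            queue.append(page)
    return faults

reference_string = [3, 2, 1, 0, 3, 2, 4, 3, 2, 1, 0, 4]
for n in (3, 4):
    print(n, fifo_faults(reference_string, n))
# 3 9
# 4 10
```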

S2: LRU page replacement algorithm suffers from Belady’s anomaly

False. LRU is a stack algorithm, that is, one that satisfies the inclusion property: the set of pages held in n frames is always a subset of the set that would be held in n + 1 frames. Stack algorithms do not suffer from Belady’s anomaly.

Consider a main memory with five page frames and the following sequence of page references: 3, 8, 2, 3, 9, 1, 6, 3, 8, 9, 3, 6, 2, 1, 3. Which one of the following is true with respect to page replacement policies First In First Out (FIFO) and Least Recently Used (LRU)?

- a)Both incur the same number of page faults

- b)FIFO incurs 2 more page faults than LRU

- c)LRU incurs 2 more page faults than FIFO

- d)FIFO incurs 1 more page faults than LRU

Correct answer is option 'A'. Can you explain this answer?

Sudhir Patel answered:

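LRU: 9 page faults (on the references 3, 8, 2, 9, 1, 6, 8, 2, 1 that miss).

FIFO: 9 page faults (on the references 3, 8, 2, 9, 1, 6, 3, 8, 2 that miss).

Hence LRU and FIFO both incur 9 page faults.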
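Both counts can be verified side by side. The following is a minimal sketch with hypothetical function names; it uses `OrderedDict` to keep pages in recency order for LRU and a `deque` for FIFO.

```python
from collections import OrderedDict, deque

def fifo_faults(references, num_frames):
    queue, faults = deque(), 0
    for page in references:
        if page not in queue:
            faults += 1
            if len(queue) == num_frames:
                queue.popleft()              # evict the oldest loaded page
            queue.append(page)
    return faults

def lru_faults(references, num_frames):
    frames, faults = OrderedDict(), 0        # least recently used page first
    for page in references:
        if page in frames:
            frames.move_to_end(page)         # hit: mark as most recently used
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.popitem(last=False)   # evict the least recently used page
            frames[page] = True
    return faults

references = [3, 8, 2, 3, 9, 1, 6, 3, 8, 9, 3, 6, 2, 1, 3]
print(fifo_faults(references, 5), lru_faults(references, 5))   # 9 9
```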

In process swapping in operating system, what is the residing location of swap space?

- a)RAM

- b)Disk

- c)ROM

- d)On-chip cache

Correct answer is option 'B'. Can you explain this answer?

Anmol Basu answered:

Swap Space in Operating System

In an operating system, swap space is a designated area on a disk that is used to temporarily store data that cannot fit in the computer's physical memory (RAM). When the RAM becomes full, the operating system moves inactive pages of memory to the swap space, freeing up valuable RAM for other processes.

Residing Location of Swap Space

The residing location of swap space is on a disk. This means that the swap space is allocated on the hard disk or solid-state drive (SSD) connected to the computer.

Reasons for Storing Swap Space on Disk

There are several reasons why swap space is stored on a disk:

1. Capacity: Disks have much larger storage capacity compared to RAM. This allows the operating system to allocate a significant amount of swap space to handle memory requirements of various processes.

2. Non-volatility: Disk contents do not depend on power, but this is not what swap relies on. Swapped-out pages only need to survive until the owning process needs them again; they are not reused after a normal system restart.

3. Flexibility: Disk storage allows for flexibility in managing swap space. The operating system can dynamically allocate or deallocate disk space for swap as per the memory demands of running processes.

4. Efficiency: Disk storage is much slower than RAM, so accessing swapped-out pages incurs a performance penalty. This is still preferable to running out of physical memory, which would force the system to terminate processes or fail.

5. Virtual Memory Management: Storing swap space on disk is an essential component of virtual memory management in modern operating systems. It enables the operating system to transparently manage memory allocation and paging, ensuring that processes can utilize more memory than physically available.

Conclusion

In summary, the residing location of swap space in the process swapping mechanism of an operating system is on a disk. This disk-based storage provides the capacity and flexibility needed to handle memory demands beyond physical RAM.

Moving process from main memory to disk is called

- a)scheduling

- b)caching

- c)swapping

- d)spooling

Correct answer is option 'C'. Can you explain this answer?

Riverdale Learning Institute answered:

- Memory management is the functionality of an operating system which handles or manages primary memory and moves processes back and forth between main memory and disk during execution.

- Swapping is a mechanism in which a process is temporarily moved out of main memory to secondary storage (disk), making that memory available to other processes.

Consider a 2-way set associative cache memory with 4 sets and total 8 cache blocks (0-7) and a main memory with 128 blocks (0-127). What memory blocks will be present in the cache after the following sequence of memory block references if LRU policy is used for cache block replacement, assuming that initially the cache did not have any memory block from the current job?

0 5 3 9 7 0 16 55

- a)0 3 5 7 16 55

- b)0 3 5 7 9 16 55

- c)0 5 7 9 16 55

- d)3 5 7 9 16 55

Correct answer is option 'C'. Can you explain this answer?

Atharva Das answered:

2-way set associative cache memory, i.e. K = 2.

Number of sets is given as 4, i.e. S = 4 (numbered 0-3).

Number of blocks in cache memory is given as 8, i.e. N = 8 (numbered 0-7).

Each set in cache memory contains 2 blocks.

The number of blocks in the main memory is 128, i.e. M = 128 (numbered 0-127).

A referred block numbered X of the main memory is placed in the set numbered (X mod S) of the cache memory. In that set, the block can be placed at any location, but if the set is already full, the currently referred block of the main memory replaces a block in that set according to some replacement policy. Here the replacement policy is LRU (i.e. the least recently used block is replaced with the currently referred block).

X (referred block number) and the corresponding set values are as follows:

X --> set number (X mod 4)

0 --> 0 (block 0 is placed in set 0; set 0 has 2 empty block locations, and block 0 is placed in either of them)

5 --> 1 (block 5 is placed in set 1; set 1 has 2 empty block locations, and block 5 is placed in either of them)

3 --> 3 (block 3 is placed in set 3; set 3 has 2 empty block locations, and block 3 is placed in either of them)

9 --> 1 (block 9 is placed in set 1; set 1 has 1 empty block location, and block 9 is placed in it; set 1 is now full, and block 5 is the least recently used block)

7 --> 3 (block 7 is placed in set 3; set 3 has 1 empty block location, and block 7 is placed in it; set 3 is now full, and block 3 is the least recently used block)

0 --> 0 (block 0 is referred again and is already present in set 0, so it is not loaded again)

16 --> 0 (block 16 is placed in set 0; set 0 has 1 empty block location, and block 16 is placed in it; set 0 is now full, and block 0 is the least recently used block)

55 --> 3 (block 55 maps to set 3, but set 3 is full with blocks 3 and 7; block 3 is the least recently used block in set 3, so it is replaced with block 55)

Hence the main memory blocks present in the cache memory are: 0, 5, 7, 9, 16, 55.

(Note: block 3 is not present in the cache memory; it was replaced with block 55.)
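The mapping and replacement steps above can be sketched as a short simulation. The function name and the list-per-set representation are illustrative choices, not part of the original answer; each set is kept ordered from least to most recently used.

```python
def set_associative_lru(references, num_sets, ways):
    """Return the per-set contents after the references, each ordered LRU -> MRU."""
    sets = [[] for _ in range(num_sets)]
    for block in references:
        lines = sets[block % num_sets]   # set index = X mod S
        if block in lines:
            lines.remove(block)          # hit: re-appended below as most recent
        elif len(lines) == ways:
            lines.pop(0)                 # miss in a full set: evict the LRU block
        lines.append(block)
    return sets

sets = set_associative_lru([0, 5, 3, 9, 7, 0, 16, 55], num_sets=4, ways=2)
print(sets)                                        # [[0, 16], [5, 9], [], [7, 55]]
print(sorted(b for lines in sets for b in lines))  # [0, 5, 7, 9, 16, 55]
```

Set 2 stays empty because none of the referenced blocks is congruent to 2 modulo 4.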

A processor uses 2-level page tables for virtual to physical address translation. Page tables for both levels are stored in the main memory. Virtual and physical addresses are both 32 bits wide. The memory is byte addressable. For virtual to physical address translation, the 10 most significant bits of the virtual address are used as index into the first level page table while the next 10 bits are used as index into the second level page table. The 12 least significant bits of the virtual address are used as offset within the page. Assume that the page table entries in both levels of page tables are 4 bytes wide. Further, the processor has a translation look-aside buffer (TLB), with a hit rate of 96%. The TLB caches recently used virtual page numbers and the corresponding physical page numbers. The processor also has a physically addressed cache with a hit rate of 90%. Main memory access time is 10 ns, cache access time is 1 ns, and TLB access time is also 1 ns. Suppose a process has only the following pages in its virtual address space: two contiguous code pages starting at virtual address 0x00000000, two contiguous data pages starting at virtual address 0x00400000, and a stack page starting at virtual address 0xFFFFF000. The amount of memory required for storing the page tables of this process is:

- a)8 KB

- b)12 KB

- c)16 KB

- d)20 KB

Correct answer is option 'C'. Can you explain this answer?

Hrishikesh Unni answered:

Break each 32-bit virtual address into 10 bits (first-level index) | 10 bits (second-level index) | 12 bits (offset):

first code page:

0x00000000 = 0000 0000 00 | 00 0000 0000 | 0000 0000 0000

so the next code page starts at 0x00001000 = 0000 0000 00 | 00 0000 0001 | 0000 0000 0000

first data page:

0x00400000 = 0000 0000 01 | 00 0000 0000 | 0000 0000 0000

so the next data page starts at 0x00401000 = 0000 0000 01 | 00 0000 0001 | 0000 0000 0000

only one stack page:

0xFFFFF000 = 1111 1111 11 | 11 1111 1111 | 0000 0000 0000

The first-level page table occupies 1 page and has 3 distinct entries in use: 0000 0000 00, 0000 0000 01, and 1111 1111 11. Each of these entries points to its own second-level page table, which also occupies 1 page, giving 3 second-level page tables.

Each page table has 2^10 entries of 4 bytes, so it occupies 2^12 bytes = 4 KB. Hence there are 4 page tables in total, each of size 4 KB.

Therefore, memory required to store the page tables = 4 * 4 KB = 16 KB.
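The count of page tables follows mechanically from the top 10 bits of each page address. The sketch below assumes, as the answer does, that every page table (first or second level) is allocated in full; the constant and function names are hypothetical.

```python
PTE_BYTES = 4      # size of one page table entry
INDEX_BITS = 10    # index width at each level
OFFSET_BITS = 12   # offset within a page

def page_table_bytes(page_addresses):
    """Memory for one first-level table plus one second-level table per used entry."""
    table_bytes = (1 << INDEX_BITS) * PTE_BYTES   # 1024 entries * 4 bytes = 4 KB
    first_level_indices = {addr >> (INDEX_BITS + OFFSET_BITS) for addr in page_addresses}
    return (1 + len(first_level_indices)) * table_bytes

pages = [0x00000000, 0x00001000, 0x00400000, 0x00401000, 0xFFFFF000]
print(page_table_bytes(pages) // 1024)   # 16 (KB)
```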

Consider a system with byte-addressable memory, 32 bit logical addresses, 4 kilobyte page size and page table entries of 4 bytes each. The size of the page table in the system in megabytes is ___________

- a)2

- b)4

- c)8

- d)16

Correct answer is option 'B'. Can you explain this answer?

Hrishikesh Saini answered:

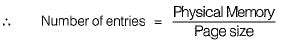

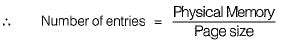

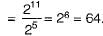

Number of entries in page table = 2^32 / 4 KB

= 2^32 / 2^12

= 2^20

Size of page table = (number of page table entries) * (size of an entry)

= 2^20 * 4 bytes

= 2^22 bytes = 4 megabytes
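As a quick check of the arithmetic, the calculation can be written as a small function. This is a sketch assuming a single-level page table with one entry per virtual page; the function name is hypothetical.

```python
def single_level_page_table_bytes(address_bits, page_bytes, pte_bytes):
    """Size of a flat page table with one entry per virtual page."""
    num_pages = (1 << address_bits) // page_bytes
    return num_pages * pte_bytes

size = single_level_page_table_bytes(address_bits=32, page_bytes=4 * 1024, pte_bytes=4)
print(size // 2**20)   # 4 (megabytes)
```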

The address sequence generated by tracing a particular program executing in a pure demand paging system with 100 bytes per page is

0100, 0200, 0430, 0499, 0510, 0530, 0560, 0120, 0220, 0240, 0260, 0320, 0410.

Suppose that the memory can store only one page and if x is the address which causes a page fault then the bytes from addresses x to x + 99 are loaded on to the memory.

Q. How many page faults will occur?

- a)0

- b)4

- c)7

- d)8

Correct answer is option 'C'. Can you explain this answer?

The address sequence generated by tracing a particular program executing in a pure demand paging system with 100 bytes per page is

0100, 0200, 0430, 0499, 0510, 0530, 0560, 0120, 0220, 0240, 0260, 0320, 0410.

Suppose that the memory can store only one page and if x is the address which causes a page fault then the bytes from addresses x to x + 99 are loaded on to the memory.

0100, 0200, 0430, 0499, 0510, 0530, 0560, 0120, 0220, 0240, 0260, 0320, 0410.

Suppose that the memory can store only one page and if x is the address which causes a page fault then the bytes from addresses x to x + 99 are loaded on to the memory.

Q. How many page faults will occur ?

a)

0

b)

4

c)

7

d)

8

|

|

Shubham Ghoshal answered |
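The count can be checked by replaying the address trace. The sketch below assumes, as the question states, that memory holds a single page and that a fault at address x loads bytes x to x + 99; the name `count_page_faults` is illustrative.

```python
def count_page_faults(addresses, window=100):
    """Replay a trace when memory holds one window of `window` bytes at a time."""
    faults = 0
    start = None  # first address of the window currently in memory (None = empty)
    for x in addresses:
        if start is None or not (start <= x < start + window):
            faults += 1
            start = x  # bytes x .. x + window - 1 are now loaded
    return faults


trace = [100, 200, 430, 499, 510, 530, 560, 120, 220, 240, 260, 320, 410]
print(count_page_faults(trace))  # prints 7
```

The faulting addresses are 0100, 0200, 0430, 0530, 0120, 0220 and 0320, which gives 7 page faults.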

The optimal page replacement algorithm will select the page that

- a)Has not been used for the longest time in the past.

- b)Will not be used for the longest time in the future.

- c)Has been used least number of times.

- d)Has been used most number of times.

Correct answer is option 'B'. Can you explain this answer?

Sandeep Majumdar answered

The optimal page replacement algorithm selects the page whose next use lies farthest in the future. For example, if a page must be replaced and there are two candidates, one that will next be used after 10 s and another after 5 s, the algorithm replaces the page that is needed 10 s later. Thus, B is the correct choice.

Consider a system using paging and segmentation. The virtual address space consists of up to 8 segments and each segment is 2^29 bytes long. The hardware pages each segment into 2^8 byte pages.

How many bits in the virtual address specify the offset within page?

- a)8 bits

- b)16 bits

- c)32 bits

- d)64 bits

Correct answer is option 'A'. Can you explain this answer?

Aditya Nair answered

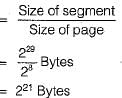

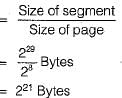

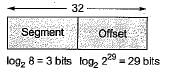

Virtual address space = 8 x 2^29 bytes

= 2^32 bytes

No. of pages within each segment = 2^29 / 2^8 = 2^21

Offset within the page = 8 bits (for 2^8 bytes)
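The field widths of such a segmented, paged virtual address can be derived mechanically. The following sketch assumes every size is a power of two; `address_bits` is an illustrative helper, not something from the original answer.

```python
def address_bits(num_segments, segment_size_bytes, page_size_bytes):
    """Split a segmented, paged virtual address into its bit fields.

    Assumes all three arguments are powers of two.
    """
    segment_bits = (num_segments - 1).bit_length()
    within_segment_bits = (segment_size_bytes - 1).bit_length()
    offset_bits = (page_size_bytes - 1).bit_length()
    return {
        "segment": segment_bits,
        "page": within_segment_bits - offset_bits,
        "offset": offset_bits,
        "total": segment_bits + within_segment_bits,
    }


print(address_bits(8, 2**29, 2**8))
# prints {'segment': 3, 'page': 21, 'offset': 8, 'total': 32}
```

The `offset` field gives the 8 bits asked for here, and `total` gives the 32 bits of the whole virtual address.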

If page fault service time is 50 milliseconds and memory access time is 100 nanoseconds, then what will be the effective access time, if the probability of page fault is p?

- a)(500000 + 100 p) nanoseconds

- b)(100 + 500000 x p) nanoseconds

- c)10^-7 - 10^-7 p (500000) seconds

- d)10^-7 + 49.9 x 10^-3 p seconds

Correct answer is option 'D'. Can you explain this answer?

Samarth Kapoor answered

Effective access time = [(1 - p) x 100 + p x 50 x 10^6] ns

Since 1 ns = 10^-9 sec,

=> 10^-9 x [(1 - p) x 100 + p x 50 x 10^6] sec

=> [(1 - p) x 10^-7 + p x 50 x 10^-3] sec

=> 10^-7 - 10^-7 p + p x 50 x 10^-3

=> 10^-7 + 10^-3 x p x [50 - 10^-4]

≈ [10^-7 + p x 10^-3 x 49.9] sec
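The weighted-average formula used in this derivation is easy to evaluate numerically. The sketch below is only an illustration; the function name and its default values (100 ns and 50 ms, taken from the question) are assumptions of this example.

```python
def effective_access_time_s(p, memory_access_s=100e-9, fault_service_s=50e-3):
    """Effective access time in seconds for page-fault probability p."""
    # With probability (1 - p) the access costs one memory access,
    # with probability p it costs the page fault service time.
    return (1 - p) * memory_access_s + p * fault_service_s


for p in (0.0, 0.001, 0.01):
    print(p, effective_access_time_s(p))
```

Even a fault probability of 0.001 raises the effective access time from 100 ns to roughly 50 microseconds, because the page fault term dominates.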

Memory protection is of no use in a

- a)Single user system

- b)Non-multiprogramming system

- c)Non-multitasking system

- d)None of the above

Correct answer is option 'D'. Can you explain this answer?

Snehal Desai answered

Introduction:

Memory protection is a mechanism that prevents unauthorized access to, or modification of, memory locations. It ensures that a running program can access only the memory assigned to it. Besides isolating processes from one another, it also protects the operating system's own code and data from the program that is running.

Explanation:

Single user system:

A single user can still run a buggy or malicious program. Without memory protection, a stray write from that program could corrupt the operating system, so protection remains useful.

Non-multiprogramming system:

Only one program is resident at a time, but it shares memory with the operating system. Protection is still needed to keep the program from overwriting that region.

Non-multitasking system:

As in the non-multiprogramming case, the single running task coexists with the operating system in memory, so memory protection still guards the system area against errant accesses.

Conclusion:

Memory protection is useful in all three kinds of systems, because in each of them the operating system must be protected from the running program. None of options (a) to (c) describes a system in which it is of no use, hence the answer is (d) None of the above.

Consider a system using paging and segmentation. The virtual address space consists of up to 8 segments and each segment is 2^29 bytes long. The hardware pages each segment into 2^8 byte pages.

How many bits in the virtual address specify the entire virtual address?

- a)8 bits

- b)16 bits

- c)32 bits

- d)64 bits

Correct answer is option 'C'. Can you explain this answer?

Janani Joshi answered

In a paged memory, the page hit ratio is 0.35. The time required to access a page in secondary memory is equal to 100 ns. The time required to access a page in primary memory is 10 ns. The average time required to access a page is

- a)3.0 ns

- b)68.0 ns

- c)68.5 ns

- d)78.5 ns

Correct answer is option 'C'. Can you explain this answer?

Ishaan Saini answered

Hit ratio (h) = 0.35

Time (secondary memory), Ts = 100 ns

Time (main memory), Tm = 10 ns

Average access time = h(Tm) + (1 - h)(Ts)

= 0.35 x 10 + (0.65) x 100

= 3.5 + 65

= 68.5 ns

Hence option (C) is correct

For 64 bit virtual addresses, a 4 KB page size and 256 MB of RAM, an inverted page table requires

- a)2^40 entries

- b)2^52 entries

- c)2^16 entries

- d)2^78 entries

Correct answer is option 'C'. Can you explain this answer?

Mansi Shah answered

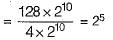

Virtual address space = 2^64 bytes

Page size = 4 KB

= 2^12 Bytes

Physical Memory = 256 MB

= 2^28 Bytes

Number of entries in inverted page table = Number of frames in physical memory

= 2^28 / 2^12 = 2^16
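Because an inverted page table has one entry per physical frame, its size does not depend on the width of the virtual address. A minimal sketch of that count, with the illustrative name `inverted_page_table_entries`:

```python
def inverted_page_table_entries(physical_memory_bytes, page_size_bytes):
    """An inverted page table holds one entry per physical frame."""
    return physical_memory_bytes // page_size_bytes


entries = inverted_page_table_entries(256 * 2**20, 4 * 2**10)
print(entries, entries == 2**16)  # prints "65536 True"
```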

Page fault occurs when

- a)The page is corrupted by application software

- b)The page is in main memory

- c)The page is not in main memory

- d)One tries to divide a number by 0

Correct answer is option 'C'. Can you explain this answer?

Anand Chatterjee answered

A page fault occurs when the required page is not found in main memory. When the page is found in main memory it is called a page hit; otherwise it is a miss.

Fragmentation is

- a)Dividing the secondary memory into equal sized fragments

- b)Dividing the main memory into equal-size fragments

- c)Fragments of memory words used in a page

- d)Fragments of memory words unused in a page

Correct answer is option 'B'. Can you explain this answer?

Mira Rane answered

Fragmentation is basically dividing main memory into fragments (smaller parts), usually of the same size. It is of two types:

(i) External fragmentation

(ii) Internal fragmentation.

In partitioned memory allocation scheme, the

- a)Best fit algorithm is always better than the first fit algorithm.

- b)First fit algorithm is always better than the best fit algorithm.

- c)Superiority of the first fit and best-fit algorithms depend on the sequence of memory requests.

- d)None of these

Correct answer is option 'C'. Can you explain this answer?

Mahi Yadav answered

The superiority of the first fit and best-fit algorithms depends on the sequence of memory requests and not on the algorithm itself.

Which of the following is NOT an advantage of using shared, dynamically linked libraries as opposed to using statically linked libraries?

- a)Smaller sizes of executable files

- b)Lesser overall page fault rate in the system

- c)Faster program startup

- d)Existing programs need not be re-linked to take advantage of newer versions of libraries

Correct answer is option 'C'. Can you explain this answer?

Sagar Saha answered

Refer to Static and Dynamic Libraries. In non-shared (static) libraries, the library code is linked into the executable at build time, so the final executable has no dependency on the library at run time and incurs no additional run-time loading cost. You do not need to carry along a separate copy of the library, and everything is under your control. A dynamically linked program, in contrast, must locate and load its shared libraries when it starts, so faster program startup is not an advantage of dynamic linking.

Kalpana had encyclopaedic knowledge means:

- a)she knew everything about aeronauticals

- b)she had mastery over biology, astrophysics and engineering

- c)having knowledge of a wide variety of subjects

- d)she knew the whole Britannica Encyclopaedia

Correct answer is option 'C'. Can you explain this answer?

Sarita Singh answered

Having encyclopaedic knowledge means having knowledge of a wide variety of subjects.

A computer system implements 8 kilobyte pages and a 32-bit physical address space. Each page table entry contains a valid bit, a dirty bit, three permission bits, and the translation. If the maximum size of the page table of a process is 24 megabytes, the length of the virtual address supported by the system is _______________ bits

- a)36

- b)32

- c)28

- d)40

Correct answer is option 'A'. Can you explain this answer?

Tanvi Datta answered

The maximum size of the virtual address can be found from the maximum number of page table entries.

The maximum number of page table entries can be calculated from the given maximum page table size and the size of a page table entry.

Given maximum page table size = 24 MB

Let us calculate the size of a page table entry.

A page table entry has the following number of bits:

1 (valid bit) +

1 (dirty bit) +

3 (permission bits) +

x bits to store the physical frame number of a page.

Value of x = (Total bits in physical address) - (Total bits for addressing within a page)

Since the size of a page is 8 kilobytes, the total bits needed within a page is 13.

So value of x = 32 - 13 = 19

Putting in the value of x, we get size of a page table entry = 1 + 1 + 3 + 19 = 24 bits = 3 bytes.

Number of page table entries

= (Page Table Size) / (An entry size)

= (24 megabytes / 3 bytes)

= 2^23

Virtual address space size

= (Number of page table entries) * (Page Size)

= 2^23 * 8 kilobytes

= 2^36 bytes

Therefore, length of virtual address = 36 bits
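The chain of steps (entry size, number of entries, address length) can be written as one small function. This is a sketch under the assumption that all sizes are powers of two and that entries are packed with no padding; `virtual_address_bits` and its parameter names are illustrative.

```python
def virtual_address_bits(page_size_bytes, physical_address_bits,
                         flag_bits, max_page_table_bytes):
    """Virtual address length implied by a maximum single-level page table size."""
    offset_bits = (page_size_bytes - 1).bit_length()   # bits addressing within a page
    frame_bits = physical_address_bits - offset_bits   # bits of the frame number
    entry_bits = flag_bits + frame_bits                # valid + dirty + permissions + frame
    num_entries = (max_page_table_bytes * 8) // entry_bits
    page_number_bits = (num_entries - 1).bit_length()
    return page_number_bits + offset_bits


print(virtual_address_bits(8 * 1024, 32, 5, 24 * 2**20))  # prints 36
```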

A disk has 200 tracks (numbered 0 through 199). At a given time, it was servicing the request of reading data from track 120, and at the previous request, service was for track 90. The pending requests (in order of their arrival) are for track numbers 30 70 115 130 110 80 20 25. How many times will the head change its direction for the disk scheduling policies SSTF (Shortest Seek Time First) and FCFS (First Come First Serve)?

- a)2 and 3

- b)3 and 3

- c)3 and 4

- d)4 and 4

Correct answer is option 'C'. Can you explain this answer?

Saptarshi Saha answered

Explanation:

To determine the number of times the head changes its direction under SSTF and FCFS, we trace the order in which the pending requests are serviced. The previous request was for track 90 and the current one is for track 120, so the head is initially moving towards higher track numbers.

SSTF (Shortest Seek Time First):

1. Start at track 120, moving upward.

2. The nearest pending request is track 115 (5 tracks away). The head moves down: direction change 1.

3. The nearest pending request is track 110 (5 tracks away). The head keeps moving down.

4. The nearest pending request is track 130 (20 tracks away, against 30 for track 80). The head moves up: direction change 2.

5. The nearest pending request is track 80 (50 tracks away). The head moves down: direction change 3.

6. The remaining requests 70, 30, 25 and 20 are serviced in that order, all while moving down.

FCFS (First Come First Serve):

1. Start at track 120, moving upward.

2. Move to track 30. The head moves down: direction change 1.

3. Move to track 70. The head moves up: direction change 2.

4. Move to track 115, then to track 130, still moving up.

5. Move to track 110. The head moves down: direction change 3.

6. Move to track 80, then to track 20, still moving down.

7. Move to track 25. The head moves up: direction change 4.

The head changes its direction whenever it switches from moving towards higher track numbers to moving towards lower track numbers, or vice versa.

Number of times the head changes its direction for SSTF: 3

Number of times the head changes its direction for FCFS: 4

Therefore, the correct answer is option 'C': 3 times for SSTF and 4 times for FCFS.
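The two service orders can be replayed in code to count the reversals. This is a sketch, assuming the initial direction is taken from the move between the previous track (90) and the current track (120); the helper names `sstf_order` and `count_direction_changes` are illustrative.

```python
def sstf_order(requests, start):
    """Order in which SSTF services the requests (nearest pending track first)."""
    pending = list(requests)
    position = start
    order = []
    while pending:
        nearest = min(pending, key=lambda t: abs(t - position))
        pending.remove(nearest)
        order.append(nearest)
        position = nearest
    return order


def count_direction_changes(order, start, previous):
    """Count head reversals; the initial direction comes from previous -> start."""
    direction = 1 if start > previous else -1
    changes = 0
    position = start
    for track in order:
        if track == position:
            continue  # no movement, so no change of direction
        new_direction = 1 if track > position else -1
        if new_direction != direction:
            changes += 1
            direction = new_direction
        position = track
    return changes


requests = [30, 70, 115, 130, 110, 80, 20, 25]
print(sstf_order(requests, 120))                                    # [115, 110, 130, 80, 70, 30, 25, 20]
print(count_direction_changes(sstf_order(requests, 120), 120, 90))  # 3
print(count_direction_changes(requests, 120, 90))                   # 4 (FCFS services in arrival order)
```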

Consider six memory partitions of size 200 KB, 400 KB, 600 KB, 500 KB, 300 KB, and 250 KB, where KB refers to kilobyte. These partitions need to be allotted to four processes of sizes 357 KB, 210 KB, 468 KB and 491 KB in that order. If the best fit algorithm is used, which partitions are NOT allotted to any process?

- a)200 KB and 300 KB

- b)200 KB and 250 KB

- c)250 KB and 300 KB

- d)300 KB and 400 KB

Correct answer is option 'A'. Can you explain this answer?

Gargi Menon answered

Best fit allocates the smallest block among those that are large enough for the new process. So the memory blocks are allocated in the order below.

357 ---> 400

210 ---> 250

468 ---> 500

491 ---> 600

So the remaining blocks are of 200 KB and 300 KB
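The allocation can be reproduced with a short simulation. The sketch below assumes fixed partitions, where a process occupies a whole partition and the leftover space is not reused; `best_fit` is an illustrative name.

```python
def best_fit(partitions, processes):
    """Give each process the smallest free partition that is large enough."""
    free = list(partitions)
    allocation = {}
    for size in processes:
        candidates = [p for p in free if p >= size]
        if not candidates:
            allocation[size] = None  # no partition can hold this process
            continue
        chosen = min(candidates)
        free.remove(chosen)
        allocation[size] = chosen
    return allocation, free


allocation, unused = best_fit([200, 400, 600, 500, 300, 250], [357, 210, 468, 491])
print(allocation)  # {357: 400, 210: 250, 468: 500, 491: 600}
print(unused)      # [200, 300]
```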

Suppose the time to service a page fault is on the average 10 milliseconds, while a memory access takes 1 microsecond. Then a 99.99% hit ratio results in average memory access time of

- a)1.9999 milliseconds

- b)1 millisecond

- c)9.999 microseconds

- d)1.9999 microseconds

Correct answer is option 'D'. Can you explain this answer?

Arka Bajaj answered

When a page request arrives, the page table is searched first. If the page is present, it is fetched directly from memory, so the time required is just the memory access time. If the required page is not found, it must first be brought in from secondary storage; this extra time is called the page fault service time. Let the hit ratio be p, the memory access time be t1, and the page fault service time be t2.

Hence, average memory access time = p*t1 + (1-p)*t2

= (99.99*1 + 0.01*10*1000)/100 microseconds = 1.9999 *10^-6 sec

Consider a computer system with ten physical page frames. The system is provided with an access sequence (a1, a2, ..., a20, a1, a2, ..., a20), where each ai is a distinct virtual page number. The difference in the number of page faults between the last-in-first-out page replacement policy and the optimal page replacement policy is __________

[Note that this question was originally Fill-in-the-Blanks question]

- a)0

- b)1

- c)2

- d)3

Correct answer is option 'B'. Can you explain this answer?

Arnab Kapoor answered

LIFO stands for last in, first out. a1 to a10 all result in page faults, so there are 10 page faults from a1 to a10. Then a11 replaces a10 (the last page in is a10), a12 replaces a11, and so on till a20, giving 10 page faults from a11 to a20; a20 is then on top of the stack and a9…a1 remain as they were. In the second pass a1 to a9 are already present, so there are 0 page faults from a1 to a9. a10 replaces a20, a11 replaces a10, and so on, giving 11 page faults from a10 to a20. So the total number of faults is 10+10+11 = 31.

Optimal: a1 to a10 all result in page faults, so there are 10 page faults from a1 to a10. Then a11 replaces a10 because, among a1 to a10, a10 is the one that will be used farthest in the future; a12 replaces a11, and so on. This gives 10 page faults from a11 to a20, after which memory holds a20 and a9…a1. In the second pass a1 to a9 are already present, so there are 0 page faults from a1 to a9. a10 replaces a1 because a1 will not be used again, and so on; a10 to a19 cause 10 page faults. a20 is already present, so there is no page fault for a20. Total faults = 10+10+10 = 30. Difference = 1
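Both counts can be confirmed by simulating the two policies on the reference string. This is a sketch in which pages a1 to a20 are represented by the integers 1 to 20; `lifo_faults` and `optimal_faults` are illustrative names.

```python
def lifo_faults(refs, frames):
    """Page faults when the most recently loaded page is always the victim."""
    memory = []  # pages in load order; the last element was loaded last
    faults = 0
    for page in refs:
        if page in memory:
            continue
        faults += 1
        if len(memory) == frames:
            memory.pop()  # evict the page that came in last
        memory.append(page)
    return faults


def optimal_faults(refs, frames):
    """Page faults when the victim is the page whose next use is farthest away."""
    memory = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in memory:
            continue
        faults += 1
        if len(memory) == frames:
            def next_use(p):
                try:
                    return refs.index(p, i + 1)
                except ValueError:
                    return float("inf")  # never referenced again
            memory.remove(max(memory, key=next_use))
        memory.add(page)
    return faults


refs = list(range(1, 21)) * 2  # a1..a20 followed by a1..a20
print(lifo_faults(refs, 10), optimal_faults(refs, 10))  # prints "31 30"
```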

The size of the virtual memory depends on the size of the

- a)Data bus

- b)Main memory

- c)Address bus

- d)None of these

Correct answer is option 'C'. Can you explain this answer?

Prerna Joshi answered

The size of virtual memory depends on the size of the address bus. Processor generates the memory address as per the size of virtual memory.

If the property of locality of reference is well pronounced in a program:

1. The number of page faults will be more.

2. The number of page faults will be less.

3. The number of page faults will remain the same.

4. Execution will be faster.

- a)1 and 2

- b)2 and 4

- c)3 and 4

- d)None of the above

Correct answer is option 'B'. Can you explain this answer?

Nisha Das answered

If the property of locality of reference is well pronounced, the page to be accessed is more likely to be found in memory, and hence page faults will be fewer. Also, since most accesses are satisfied by the upper level of the memory hierarchy, execution will be faster.

Consider a computer with 8 Mbytes of main memory and a 128 K cache. The cache block size is 4 K. It uses a direct mapping scheme for cache management. How many different main memory blocks can map onto a given physical cache block?

- a)2048

- b)256

- c)64

- d)None of these

Correct answer is option 'C'. Can you explain this answer?

Jaya Chakraborty answered

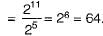

Number of blocks in cache = 128 K / 4 K = 32

Number of blocks in main memory = 8 M / 4 K = 2048

Since the system uses direct mapping, the number of different memory blocks that can map onto a given cache block

= Number of blocks in MM / Number of blocks in cache

= 2048 / 32 = 64
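The block counts behind this ratio can be computed directly. A minimal sketch, assuming the sizes are given in bytes and divide evenly; `blocks_per_cache_line` is an illustrative name.

```python
def blocks_per_cache_line(main_memory_bytes, cache_bytes, block_bytes):
    """Return (memory blocks, cache blocks, memory blocks sharing one cache block)."""
    memory_blocks = main_memory_bytes // block_bytes
    cache_blocks = cache_bytes // block_bytes
    # In a direct-mapped cache, memory block b goes to cache block b % cache_blocks,
    # so each cache block is shared by memory_blocks / cache_blocks memory blocks.
    return memory_blocks, cache_blocks, memory_blocks // cache_blocks


print(blocks_per_cache_line(8 * 2**20, 128 * 2**10, 4 * 2**10))  # prints (2048, 32, 64)
```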

Consider a fully-associative data cache with 32 blocks of 64 bytes each. The cache uses LRU (Least Recently Used) replacement. Consider the following C code to sum together all of the elements of a 64 by 64 two-dimensional array of 64-bit double-precision floating point numbers.double sum (double A [64] [64] ) {

int i, j ;

double sum = 0 ;

for (i = 0 ; i < 64; i++)

for (j = 0; j < 64; j++)

sum += A [i] [j] ;

return sum ;

}Assume all blocks in the cache are initially invalid. How many cache misses will result from the code?- a)256

- b)512

- c)128

- d)1024

Correct answer is option 'B'. Can you explain this answer?

Luminary Institute answered:

Least Recently Used (LRU) replacement algorithm:

The LRU policy uses locality of reference as the basis for its replacement decisions: a block that has not been used for the longest period of time will probably not be used again soon, so it is the one evicted.

The given data:

Array size = 64 x 64 x size of each element

Array size = 64 x 64 x 64 bits

Array size = 64 x 64 x 8 bytes

The data cache has 32 blocks of 64 bytes each.

Cache block size = 64 bytes, so one block holds 64 / 8 = 8 array elements.

The array is traversed in row-major order. The access at i = 0, j = 0 misses and loads one 64-byte block; the accesses for j = 1 to j = 7 then hit, and the next miss occurs at j = 8 (assuming the array starts on a block boundary). No block is touched again after the loop has moved past it.

So every 64 bytes of the array cause exactly one miss:

Number of misses = (64 x 64 x 8 bytes) / 64 bytes

Number of misses = 512

Hence the correct answer is 512.
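The result can also be confirmed by simulating the cache. This is a minimal Python sketch, not the original author's method; it assumes the array starts at byte address 0 (block aligned), and the function name `count_misses` and its parameters are illustrative.

```python
from collections import OrderedDict


def count_misses(rows=64, cols=64, elem_size=8, block_size=64, num_blocks=32):
    """Simulate a fully associative LRU cache for a row-major array traversal."""
    cache = OrderedDict()   # keys are block numbers, ordered from oldest to newest use
    misses = 0
    for i in range(rows):
        for j in range(cols):
            # Byte offset of A[i][j], assuming the array starts at address 0.
            address = (i * cols + j) * elem_size
            block = address // block_size
            if block in cache:
                cache.move_to_end(block)        # hit: mark as most recently used
            else:
                misses += 1
                if len(cache) == num_blocks:
                    cache.popitem(last=False)   # evict the least recently used block
                cache[block] = True
    return misses


print(count_misses())  # prints: 512
```

Because each block is used for 8 consecutive accesses and never revisited, the miss count does not depend on the number of cache blocks in this access pattern; `count_misses(num_blocks=1)` gives the same value.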

A disk has 8 equidistant tracks. The diameters of the innermost and outermost tracks are 1 cm and 8 cm respectively. The innermost track has a storage capacity of 10 MB. What is the total amount of data that can be stored on the disk if it is used with a drive that rotates it with (i) Constant Linear Velocity (ii) Constant Angular Velocity?

- a) (i) 80 MB (ii) 2040 MB

- b) (i) 2040 MB (ii) 80 MB

- c) (i) 80 MB (ii) 360 MB

- d) (i) 360 MB (ii) 80 MB

Correct answer is option 'D'. Can you explain this answer?

Anshu Mehta answered:

Constant linear velocity:

Diameter of inner track = d = 1 cm

Circumference of inner track = 2 * 3.14 * (d/2) = 3.14 cm

Storage capacity of inner track = 10 MB (given)

Total circumference of all 8 equidistant tracks = 2 * 3.14 * (0.5 + 1 + 1.5 + 2 + 2.5 + 3 + 3.5 + 4) = 2 * 3.14 * 18 = 113.04 cm

With CLV the recording density per unit length is the same on every track. Here, 3.14 cm holds 10 MB, so 1 cm holds about 3.18 MB, and 113.04 cm holds 113.04 * 3.18 ≈ 360 MB. Equivalently, each track's capacity is proportional to its diameter, giving 10 * (1 + 2 + ... + 8) = 360 MB.

Total amount of data that can be stored on the disk = 360 MB

Constant angular velocity:

In case of CAV, the disk rotates at a constant angular speed, so every track passes under the head in the same rotation time and stores the same amount of data as the innermost track.

Total amount of data that can be stored on the disk = 8 * 10 = 80 MB

Thus, option (D) is correct.
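Both totals can be reproduced in a few lines. This is a minimal Python sketch under the same assumptions as the answer (CLV capacity proportional to track diameter, CAV capacity equal on every track); the variable names are illustrative.

```python
INNER_CAPACITY_MB = 10
INNER_DIAMETER_CM = 1
diameters_cm = range(1, 9)   # 8 equidistant tracks with diameters 1 cm to 8 cm

# CLV: constant linear density, so a track's capacity scales with its
# circumference, and therefore with its diameter relative to the innermost track.
clv_total = sum(INNER_CAPACITY_MB * d // INNER_DIAMETER_CM for d in diameters_cm)

# CAV: every track holds the same amount of data as the innermost track.
cav_total = INNER_CAPACITY_MB * len(diameters_cm)

print(clv_total, cav_total)  # prints: 360 80
```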

A paging scheme uses a Translation Look-aside Buffer (TLB). A TLB access takes 10 ns and a main memory access takes 50 ns. What is the effective access time (in ns) if the TLB hit ratio is 90% and there is no page fault?

- a) 54

- b) 60

- c) 65

- d) 75

Correct answer is option 'C'. Can you explain this answer?

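Pranab Banerjee answered:

Effective access time = hit ratio * time during hit + miss ratio * time during miss

TLB time = 10 ns, memory time = 50 ns, hit ratio = 90%

Time during hit = TLB access + memory access = 10 + 50 = 60 ns

Time during miss = TLB access + page table access in memory + memory access = 10 + 50 + 50 = 110 ns

E.A.T. = 0.90 * 60 + 0.10 * 110 = 54 + 11 = 65 ns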
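The same formula as a minimal Python sketch. It assumes a single-level page table held in main memory, so a TLB miss costs exactly one extra memory access; the function name `effective_access_time` is illustrative.

```python
def effective_access_time(tlb_ns, mem_ns, hit_ratio):
    """Weighted average of the TLB-hit and TLB-miss access times, in ns."""
    hit_time = tlb_ns + mem_ns        # TLB lookup + the memory access itself
    miss_time = tlb_ns + 2 * mem_ns   # TLB lookup + page table access + memory access
    return hit_ratio * hit_time + (1 - hit_ratio) * miss_time


eat = effective_access_time(tlb_ns=10, mem_ns=50, hit_ratio=0.90)
print(round(eat, 2))  # prints: 65.0
```

With a multi-level page table the miss time would include one memory access per level, so the `2 * mem_ns` term is specific to the single-level assumption.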

Which of the following is not a form of memory?

- a) instruction cache

- b) instruction register

- c) instruction opcode

- d) translation lookaside buffer

Correct answer is option 'C'. Can you explain this answer?

Sonal Nair answered:

- Instruction Cache - Used for storing instructions that are frequently used.

- Instruction Register - Part of the CPU's control unit that stores the instruction currently being executed.

- Instruction Opcode - The portion of a machine language instruction that specifies the operation to be performed.

- Translation Lookaside Buffer - A memory cache that stores recent translations of virtual addresses to physical addresses for faster access.

So, all of the above except Instruction Opcode are memories. Thus, C is the correct choice.

In a virtual memory system, size of virtual address is 32-bit, size of physical address is 30-bit, page size is 4 Kbyte and size of each page table entry is 32-bit. The main memory is byte addressable. Which one of the following is the maximum number of bits that can be used for storing protection and other information in each page table entry?

- a) 2

- b) 10

- c) 12

- d) 14

Correct answer is option 'D'. Can you explain this answer?

Naina Sharma answered:

Virtual memory = 2^32 bytes

Physical memory = 2^30 bytes

Page size = Frame size = 4 KB = 2^2 * 2^10 bytes = 2^12 bytes

Number of frames = Physical memory / Frame size = 2^30 / 2^12 = 2^18

Therefore, number of bits for frame = 18 bits

Page Table Entry Size = Number of bits for frame + Other information

Other information = 32 - 18 = 14 bits

Thus, option (D) is correct.
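The bit counts can be derived mechanically from the given sizes. This is a minimal Python sketch assuming 4 Kbyte means 4 * 2^10 bytes; all constant and variable names are illustrative.

```python
VIRTUAL_ADDRESS_BITS = 32
PHYSICAL_ADDRESS_BITS = 30
PAGE_SIZE = 4 * 2**10     # bytes, assuming 4 Kbyte = 4096 bytes
PTE_BITS = 32

# For a power of two, bit_length() - 1 is its base-2 logarithm.
offset_bits = PAGE_SIZE.bit_length() - 1             # bits for the offset within a page
frame_bits = PHYSICAL_ADDRESS_BITS - offset_bits     # bits needed to name a frame
virtual_pages = 2 ** (VIRTUAL_ADDRESS_BITS - offset_bits)
other_bits = PTE_BITS - frame_bits                   # left for protection and other info

print(offset_bits, frame_bits, other_bits)  # prints: 12 18 14
```

Note that the virtual address width only determines how many page table entries exist (`virtual_pages`), not how wide the frame number in each entry is.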

A processor uses 2-level page tables for virtual to physical address translation. Page tables for both levels are stored in the main memory. Virtual and physical addresses are both 32 bits wide. The memory is byte addressable. For virtual to physical address translation, the 10 most significant bits of the virtual address are used as index into the first level page table while the next 10 bits are used as index into the second level page table. The 12 least significant bits of the virtual address are used as offset within the page. Assume that the page table entries in both levels of page tables are 4 bytes wide. Further, the processor has a translation look-aside buffer (TLB), with a hit rate of 96%. The TLB caches recently used virtual page numbers and the corresponding physical page numbers. The processor also has a physically addressed cache with a hit rate of 90%. Main memory access time is 10 ns, cache access time is 1 ns, and TLB access time is also 1 ns. Assuming that no page faults occur, the average time taken to access a virtual address is approximately (to the nearest 0.5 ns)

- a) 1.5 ns

- b) 2 ns

- c) 3 ns

- d) 4 ns

Correct answer is option 'D'. Can you explain this answer?