GATE Questions

Start Learning for Free

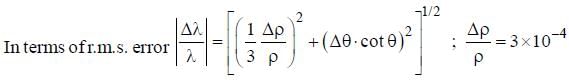

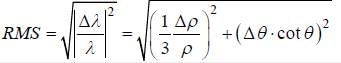

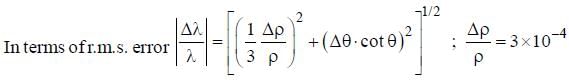

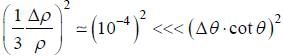

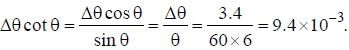

X-rays are reflected from a simple cubic crystal by Bragg reflection. The density of the crystal is measured with an r.m.s. error of 3 parts in 10^4, and the angle that the incident and reflected rays make with the crystal plane is 6°, measured with an r.m.s. error of 3.4 minutes of arc. What is the fractional error in the determination of the wavelength?

- a) 9.4 × 10^-3

- b) 2.5 × 10^-3

- c) 2.5 × 10^-7

- d) None of these

Correct answer is option 'A'. Can you explain this answer?


Verified Answer

X-rays are reflected from a simple cubic crystal by Bragg reflection. ...

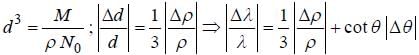

For simple cubic crystal n = 1.

For first order reflection 2d sin θ = λ

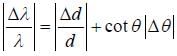

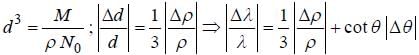

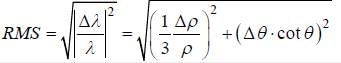

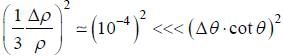

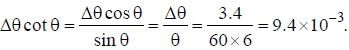

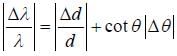

∵

For first order reflection 2d sin θ = λ

∵
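The arithmetic in this solution can be reproduced with a short Python sketch. It assumes, as the solution does, that d scales as ρ^(-1/3) for a simple cubic crystal of known molar mass and that the two r.m.s. errors are independent, so they combine in quadrature; the function name `bragg_wavelength_error` is hypothetical.

```python
import math


def bragg_wavelength_error(rel_density_err: float, theta_deg: float, dtheta_arcmin: float) -> float:
    """Fractional r.m.s. error in lambda from 2 d sin(theta) = lambda.

    Assumes d is proportional to rho**(-1/3) (simple cubic, molar mass known exactly)
    and that the density and angle errors are independent (added in quadrature).
    """
    rel_d_err = rel_density_err / 3.0            # delta d / d = (1/3) delta rho / rho
    theta = math.radians(theta_deg)
    dtheta = math.radians(dtheta_arcmin / 60.0)  # arcminutes -> degrees -> radians
    angle_term = dtheta / math.tan(theta)        # cot(theta) * delta theta
    return math.hypot(rel_d_err, angle_term)     # sqrt(a^2 + b^2)


err = bragg_wavelength_error(3e-4, 6.0, 3.4)
print(f"{err:.2e}")  # prints 9.41e-03
```

Calling `bragg_wavelength_error(0.0, 6.0, 3.4)` drops the density term and gives almost the same number, which confirms that the angle measurement controls the result.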

Most Upvoted Answer


We can use Bragg's Law to relate the angle of reflection to the spacing between crystal planes and the wavelength of the incident X-rays:

nλ = 2d sinθ

where n is an integer (the order of the reflection), λ is the wavelength of the incident X-rays, d is the spacing between crystal planes, and θ is the glancing angle that the incident and reflected rays make with the crystal planes.
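Bragg's Law can be solved either for λ or for θ. The sketch below does both; the function names are hypothetical, θ is taken in degrees, and d and λ may be in any common length unit. The angle function refuses cases where nλ exceeds 2d, since no reflection exists then.

```python
import math


def bragg_wavelength(d: float, theta_deg: float, n: int = 1) -> float:
    """Wavelength from n * lambda = 2 d sin(theta), theta being the glancing angle."""
    return 2.0 * d * math.sin(math.radians(theta_deg)) / n


def bragg_angle_deg(wavelength: float, d: float, n: int = 1) -> float:
    """Glancing angle in degrees for reflection order n."""
    s = n * wavelength / (2.0 * d)
    if not 0.0 < s <= 1.0:
        raise ValueError("no Bragg reflection: n * lambda must not exceed 2d")
    return math.degrees(math.asin(s))


# Hypothetical plane spacing d = 1.0 (arbitrary length unit) at theta = 30 degrees.
print(bragg_wavelength(1.0, 30.0))  # approximately 1.0
```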

For a simple cubic crystal, the spacing between crystal planes is given by:

d = a / √(h^2 + k^2 + l^2)

where a is the edge length of the cubic unit cell (the lattice constant) and h, k, and l are the Miller indices of the crystal plane.
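A minimal sketch of the cubic plane-spacing formula follows; `d_spacing_cubic` is a hypothetical name and a = 1.0 is a placeholder lattice constant. Because a enters only as an overall factor, a fractional error in a carries over unchanged to d for every (hkl).

```python
import math


def d_spacing_cubic(a: float, h: int, k: int, l: int) -> float:
    """Interplanar spacing d = a / sqrt(h^2 + k^2 + l^2) for a cubic lattice."""
    if (h, k, l) == (0, 0, 0):
        raise ValueError("Miller indices cannot all be zero")
    return a / math.sqrt(h * h + k * k + l * l)


# Placeholder lattice constant a = 1.0: spacing of a few low-index planes.
for hkl in [(1, 0, 0), (1, 1, 0), (1, 1, 1), (2, 0, 0)]:
    print(hkl, round(d_spacing_cubic(1.0, *hkl), 4))
```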

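Since the density is measured with an r.m.s. error of 3 parts in 10^4, we can estimate the error in the length of the unit cell. For a simple cubic crystal with one atom per unit cell, ρ = M / (N_A a^3), where M is the molar mass and N_A is Avogadro's number, so a ∝ ρ^(-1/3) and:

Δa / a = 1/3 (Δρ / ρ)

where Δρ / ρ is the fractional error in density. Plugging in the given values, we get:

Δa / a = 1/3 (3/10^4) = 1/10^4

Because d is a divided by a constant for any set of Miller indices, the fractional error in the spacing between crystal planes is the same:

Δd / d = Δa / a = 1/10^4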
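The density-to-lattice-constant step can be checked numerically: compute a = (nM / (ρ N_A))^(1/3), perturb ρ by 3 parts in 10^4, and compare. The density and molar mass below are placeholders, not data for any real crystal, and `lattice_constant` is a hypothetical helper; only the fractional change matters here.

```python
AVOGADRO = 6.02214076e23  # mol^-1, exact value in the 2019 SI


def lattice_constant(density: float, molar_mass: float, atoms_per_cell: int = 1) -> float:
    """a = (n M / (rho N_A))**(1/3); in cm if density is g/cm^3 and M is g/mol."""
    return (atoms_per_cell * molar_mass / (density * AVOGADRO)) ** (1.0 / 3.0)


# Placeholder inputs (not a real crystal): rho = 10 g/cm^3, M = 200 g/mol.
rho, molar_mass = 10.0, 200.0
rel_rho_err = 3e-4
a0 = lattice_constant(rho, molar_mass)
a1 = lattice_constant(rho * (1 + rel_rho_err), molar_mass)
print(abs(a1 - a0) / a0)  # close to (1/3) * 3e-4 = 1e-4
```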

Now we can propagate these errors to the wavelength. For first-order reflection (n = 1), Bragg's Law gives:

λ = 2d sinθ

Taking logarithms and differentiating, Δλ / λ = Δd / d + cotθ Δθ. Because the two r.m.s. errors come from independent measurements, they add in quadrature:

(Δλ / λ)^2 = (Δd / d)^2 + (cotθ Δθ)^2

where Δθ is the error in the glancing angle, in radians. With θ = 6° (cot 6° ≈ 9.514) and Δθ = 3.4 minutes of arc ≈ 9.89 × 10^-4 rad, the angular term is:

cotθ Δθ ≈ 9.514 × 9.89 × 10^-4 ≈ 9.41 × 10^-3

This is far larger than the density term Δd / d, so:

Δλ / λ ≈ 9.4 × 10^-3

Therefore, the fractional error in the determination of the wavelength is about 9.4 × 10^-3, which is option (a).
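The error propagation for this question can also be cross-checked by simulation: draw the density and the angle with the quoted r.m.s. errors, recompute λ from 2d sinθ = λ, and measure the spread of λ relative to its true value. This Monte Carlo sketch assumes Gaussian, independent errors (the question gives only r.m.s. values) and a simple cubic crystal of known molar mass; `monte_carlo_rel_error` is a hypothetical name, and this is a check, not the method used in the answers.

```python
import math
import random
import statistics


def monte_carlo_rel_error(
    rel_density_err: float,
    theta_deg: float,
    dtheta_arcmin: float,
    trials: int = 100_000,
    seed: int = 0,
) -> float:
    """Standard deviation of lambda / lambda_true under Gaussian r.m.s. errors."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    theta0 = math.radians(theta_deg)
    sigma_theta = math.radians(dtheta_arcmin / 60.0)  # arcminutes -> radians
    ratios = []
    for _ in range(trials):
        rho_ratio = 1.0 + rng.gauss(0.0, rel_density_err)  # measured rho / true rho
        d_ratio = rho_ratio ** (-1.0 / 3.0)  # d is proportional to rho**(-1/3)
        theta = theta0 + rng.gauss(0.0, sigma_theta)  # measured glancing angle
        # lambda = 2 d sin(theta), so lambda / lambda_true = d_ratio * sin(theta) / sin(theta0)
        ratios.append(d_ratio * math.sin(theta) / math.sin(theta0))
    return statistics.pstdev(ratios)


print(f"{monte_carlo_rel_error(3e-4, 6.0, 3.4):.2e}")
```

Under these assumptions the printed spread should land close to 9.4 × 10^-3; with 100,000 trials the sampling noise is well below the second significant figure.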

